First, we will outline an $$$\mathcal O(N^2)$$$ solution. We can view the original list as separate singleton intervals of elements, and the merge operation as merging two adjacent intervals. For example, suppose we start with $$$[[1, 1], [2, 2], [3, 3], [4, 4]]$$$. Merging the first two elements gives $$$[[1, 2], [3, 3], [4, 4]]$$$. We then add the sum of the elements in the new interval to our score. From here on, let $$$sum(l, r)$$$ denote the sum of the elements of $$$A$$$ at indices $$$l$$$ through $$$r$$$, inclusive.

By linearity of expectation, it suffices to find, for every possible interval $$$[l, r]$$$, the probability that we form this interval at some point. When we merge two consecutive intervals $$$[a, b], [b+1, c]$$$, we always merge across the gap between two consecutive indices ($$$b, b+1$$$). Note that the gap between two adjacent elements indexed at $$$i, i+1$$$ is merged exactly once by the end of the entire game. Therefore, we form $$$[l, r]$$$ at some point exactly when all of the gaps inside $$$[l, r]$$$ are merged before the gap at $$$l-1, l$$$ and the gap at $$$r, r+1$$$. Say there are $$$bad$$$ gaps at the end points ($$$l-1, l$$$, or $$$r, r+1$$$). $$$bad$$$ is always in $$$[0, 2]$$$: with $$$0$$$ indexing, the left gap exists unless $$$l = 0$$$, and the right gap exists unless $$$r = N - 1$$$. Then let $$$good$$$ be the number of gaps inside $$$[l, r]$$$, which is $$$r-l$$$. We want all of the good gaps to be merged before any of the bad gaps. Only the relative order of these $$$good+bad$$$ gaps matters, and the bad gaps must come last among them, so the probability this happens is $$$\frac 1{\binom{good+bad}{bad}}$$$. Thus for each subarray, its contribution is $$$\frac{sum(l, r)}{\binom{good+bad}{bad}}$$$, and we can compute this in $$$\mathcal O(1)$$$ using prefix sums. We can sum this across all subarrays in $$$\mathcal O(N^2)$$$. Note that we have to skip the subarrays of length $$$1$$$, since they are never created by a merge; this is easy to do.
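Here is a minimal Python sketch of the quadratic solution described above, together with a brute force that averages the score over every order of merging the gaps. The function names are my own, and I use exact `Fraction` arithmetic only to keep the check simple; if the answer is wanted modulo a prime, the divisions would become modular inverses instead.

```python
from fractions import Fraction
from itertools import permutations
from math import comb


def expected_score_quadratic(A):
    """Sum sum(l, r) / C(good + bad, bad) over all intervals of length >= 2."""
    n = len(A)
    prefix = [0]
    for x in A:
        prefix.append(prefix[-1] + x)
    total = Fraction(0)
    for l in range(n):
        for r in range(l + 1, n):  # r > l skips the length-1 intervals
            good = r - l                      # gaps inside [l, r]
            bad = (l > 0) + (r < n - 1)       # gaps touching the end points
            total += Fraction(prefix[r + 1] - prefix[l], comb(good + bad, bad))
    return total


def expected_score_bruteforce(A):
    """Average the score over all (N-1)! orders in which the gaps are merged."""
    n = len(A)
    prefix = [0]
    for x in A:
        prefix.append(prefix[-1] + x)
    total = 0
    orders = 0
    for order in permutations(range(n - 1)):
        start = list(range(n))  # start[i]: left end of the block whose right end is i
        end = list(range(n))    # end[i]: right end of the block whose left end is i
        for g in order:         # gap g lies between indices g and g + 1
            lo, hi = start[g], end[g + 1]
            start[hi], end[lo] = lo, hi
            total += prefix[hi + 1] - prefix[lo]
        orders += 1
    return Fraction(total, orders)
```

For example, with `A = [1, 2, 3]` the two merge orders score $$$3 + 6 = 9$$$ and $$$5 + 6 = 11$$$, so both functions return $$$10$$$.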

Now we will speed up this computation. First, we can take care of the subarrays with an endpoint at $$$0$$$ or $$$N-1$$$. There are $$$\mathcal O(N)$$$ of them, and we can calculate their contribution in $$$\mathcal O(1)$$$ each. Now we assume $$$1 \leq l \leq r \leq N-2$$$. For any subarray satisfying this, $$$bad = 2$$$. Thus, over all subarrays of this form, we want to find the sum of $$$\frac{sum(l, r)}{\binom{r-l+2}{2}} = \frac{2 \cdot sum(l, r)}{(r-l+1)(r-l+2)}$$$.

To compute this sum, we solve another problem. Consider an array $$$a$$$ with a cost array $$$cost$$$, both of length $$$n$$$. Then for every subarray $$$s$$$ of $$$a$$$, we sum up $$$(\text{sum of elements in }s)\cdot cost[\text{length of }s]$$$. Think of this $$$cost$$$ array as the cost of a subarray based on its length. To compute the sum we want, we would have our $$$a$$$ array be $$$A[1], \dots, A[N-2]$$$. Since $$$\frac 2{k(k+1)} = 2\left(\frac 1k - \frac 1{k+1}\right)$$$ for a subarray of length $$$k$$$, we can run the subproblem once with cost $$$\frac 1{\text{length of subarray}}$$$ and once with cost $$$\frac 1{\text{length of subarray}+1}$$$, subtract the two results, and multiply by $$$2$$$. Thus, we simply have to solve this subproblem in $$$\mathcal O(n)$$$ (of course, we subtract the contributions of the subarrays of length $$$1$$$ from our final answer, as we don't need them).

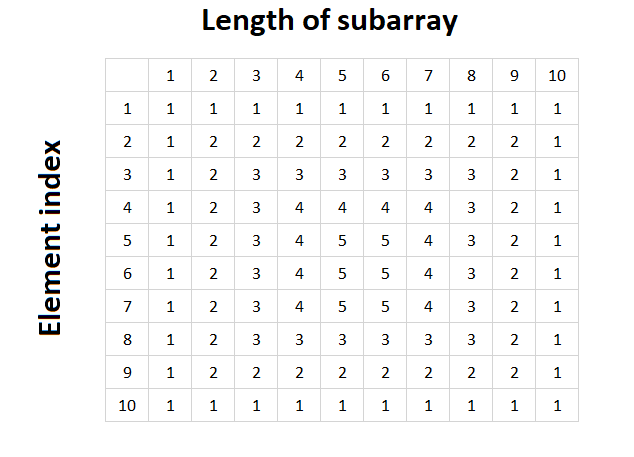

Now consider the array $$$a$$$ and cost array $$$cost$$$, both of length $$$n$$$. I've been using $$$0$$$ indexing thus far, but now let's switch to $$$1$$$ indexing because it makes this easier. Let's figure out how much each individual element contributes. To do this, we must know how many subarrays of each length $$$l$$$ contain it (here $$$l$$$ is a length, not a left endpoint). I think this is best illustrated with the case of $$$n = 10$$$ through this table. This table stores the number of times the element at index $$$i$$$ occurs in a subarray of length $$$l$$$.
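If you want to regenerate such a table yourself, here is a small Python sketch. It counts the valid starting positions directly; the helper names are mine. One pattern you can check against its output is that every entry equals $$$\min(i, l, n-i+1, n-l+1)$$$.

```python
def occurrences(n, i, length):
    """Number of subarrays of the given length, in an array of size n,
    that contain the 1-indexed position i."""
    first_start = max(1, i - length + 1)   # earliest start that still covers i
    last_start = min(i, n - length + 1)    # latest start that fits in the array
    return max(0, last_start - first_start + 1)


def occurrence_table(n):
    """table[length - 1][i - 1] = occurrences(n, i, length)."""
    return [
        [occurrences(n, i, length) for i in range(1, n + 1)]
        for length in range(1, n + 1)
    ]
```

Printing the rows of `occurrence_table(10)` gives one row per length $$$l$$$ and one column per index $$$i$$$.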

Using the patterns observed in this table, which are easily provable, you can compute everything with prefix sums over $$$cost$$$. The contribution of the first element is $$$a[1]$$$ times the sum of all elements of the cost array. The second element's weight is the first element's cost sum plus the sum of an additional block in the middle, $$$cost[2] + \dots + cost[n-1]$$$. Each step further inward adds a shorter middle block, and the weights are symmetric about the center of the array. You can compute the contributions of all elements in $$$\mathcal O(n)$$$ total, and summing them up yields the solution.
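A minimal Python sketch of this subproblem, assuming `cost` is passed as a list with `cost[0]` unused so that `cost[k]` is the cost of length $$$k$$$ (my convention, not necessarily the one in the linked code). The naive version is only there to check the fast one.

```python
def weighted_subarray_sum(a, cost):
    """Sum over all subarrays s of a of sum(s) * cost[len(s)], in O(n).

    a is a plain 0-indexed list; cost has len(a) + 1 entries, cost[0] unused.
    """
    n = len(a)
    # pre[k] = cost[1] + ... + cost[k]
    pre = [0] * (n + 1)
    for k in range(1, n + 1):
        pre[k] = pre[k - 1] + cost[k]
    # weight[i] (1-indexed) = sum over lengths of occurrences * cost[length]
    weight = [0] * (n + 2)
    for i in range(1, (n + 1) // 2 + 1):
        # Moving one step inward adds the middle block cost[i..n-i+1].
        weight[i] = weight[i - 1] + pre[n - i + 1] - pre[i - 1]
        weight[n + 1 - i] = weight[i]  # the table is symmetric
    return sum(a[i - 1] * weight[i] for i in range(1, n + 1))


def weighted_subarray_sum_naive(a, cost):
    """Same quantity in O(n^2), used only as a reference."""
    n = len(a)
    total = 0
    for l in range(n):
        running = 0
        for r in range(l, n):
            running += a[r]
            total += running * cost[r - l + 1]
    return total
```

For instance, with `a = [1, 2, 3]` and `cost = [0, 1, 10, 100]`, the weights are $$$111, 121, 111$$$ and both functions return $$$686$$$.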

Thus, the overall solution runs in $$$\mathcal O(N)$$$.
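Putting the pieces together, a sketch of the whole linear solution could look like the following. It is written in Python with exact `Fraction` values for readability, so the arithmetic itself is not constant time; the function names are mine. As a small shortcut, I fold $$$\frac 2{k(k+1)}$$$ into a single cost array and set the cost of length $$$1$$$ to zero, instead of running the subproblem twice and subtracting.

```python
from fractions import Fraction


def weighted_subarray_sum(a, cost):
    """Sum over all subarrays s of a of sum(s) * cost[len(s)] (cost[0] unused)."""
    n = len(a)
    pre = [0] * (n + 1)                      # prefix sums of cost
    for k in range(1, n + 1):
        pre[k] = pre[k - 1] + cost[k]
    weight = [0] * (n + 2)                   # per-element weights, 1-indexed
    for i in range(1, (n + 1) // 2 + 1):
        weight[i] = weight[i - 1] + pre[n - i + 1] - pre[i - 1]
        weight[n + 1 - i] = weight[i]
    return sum(a[i - 1] * weight[i] for i in range(1, n + 1))


def expected_score_linear(A):
    """Expected total score, using O(N) arithmetic operations."""
    n = len(A)
    if n < 2:
        return Fraction(0)                   # no merges happen at all
    prefix = [0]
    for x in A:
        prefix.append(prefix[-1] + x)
    # The whole array [0, N-1] has bad = 0 and is always formed.
    total = Fraction(prefix[n])
    # Proper prefixes [0, r]: good = r, bad = 1, probability 1 / (r + 1).
    for r in range(1, n - 1):
        total += Fraction(prefix[r + 1], r + 1)
    # Proper suffixes [l, N-1]: good = N-1-l, bad = 1, probability 1 / (N - l).
    for l in range(1, n - 1):
        total += Fraction(prefix[n] - prefix[l], n - l)
    # Interior subarrays (1 <= l < r <= N-2): bad = 2, probability 2 / (k (k+1)).
    inner = A[1:n - 1]
    m = len(inner)
    cost = [Fraction(0)] * (m + 1)           # cost[1] stays 0: skip length 1
    for k in range(2, m + 1):
        cost[k] = Fraction(2, k * (k + 1))
    total += weighted_subarray_sum(inner, cost)
    return total
```

On `A = [1, 2, 3]` this returns $$$10$$$, and on `A = [1, 1, 1, 1]` it returns $$$\frac{26}{3}$$$.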

For more details, check my code here.