For a better experience please click here.

Link to the question: Luogu, AtCoder

Preface

The very first generating function and polynomial problem solved in my life!

This blog is a detailed explanation and extension of the official editorial. I will try my best to explain the mathematical expressions and their deeper meanings so that you may understand if you are also new to generating functions and polynomials

Our Goal

Let $$$X$$$ be our random variable, which is the number of rolls after which all $$$N$$$-sides have shown up once for the first time. Its probability mass function $$$p_i=\mathbb P(X=i)$$$ is just the probability that all $$$N$$$-sides have shown up at exactly $$$i$$$-th roll.

Then, what we are looking for is

$$$\mathbb E(X)=\sum_{i=0}^\infty ip_i=0p_0+1p_1+2p_2+\cdots$$$

$$$\mathbb E(X^2)=\sum_{i=0}^\infty i^2p_i=0^2p_0+1^2p_1+2^2p_2+\cdots$$$

$$$\mathbb E(X^3)=\sum_{i=0}^\infty i^3p_i=0^3p_0+1^3p_1+2^3p_2+\cdots$$$

$$$\vdots$$$

$$$\mathbb E(X^M)=\sum_{i=0}^\infty i^Mp_i$$$

(Actually, $$$p_i=0$$$ if $$$i<n,$$$ but it doesn't matter.)

Derivation: Generating Functions

Ordinary and Exponential Generating Functions

For a sequence $$${a_i}_{i=0}^\infty,$$$ there are two formal power series associated with it:

- Its Ordinary Generating Function (OGF) is

and we denote it $$${a_i}_{i=0}^\infty\xrightarrow{\text{OGF}}f(x).$$$ * Its Exponential Generating Function (EGF) is

and we denote it $$${a_i}_{i=0}^\infty\xrightarrow{\text{EGF}}F(x).$$$

We can see that the EGF of $$${a_i}_{i=0}^\infty$$$ is just the OGF of $$${\frac{a_i}{i!}}_{i=0}^\infty.$$$

Probability Generating Functions

In particular, if the sequence $$${p_i}_{i=0}^\infty$$$ is the probability mass function of a discrete random variable $$$X$$$ taking non-negative integer values, then its OGF is also called the Probability Generating Function (PGF) of the random variable $$$X,$$$ written

Our first goal is to find the PGF of our random variable $$$X,$$$ and then we will show how to use that function to derive the final answer.

Finding the PGF of $$$X$$$

It is difficult to consider "**the first time** when all $$$N$$$-sides have shown", so we drop that condition. We continue rolling, not stopping when all $$$N$$$-sides have already shown up, and let $$$a_i$$$ be the probability that all $$$N$$$-sides have shown up after $$$i$$$ rolls.

Then, we have $$$p_i=a_i-a_{i-1}.$$$ This is because subtracting the former term is equivalent to subtracting the probability that all $$$N$$$-sides have shown up before the $$$i$$$-th roll, and the probability that all $$$N$$$-sides have shown up for the first time at exactly the $$$i$$$-th roll remains.

We try to find the OGF of $$${a_i}_{i=0}^\infty.$$$

(A subtlety: although $$$a_i$$$ stores the probability of something, its OGF is not a PGF because $$$a_i$$$ is not a probability mass function, but just a tool for us to find $$$p_i.$$$)

However, it is easier to find its EGF first than to find its OGF directly. This is due to the properties of products of OGFs and EGFs.

Products of OGFs and EGFs

Let $$${a_i}_{i=0}^\infty$$$ and $$${b_i}_{i=0}^\infty$$$ be sequences.

OGFs

Let $$$f(x)=\sum_{i=0}^\infty a_ix^i,g(x)=\sum_{i=0}^\infty b_ix^i$$$ be their OGFs, then its product

is the OGF of $$${c_i}_{i=0}^\infty,$$$ where $$$c_i=\sum_{k=0}^i a_kb_{i-k}.$$$

The way to understand its meaning is: let $$$a_i$$$ be the number of ways to take $$$i$$$ balls from a box, and $$$b_i$$$ be the number of ways to take $$$i$$$ balls from another box, then $$$c_i$$$ is the number of ways to take a total of $$$i$$$ balls from the two boxes.

Indeed, you can take $$$k$$$ balls from the first box, with $$$a_k$$$ ways, and $$$i-k$$$ balls from the second box, with $$$b_{i-k}$$$ ways. So, the number of ways to take $$$i$$$ balls from the first box and $$$i-k$$$ balls from the second box is $$$a_ib_{i-k},$$$ and you sum over all possible $$$k,$$$ which is from $$$0$$$ to $$$i.$$$

EGFs

Let $$$F(x)=\sum_{i=0}^\infty a_i\frac{x^i}{i!},G(x)=\sum_{i=0}^\infty b_i\frac{x^i}{i!}$$$ be their EGFs, then its product

is the EGF of $$${d_i}_{i=0}^\infty,$$$ where $$$d_i=\sum_{k=0}^i \binom{i}{k}a_kb_{i-k}.$$$

The difference between the products of OGFs and EGFs is a binomial number. The way to understand its meaning is: let $$$a_i$$$ be the number of ways to take $$$i$$$ balls from a box and align them in some order, and $$$b_i$$$ be the number of ways to take $$$i$$$ balls from another box and align them in some order, then $$$c_i$$$ is the number of ways to take a total of $$$i$$$ balls from the two boxes and align them in some order.

Similarly, the number of ways to take $$$i$$$ balls from the first box in some order and $$$i-k$$$ balls from the second box in some order is $$$a_ib_{i-k}.$$$ Next, you have $$$\binom{i}{k}$$$ ways to choose $$$k$$$ slots from the $$$i$$$ slots for the balls from the first box. Thus, the total way to choose and align them is $$$\binom{i}{k}a_kb_{i-k}.$$$

When we roll the dice, we get a sequence of the side that shows up at each time, so there is an order. That's why it is easier to find the EGF of $$${a_i}_{i=0}^\infty.$$$

When we roll the dice once, each face shows up with probability $$$\frac{1}{N}.$$$ If we consider a specific side, for example, $$$1,$$$ then the probability of getting all $$$1$$$'s in $$$i$$$ rolls is $$$\frac{1}{N^i}.$$$ The EGF of the probability of getting all $$$1$$$'s in $$$i$$$ rolls is therefore

Note that we drop the case $$$i=0$$$ because we want that side to show up at least once.

Symmetrically, all $$$N$$$-sides have the same EGF. And the EGF of the probability of getting all $$$N$$$-sides in $$$i$$$ rolls is

We are just taking the product of the EGF corresponding to each side. As they are EGFs, their product automatically deals with the order of the sides that show up.

An example

If the derivation above seems a bit non-intuitive, we may verify it with $$$N=2,$$$ a dice with two sides.

Trivially, $$$a_0=a_1=0.$$$

If we roll the dice twice, then $$$12,21$$$ are two ways that make both sides show up. There are in total $$$4$$$ equally possible results ($$$11,12,21,22$$$), so $$$a_2=\frac{2}{4}=\frac{1}{2}.$$$

If we roll the dice three times, then $$$112,121,211,221,212,122$$$ are the ways to get both sides showing up, so $$$a_3=\frac{6}{8}=\frac{3}{4}.$$$

Similarly, $$$a_4=\frac{14}{16}=\frac{7}{8}.$$$

Therefore, $$${a_i}_{i=0}^\infty \xrightarrow{\text{EGF}}F(x)=\sum_{i=0}^\infty a_i\frac{x^i}{i!}$$$

$$$=0+0x+\frac{1}{2!}\cdot \frac{1}{2}x^2+\frac{1}{3!}\cdot \frac{3}{4}x^3+\frac{1}{4!}\cdot\frac{7}{8}x^4+\cdots$$$

$$$=\frac{1}{4}x^2+\frac{1}{8}x^3+\frac{7}{192}x^4+\cdots.$$$

Using our formula, $$$(e^\frac{x}{2}-1)^2=(\frac{1}{2}x+\frac{1}{4}\cdot\frac{x^2}{2!}+\frac{1}{8}\cdot\frac{x^3}{3!}+\cdots)^2$$$

$$$=(\frac{1}{2}x+\frac{1}{8}x^2+\frac{1}{48}x^3+\cdots)^2$$$

$$$=\frac{1}{4}x^2+\frac{1}{8}x^3+\frac{7}{192}x^4+\cdots$$$

which matches with our "brute-forcely" calculated $$$F(x).$$$

Now that we have the EGF of $$${a_i},$$$ we convert it to its OGF.

Conversion between OGFs and EGFs

There are two laws:

- If

($$$f(x)$$$ and $$$F(x)$$$ are the OGF and EGF of the same sequence) and

then

This law tells us there is a sense of 'linearity' between sequences and their GFs. When doing conversions, we can deal with separate terms and add them up.

- For all constant $$$k,$$$

The OGF is a geometric series and the EGF is the exponential function.

With the two rules above, we have $$$F(x)=(e^\frac{x}{N}-1)^N$$$

$$$=\sum_{r=0}^N\binom{N}{r}(-1)^{N-r}e^{\frac{rx}{N}}\xleftarrow{\text{EGF}} {a_i}\xrightarrow{\text{OGF}}f(x)=\sum_{r=0}^N\binom{N}{r}(-1)^{N-r}\frac{1}{1-\frac{rx}{N}}.$$$

And finally, we compute the PGF of $$${p_i}_{i=0}^\infty,$$$ which is $$$g(x)=\sum_{i=0}^\infty p_ix^i$$$

$$$=a_0+\sum_{i=1}^\infty (a_i-a_{i-1})x^i$$$ (since $$$p_i=a_i-a_{i-1}$$$)

$$$=\sum_{i=0}^\infty a_ix^i-\sum_{i=0}^\infty a_ix^{i+1}$$$

$$$=f(x)-xf(x)$$$

$$$=(1-x)f(x)$$$

(Note: multiplying an OGF by $$$1-x$$$ is the same as subtracting each term in the sequence by its former term. On the other hand, its inverse action, multiplying by $$$\frac{1}{1-x},$$$ is the same as taking the prefix sum of each term.)

Though it is a 'nasty' formula, we will show later how to compute it in a code.

Spoil alert: there is a much easier derivation of $$$g(x)$$$ at the end of this blog.

Here is the final step: Calculating the expected value of $$$X,X^2,X^3,\cdots$$$ from the PGF.

Moment Generating Functions

Similar to PGF, the OGF of a probability mass function, the Moment Generating Function (MGF) is the EGF of something else.

The MGF of a random variable $$$X$$$ is

Here are some algebraic manipulations:

which is exactly the EGF of our answer!

(Note: actually the summation with expected values is a more general definition of MGF, as it can be defined for random variables that are not only taking values of non-negative integers.)

Lastly, for the random variable $$$X$$$ taking the value of non-negative integers, like in our problem, we have

by definition.

Therefore, our final goal is to find the coefficients up to $$$x^M$$$ of the MGF of $$$X,$$$ which is $$$g(e^x).$$$

Implementation: Convolutions

Prerequisites: Convolution and inverse series.

In the implementation, I used the class modint998244353 and convolution() from Atcoder Library for calculations in $$$\bmod 998244353$$$ and FFT.

For how FFT works and more, see this blog.

We do this by explicitly calculating the PGF $$$g(x),$$$ and then the MGF $$$g(e^x).$$$

Calculating $$$g(x)$$$

We have the explicit formula

The summation $$$\sum_{r=0}^N\binom{N}{r}(-1)^{N-r}\frac{1}{1-\frac{rx}{N}}$$$ can be written as a rational function $$$\frac{p(x)}{q(x)},$$$ with $$$p(x)$$$ and $$$q(x)$$$ each a polynomial with degree at most $$$N+1.$$$

As it is the sum of a bunch of fractions in the form $$$\frac{a}{1-bx},$$$ we may combine them in some order using convolution().

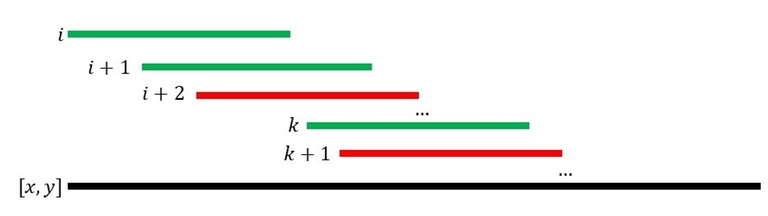

By FFT, the time complexity of multiplying two polynomials is $$$O(n\log n),$$$ where $$$n$$$ is the higher degree of the polynomials. So, the best way to combine the fractions is by Divide-and-Conquer: Divide the summations in half, calculate each half to get a rational function, and then combine the two rational functions.

Here is the class of rational functions and its addition method:

using mint=modint998244353; //calculation in mod 998244353

using ply=vector<mint>; //polynomials

struct R{ply p,q; //numerator and denominator

R operator+(R b){

ply rp(add(convolution(q,b.p),convolution(p,b.q))),

rq(convolution(q,b.q));

return{rp,rq};

}

};

ply add(ply a,ply b){ //adding two polynomials

if(a.size()<b.size())swap(a,b);

Frn0(i,0,b.size())a[i]+=b[i];

return a;

}

Here is the divide-and-conquer summation of rational functions, stored in vector<R>a.

R sum(vector<R>&a,int l,int r){ //summing from a[l] to a[r]

if(l==r)return a[l];

int md((l+r)/2);

return sum(a,l,md)+sum(a,md+1,r);

}

The summation is done. For the remaining factor $$$1-x,$$$ there are two ways:

- Multiply it by the numerator. This can be done by subtracting each term by its former term. Note that the degree will increase by $$$1.$$$

- (used here) As the denominator already has a $$$1-x$$$ factor (check the summands), we can remove this factor by taking the prefix sum of each term, which is the same as multiplying $$$\frac{1}{1+x}=1+x+x^2+x^3+\cdots.$$$

And now, we obtain the PGF $$$g(x)$$$ as a rational function.

From $$$g(x)$$$ to $$$g(e^x)$$$

As $$$g(x)=\frac{p(x)}{q(x)}$$$ is a rational function. We calculate $$$p(e^x)$$$ and $$$q(e^x)$$$ separately and use inverse series to combine them. As we only need the coefficients from $$$x$$$ to $$$x^M,$$$ we may take the results $$$\bmod x^{M+1}.$$$

--- For a polynomial $$$P(x)=\sum_{i=0}^n c_ix^i, P(e^x)=\sum_{i=0}^n c_ie^{ix}.$$$

Using our trick of conversion between EGFs and OGFs again:

So we may calculate the summation on the right hand side by the same Divide-and-Conquer technique. Use inverse series to get its coefficients in power series, and then divide the $$$i$$$-th term by $$$i!$$$ to obtain the left hand side.

The following is an implementation of inverse series $$$\bmod x^m.$$$

ply pinv(ply f,int m){

ply g({f[0].inv()});

f.resize(m);

for(int s(2);s<2*m;s<<=1){

auto tmp(convolution(convolution(g,g),

ply(f.begin(),f.begin()+min(s,m))));

g.resize(min(s,m));

Frn0(i,0,min(s,m))g[i]=2*g[i]-tmp[i];

}

return g;

}

The following is calculating $$$f(e^x)\bmod x^m.$$$

ply fex(ply f,int m){

vector<R>a(f.size());

Frn0(i,0,f.size())a[i].p={f[i]},a[i].q={1,-i};

R s(sum(a,0,a.size()-1)); //DC summation

auto re(convolution(s.p,pinv(s.q,m)));

re.resize(m);

Frn0(i,0,m)re[i]/=fc[i]; //dividing by i!

return re;

}

Code

Time Complexity: $$$O(n\log ^2n +m\log m)$$$ (DC summation and inverse series)

Memory Complexity: $$$O(n+m)$$$

Further details are annotated.

//This program is written by Brian Peng.

#include<bits/stdc++.h>

#include<atcoder/convolution>

using namespace std;

using namespace atcoder;

#define Rd(a) (a=rd())

#define Gc(a) (a=getchar())

#define Pc(a) putchar(a)

int rd(){

int x;char c(getchar());bool k;

while(!isdigit(c)&&c^'-')if(Gc(c)==EOF)exit(0);

c^'-'?(k=1,x=c&15):k=x=0;

while(isdigit(Gc(c)))x=x*10+(c&15);

return k?x:-x;

}

void wr(int a){

if(a<0)Pc('-'),a=-a;

if(a<=9)Pc(a|'0');

else wr(a/10),Pc((a%10)|'0');

}

signed const INF(0x3f3f3f3f),NINF(0xc3c3c3c3);

long long const LINF(0x3f3f3f3f3f3f3f3fLL),LNINF(0xc3c3c3c3c3c3c3c3LL);

#define Ps Pc(' ')

#define Pe Pc('\n')

#define Frn0(i,a,b) for(int i(a);i<(b);++i)

#define Frn1(i,a,b) for(int i(a);i<=(b);++i)

#define Frn_(i,a,b) for(int i(a);i>=(b);--i)

#define Mst(a,b) memset(a,b,sizeof(a))

#define All(a) (a).begin(),(a).end()

#define File(a) freopen(a".in","r",stdin),freopen(a".out","w",stdout)

using mint=modint998244353;

using ply=vector<mint>;

#define N (200010)

int n,m;

mint fc[N]{1};

ply ans;

ply pinv(ply f,int m);

ply add(ply a,ply b);

struct R{ply p,q;

R operator+(R b){

ply rp(add(convolution(q,b.p),convolution(p,b.q))),

rq(convolution(q,b.q));

return{rp,rq};

}

}g;

vector<R>a;

R sum(vector<R>&a,int l,int r);

mint cmb(int n,int r){return fc[n]/(fc[r]*fc[n-r]);} //binomial numbers

ply fex(ply f,int m);

signed main(){

Rd(n),Rd(m);

Frn1(i,1,max(n,m))fc[i]=fc[i-1]*i; //factorials

a.resize(n+1);

mint niv(mint(n).inv());

Frn1(i,0,n){

a[i].p={(((n-i)&1)?-1:1)*cmb(n,i)};

a[i].q={1,-niv*i};

} //the terms of the summation in g(x)

g=sum(a,0,n);

Frn0(i,1,g.q.size())g.q[i]+=g.q[i-1]; //denominator dividing 1-x

//by taking prefix sums, obtaining PGF

ans=convolution(fex(g.p,m+1),pinv(fex(g.q,m+1),m+1));

//obtaining MGF

Frn1(i,1,m)wr((ans[i]*fc[i]).val()),Pe;

//remember to multiply by i! as it is an EGF

exit(0);

}

ply pinv(ply f,int m){

ply g({f[0].inv()});

f.resize(m);

for(int s(2);s<2*m;s<<=1){

auto tmp(convolution(convolution(g,g),

ply(f.begin(),f.begin()+min(s,m))));

g.resize(min(s,m));

Frn0(i,0,min(s,m))g[i]=2*g[i]-tmp[i];

}

return g;

}

ply add(ply a,ply b){

if(a.size()<b.size())swap(a,b);

Frn0(i,0,b.size())a[i]+=b[i];

return a;

}

R sum(vector<R>&a,int l,int r){

if(l==r)return a[l];

int md((l+r)/2);

return sum(a,l,md)+sum(a,md+1,r);

}

ply fex(ply f,int m){

vector<R>a(f.size());

Frn0(i,0,f.size())a[i].p={f[i]},a[i].q={1,-i};

R s(sum(a,0,a.size()-1));

auto re(convolution(s.p,pinv(s.q,m)));

re.resize(m);

Frn0(i,0,m)re[i]/=fc[i];

return re;

}

Extensions

An alternative way to find the PGF of $$$X$$$

We may track the number of rolls to get a new side showing up when $$$i$$$ sides have already shown up.

When $$$i$$$ sides have already shown up, the probability of getting a new side in a roll is $$$\frac{n-i}{n}.$$$ Let $$$X_i$$$ be the random variable of the number of rolls, then $$$X_i\sim \text{Geo}(\frac{n-i}{n}).$$$

As the PGF of $$$\text{Geo}(p)$$$ is $$$\frac{px}{1-(1-p)x},$$$ the PGF of $$$X_i$$$ is $$$G_{X_i}(x)=\frac{\frac{n-i}{n}x}{1-\frac{i}{n}x}=\frac{(n-i)x}{n-ix}.$$$ By Convolution Theorem of PGF, the PGF of the total number of rolls $$$X=\sum_{i=0}^{n-1} X_i$$$ is

It seems to be a lot easier to do... So the product of these small polynomials can still be done by a similar Divide-and-Conquer method.

//This program is written by Brian Peng.

#include<bits/stdc++.h>

#include<atcoder/convolution>

using namespace std;

using namespace atcoder;

#define Rd(a) (a=rd())

#define Gc(a) (a=getchar())

#define Pc(a) putchar(a)

int rd(){

int x;char c(getchar());bool k;

while(!isdigit(c)&&c^'-')if(Gc(c)==EOF)exit(0);

c^'-'?(k=1,x=c&15):k=x=0;

while(isdigit(Gc(c)))x=x*10+(c&15);

return k?x:-x;

}

void wr(int a){

if(a<0)Pc('-'),a=-a;

if(a<=9)Pc(a|'0');

else wr(a/10),Pc((a%10)|'0');

}

signed const INF(0x3f3f3f3f),NINF(0xc3c3c3c3);

long long const LINF(0x3f3f3f3f3f3f3f3fLL),LNINF(0xc3c3c3c3c3c3c3c3LL);

#define Ps Pc(' ')

#define Pe Pc('\n')

#define Frn0(i,a,b) for(int i(a);i<(b);++i)

#define Frn1(i,a,b) for(int i(a);i<=(b);++i)

#define Frn_(i,a,b) for(int i(a);i>=(b);--i)

#define Mst(a,b) memset(a,b,sizeof(a))

#define All(a) (a).begin(),(a).end()

#define File(a) freopen(a".in","r",stdin),freopen(a".out","w",stdout)

using mint=modint998244353;

using ply=vector<mint>;

#define N (200010)

int n,m;

mint fc[N]{1};

ply ans;

ply pinv(ply f,int m);

ply add(ply a,ply b);

struct R{ply p,q;

R operator+(R b){

ply rp(add(convolution(q,b.p),convolution(p,b.q))),

rq(convolution(q,b.q));

return{rp,rq};

}

}g;

vector<ply>a;

R sum(vector<R>&a,int l,int r);

ply prod(vector<ply>&a,int l,int r); //DC Multiplication

ply fex(ply f,int m);

signed main(){

Rd(n),Rd(m);

Frn1(i,1,max(n,m))fc[i]=fc[i-1]*i;

g.p.resize(n+1),g.p[n]=fc[n],a.resize(n);

Frn0(i,0,n)a[i]={n,-i};

g.q=prod(a,0,n-1);

ans=convolution(fex(g.p,m+1),pinv(fex(g.q,m+1),m+1));

Frn1(i,1,m)wr((ans[i]*fc[i]).val()),Pe;

exit(0);

}

ply pinv(ply f,int m){

ply g({f[0].inv()});

f.resize(m);

for(int s(2);s<2*m;s<<=1){

auto tmp(convolution(convolution(g,g),

ply(f.begin(),f.begin()+min(s,m))));

g.resize(min(s,m));

Frn0(i,0,min(s,m))g[i]=2*g[i]-tmp[i];

}

return g;

}

ply add(ply a,ply b){

if(a.size()<b.size())swap(a,b);

Frn0(i,0,b.size())a[i]+=b[i];

return a;

}

R sum(vector<R>&a,int l,int r){

if(l==r)return a[l];

int md((l+r)/2);

return sum(a,l,md)+sum(a,md+1,r);

}

ply prod(vector<ply>&a,int l,int r){

if(l==r)return a[l];

int md((l+r)/2);

return convolution(prod(a,l,md),prod(a,md+1,r));

}

ply fex(ply f,int m){

vector<R>a(f.size());

Frn0(i,0,f.size())a[i].p={f[i]},a[i].q={1,-i};

R s(sum(a,0,a.size()-1));

auto re(convolution(s.p,pinv(s.q,m)));

re.resize(m);

Frn0(i,0,m)re[i]/=fc[i];

return re;

}

It is really easier to implement, and took 300ms less time than the previous one...

THANKS FOR READING!