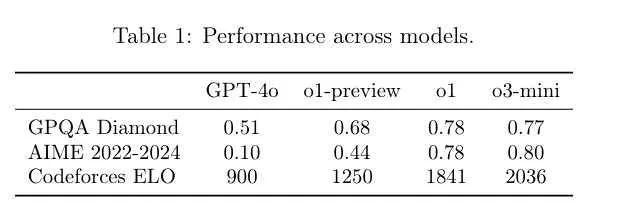

Apparently, its Codeforces performance beats o1-mini, o1, and DeepSeek R1 in Codeforces rating:

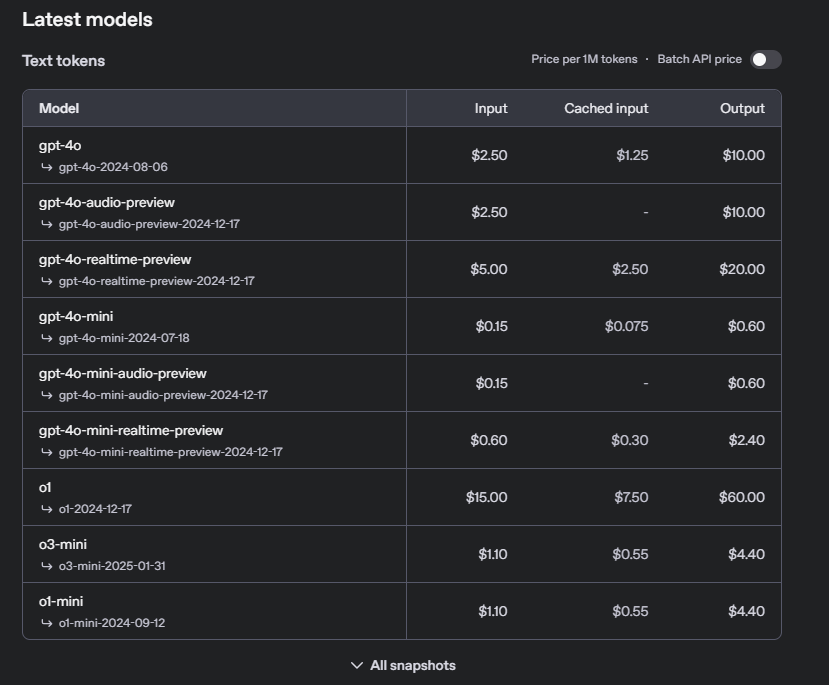

Its price is on par with o1-mini. I guess they felt the heat from DeepSeek R1.


o1 is claimed to be rated 1800, yet it fails Div. 2 B problems. I'm suspicious of this.

Which round is it from, may I ask?

I remember someone posting a blog about how o1-pro (the $200-a-month version) couldn't solve 2040B - Paint a Strip, but o3-mini can solve it :(
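For context on how small 2040B - Paint a Strip is, here is a minimal sketch of one way to compute its answer (core logic only, input parsing omitted; the function name `min_first_type_ops` is hypothetical and this is not taken from the thread). It assumes the standard doubling argument: if cells 1..L are already painted, one extra single-cell paint at position r lets a range fill on [1, r] succeed when L + 1 >= ceil(r / 2), i.e. r <= 2(L + 1), so each additional type-1 operation grows the fully painted prefix from L to 2(L + 1).

```python
def min_first_type_ops(n: int) -> int:
    """Sketch for 2040B: minimum number of single-cell paints (type-1 ops).

    Assumption (doubling argument): with `covered` cells painted, one more
    single-cell paint plus a range fill extends coverage to 2 * (covered + 1).
    """
    ops, covered = 1, 1  # the first paint always covers exactly one cell
    while covered < n:
        covered = 2 * (covered + 1)  # coverage grows 1, 4, 10, 22, ...
        ops += 1
    return ops


print([min_first_type_ops(n) for n in (1, 2, 4, 20)])  # [1, 2, 2, 4]
```

The loop runs O(log n) times, which is why the problem sits at the Div. 2 B level.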

Maybe that's just because o1's training data doesn't include it while o3's does? (The round happened right between their releases.)

I honestly doubt the training data cutoff is that recent, though I could be wrong, and I do hope I am.

Most of the rounds.

I just tested it on every problem that DeepSeek R1 failed in my earlier tests (from https://codeforces.net/blog/entry/138735), and it solved all of them, though it took two attempts on Maximum AND Queries (Easy version). I also tested it on Paint a Strip, which o1-pro ($200) wasn't able to solve.

I'm also on the free plan, which means my o3-mini runs on low compute (if it means anything, it also has much shorter wait times).

nvm

the edit...

It's nice to know that the lower-rated problems aren't entirely screwed, though it's a bit nerve-wracking to see it solve problems on the free plan that it couldn't solve on the $200 plan.

Source

Note: o3-mini has already (allegedly) achieved a rating of 2130 (above the Codeforces Master cutoff) with the reasoning effort set to high.

If you have access to this model, please try this problem: 2060D - Subtract Min Sort. It is a simple 1100-rated problem that DeepSeek-R1 gets wrong even though I gave it more than enough hints.

(Update: I tried it myself. It failed on the first attempt, but after I gave it a very obvious hint, it was able to solve it: https://chatgpt.com/share/679e03a1-e82c-8004-b362-a745b412bf52)
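To show why 2060D - Subtract Min Sort counts as simple, here is a minimal sketch of a commonly used greedy (core logic only, input parsing omitted; the function name `can_sort` is hypothetical, and this is neither the thread's code nor the official editorial). It assumes the approach of applying the operation "subtract min(a[i], a[i+1]) from both" at every adjacent pair from left to right, then checking whether the result is non-decreasing; the proof that this order is optimal is not reproduced here.

```python
def can_sort(a: list[int]) -> bool:
    """Sketch for 2060D: can the array be made non-decreasing?

    Assumed greedy: apply the operation at every adjacent pair, left to right,
    then check the resulting array.
    """
    b = list(a)  # work on a copy so the caller's list is untouched
    for i in range(len(b) - 1):
        m = min(b[i], b[i + 1])
        b[i] -= m      # at least one of the pair becomes 0
        b[i + 1] -= m
    return all(b[i] <= b[i + 1] for i in range(len(b) - 1))


print(can_sort([4, 5, 2, 3]), can_sort([4, 3, 2, 1]))  # True False
```

The whole check is a single linear pass, so it runs in O(n) per test case.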

First try: 303911410

Yeah, both o3-mini and DeepSeek got it on the first try.

Solution given by o3-mini

Solution given by DeepSeek

cf is cooked

Well, it's not GPT-o7 yet xdd

2036 is the medium version; the high version is 2130. Only 2400 people are rated higher right now.

I tried o3-mini-high on the last completed round, on problems A, B, C, D, and E1.

Results:

- A -> solved in 1m 12s
- B -> solved in 2m 17s
- C -> WA on test 2 (surprising, I thought it would be able to crack this)
- D -> WA on test 1
- E1 -> WA on test 2

Sometimes o3-mini-low is better than o3-mini-high: it solved C in one shot (303999547).

It's not necessarily better. You probably got lucky with the sampling.

o3-mini-low is really insane: it has solved almost every problem I've asked it, up to 1900 rated. It's going to ruin all the fun. I think the Codeforces rating system just got cooked, since free users can access it. Contests should be unrated until they find a solution.

Were the problems old?

I have access to R1 Pro and it runs out of tokens before answering any problem. LOL

Bruh, o3 will beat me for sure...

I don't think so; these models are most probably solving problems whose solutions are already available on the internet.