Hi! Sorry for the rather click-baity title, but if you're reading this, you likely care about making Codeforces better. And so do I! So, I've made a survey...

Motivation

Some of the recent rounds, especially Div. 1 ones, have been rather poorly received. Technocup and ByteDance rounds have been downvoted like hell, and other rounds haven't gathered particularly impressive contribution either. GR18 also received a lot of criticism and, as far as I remember, had more upvotes before it started, thanks to PurpleCrayon's incredibly wholesome announcement. Something seems to be wrong: people rarely compliment the authors on the parts they enjoyed, which paints an overall picture of a rather unhappy audience.

So, what's wrong exactly?

Well, I know what I don't like, but of course it may differ for you. For me, the spirit animal of modern competitive programming is a snake that grew so large it started biting its own tail. It's been around for a while, and more and more things are considered standard. Problemsetters, afraid of being downvoted for setting standard problems or accused of copying, are inventing increasingly bizarre ad hoc problems, especially as easy tasks. And suddenly we end up with atrocities like 1615D - X(or)-mas Tree. Parity of popcount of xor on paths on a tree, really? Most of the "problem-solving" in such tasks is removing whatever garbage is in the statement, or guessing the right way to approach a deeply complicated process. I can't count how many times I have recently solved a problem by guessing "if this problem's position is X, the solution must be...". Or by brute-forcing to find a pattern (but that goes more for AtCoder tasks, I guess). Anyway, I've been quite annoyed and have been wondering whether others feel the same.

The survey

https://forms.gle/x6wVQvQVSQk6zUiv9

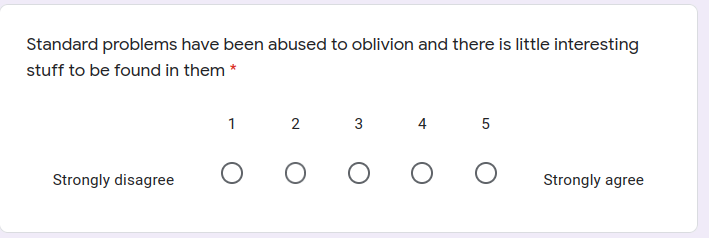

If you would like to help make Codeforces better, please consider filling in the survey. It's short and won't take you more than 10 minutes. It presents a series of statements expressing different viewpoints about competitive programming (like the one in the blog title) and asks you to rate each one on a scale from 1 to 5. Thanks to Monogon, tnowak, Linkus and Amtek for proofreading and question suggestions.

I hope enough participants express their views for the results to be statistically significant. After Hello 2022 I will close the survey, analyze the answers and probably compile a summary blog. If you've always been afraid of voicing your opinions and getting downvoted (#ratism), now is the time to do it! I'll be very thankful; your voice really matters. Ultimately, it's the community that keeps Codeforces running.

Please, please, please, share your thoughts!

UPD

The survey hit 128 responses in just a couple of hours, can we get to a bigger power of two?

UPD 2

We now have more than 256 responses. I'm proud of us all :) Some people wrote essays longer than this blog or the survey, legends. We also have 5 tourists, so I think I'll do some postprocessing before sharing...

UPD 3

The survey closes tomorrow after Hello 2022, so if you meant to contribute and forgot, now is the time. And if you have done it already, thank you very much!

UPD 4

The survey is now closed with 329 responses. Lots of love to everyone who contributed. I'll try to write a summary blog tomorrow. After some cleanup, the data will also be available to download so that everyone can analyze it on their own :)

I refuse to be silenced by the adhoc mafia.

I'm looking forward to the results of the poll.

Just a heads up: results are publicly viewable via 'see previous responses'. So while I personally am fine with both picking the "don't quote me" option and typing out an unhinged rant of sorts, others might not be, and they should know this in case they want to tone down their responses.

Hmm, you're right. I should probably disable viewing the answers and anonymize the results before sharing. I'll do that.

It's quite hard to match a person to their answers, even from the admin view, so I hope no one has been affected much so far.

kpw29, does that mean developing intuition for this problem was quite hard, or something else?

I just meant it's really bizarre and unnatural. The problem itself is nice, but only after discarding a lot of unnecessary complications.

Seriously people?

Why? Of course people want their training to be worth it.

I don't understand why problems should be made such that you benefit from your training instead of you training in what is necessary to improve.

Precisely

I don't think this is true at all. Take problem B of the last GR, for example. It's an easy, interesting problem that uses a standard technique (prefix sums) in an interesting way: it was not only fun to solve but also separated those who can apply standard techniques from those who only know they exist. There are bad, dumb standard problems and there are good ones.

Yes, they have, but I feel like the real issues with these rounds are not clearly stated in the blog or the survey.

I didn't take part in ByteDance, but the pretests on C look terrible: not only are there more FSTs than ever in my friend list, but seriously, take a look at tests 1-20 (which I believe are the pretests).

Technocup had 3 copied problems, and the hardest problem was actually a relatively recent one from AtCoder! As a result, not only did more people get AC on F (which a significant number of people remembered) than on E, but more people also skipped E to try F, thinking it was easier (as evidenced by the WA count). This should have been caught in testing (possibly it was, but they didn't want to delay the round, or it's even possible they straight up copied it). E also had weird constraints and a weird time limit that ended up serving no purpose except frustrating random people.

I believe the Global Round had nothing majorly wrong aside from maybe a noticeable jump in difficulty from C to D, which meant that performances from high cyan (around 1550) to low master (around 2150) were decided only by speed. I think the downvotes came from people who got screwed by this (it's a wide range of people, and I certainly downvoted when it happened to me).

I think the actual problems CF contests have are balance issues and preparation issues. In the other Technocup, for example, problem C wasn't in testing (at least not by the time Monogon saw the problems, according to him), and because of that it ended up ridiculously easy (a 900-rated problem, with the next one at 2000) and with bad pretests. Problems div2D, div2F and div1E were great; however, in contest I just solved 3 super easy problems and then nothing. Zero fun.

I'm all for delaying contests when something goes wrong. I don't want to think about random bullshit like pretests, balance, cheating or whatever; I just want to have fun solving problems.

(Also, I recommend using magic to turn green: the contribution becomes the same color as the rating. It's funny.)

EDIT: I'm a clown and answered the survey twice.

Nice to see someone cares enough to write a long comment, thanks!

I tried to address issues with difficulty, topic balance, repeated problems and poor pretests; I hope that's enough. I would primarily like to know how future rounds can be made better.

Your Honor, this is a suggestive question.

"Problemsetters who put math problems that have no connection to algorithms whatsoever should be ashamed of themselves; it's competitive programming, not IMO with a keyboard."

Hey, you can always disagree with the statement; I didn't give 'Yes' and 'Yes but in blue' options.

One thing that could improve Codeforces is having contests for the rating range 0-2800 or 0-2600, like ARC.

Somehow I haven't had a chance to participate in any CF round for almost half a year, so I can't speak for the recent ones, but I got the impression that the quality of CF problems is improving over time. A long time ago the hardest problems were almost always some shite suffix tree or complicated data structure, while nowadays I feel there are fewer "just a standard technique" problems at all difficulty levels.

I'm gonna have to strongly agree with this, and I have a very fresh impression. I think I did around 200 virtual CF rounds in the last couple of months, and the older contests, even ones from around 2014, have a very different feel to them. Problems C, D and E are quite often very straightforward and "standard" from the modern point of view. I'm not implying it was bad back then, since CP has definitely evolved since. Please regard this just as my opinion, but I also think that problems have become much more interesting and exciting on average.

I just want to say that I disagree with some of the assumptions of this blog, such as that "Standard problems have been abused to oblivion" or that 1615D - X(or)-mas Tree is an atrocity. It is not a particularly awesome problem, but it is definitely OK and fun to solve; I would describe it as a known technique with the small twist of the "parity of popcount". Knowing the technique behind it is probably the hardest part of the problem, but that is fine, and trying to remember or reconstruct it during a contest is a good exercise. The impression that we are on the verge of knowing it all, or have already invented everything, has been common to many generations, but it is honestly a bit naive.
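A minimal sketch of the "known technique" referred to above, assuming the task is about the parity of the popcount of the xor of edge weights on a tree path. Since popcount(x xor y) = popcount(x) + popcount(y) - 2 * popcount(x and y), the parity of popcount respects xor, so every weight can be collapsed to one bit. With d[v] defined as the parity along the root-to-v path, the value for a path a-b is d[a] xor d[b], because the edges above the LCA appear in both root paths and cancel. The function names are hypothetical, and the tree is assumed connected with vertices 0..n-1.

```python
def popcount_parity(x: int) -> int:
    """Return popcount(x) mod 2 for a non-negative integer x."""
    return bin(x).count("1") & 1


def root_parities(n, edges, root=0):
    """Given tree edges (u, v, w), return d where d[v] is the parity of the
    popcount of the xor of weights on the root-to-v path (d[root] == 0)."""
    adj = [[] for _ in range(n)]
    for u, v, w in edges:
        p = popcount_parity(w)  # each weight collapses to a single bit
        adj[u].append((v, p))
        adj[v].append((u, p))
    d = [0] * n
    seen = [False] * n
    seen[root] = True
    stack = [root]  # iterative DFS avoids Python's recursion limit
    while stack:
        u = stack.pop()
        for v, p in adj[u]:
            if not seen[v]:
                seen[v] = True
                d[v] = d[u] ^ p
                stack.append(v)
    return d


def path_parity(d, a, b):
    """Parity for the a-b path: the shared part above the LCA cancels."""
    return d[a] ^ d[b]


if __name__ == "__main__":
    edges = [(0, 1, 3), (1, 2, 5), (0, 3, 1)]
    d = root_parities(4, edges)
    # popcount(3 ^ 5 ^ 1) = popcount(7) = 3, which is odd
    print(path_parity(d, 2, 3))  # 1
```

Keeping only one bit per edge is what turns the problem into a 0/1 labeling of vertices, which is the part the next comment in the thread objects to.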

As for the argument about downvotes: people downvoting a contest announcement after the contest is over is probably more related to them being tilted by a bad performance. What do you think the average rating delta of people who downvote announcements after a contest is? Certainly below 0.

To summarise my opinion: Problems are getting better, but the proposed survey should still be useful.

How did you conclude that from the survey? I think it's rather that we have exhausted the tweaks of standard ideas. It doesn't help that some problems were inspired by older CP problems.

Recently I did a 5h contest while thinking "time to read another problem... shit, this problem also has 4 paragraphs of useless info, lemme try to guess where the problem actually starts" for most problems, and I was completely right: I managed to skip the useless garbage.

So yes, if possible, let a problem's difficulty be just the problem's difficulty, not some external shit like trying to get into the author's mind because of a shitty statement, or a test of your patience in reading through things that aren't related to the problem (and you can most likely tell they're unrelated while reading the actual problem).

I also want to see more data structure and dynamic programming problems in contests. But I feel it's crazy difficult to make such tasks nowadays without them screaming THIS IS A DATA STRUCTURE PROBLEM. When was the last time you saw an interesting data structure problem where it was not trivial or well known how to apply data structures (perhaps after some ad hoc observations)?

I enjoy data structure problems, but not if they are stupid things like "please combine different data structures and code it in a 2.5-hour contest with 7 problems", like 1608G - Alphabetic Tree. Because of how most people set problems (starting from the statement, not from the solution), interesting data structure problems are rare and hard for authors to create. If there is nothing interesting, then it is fine that data structures are rarely used.

So should the "I would like to see more data structures problems in contests" mean "I would like to see more suffix array with HLD"?

Also, I think using Technocup and ByteDance (and probably many sponsored contests) as examples is low-hanging fruit because of how differently testing is done for these contests. I'm sure my experience with Codeforces contests would be a lot more positive if I only considered the non-sponsored rounds.

The survey is definitely helpful but I feel that using the number of downvotes on contest announcements as a way to evaluate the quality of their problems might be quite off the mark. Downvotes can also come from bad individual performances, weak pretests (lack of problem preparation) or huge difficulty gaps.

It's unrealistic to expect every single problem in a contest to be interesting and/or original. To make things worse, boring or bad problems sometimes have to be included to fill in a difficulty gap.

While there is certainly room for improvement, I think we all can be a bit more lenient on one or two bad problems in a contest as long as the entire problemset is of acceptable quality.

This. I feel like individuals (often newbies/pupils) tend to think of a round as "unbalanced" or "not beginner friendly" when their performance is bad. I think everybody should be aware of how hard it actually is to prepare a contest and not complain about the "free food" they get. Well, I understand that expectations of problemsetters grow every day, but if there isn't a clear mistake in the contest (such as very weak pretests, suddenly adding an extra 15 minutes or really, really huge difficulty gaps), people should be understanding.

In interviews, the coding round is mostly based on data structures... idk why there are so many math problems!

Coding competitions are not the same as coding interviews, and I don't think that we should strive to make them similar either. We shouldn't modify CP to be similar to something with a completely different purpose. (Especially not for div1 problems... at that level nobody needs to practice for interviews). There are plenty of interview-focused practicing sites, CP is meant to be interesting.

I personally believe that a problem where the story makes sense and is relevant to the problem is nice. However, when the story is something like "Alice and Bob were playing in the street when Bob found a strange box. He likes Alice and wants to give it to her as a gift. The box has a lock on it, and to unlock it you need to solve the following problem: formal definition of the problem. Can you help Alice unlock the box?", it just wastes time one could spend solving task A.

Your blog title is bad. I almost missed this because its title screams "rant I won't care about."

I think several of the survey prompts would be better broken down into smaller pieces. For example: "I am unhappy when I encounter a problem requiring standard tricks, as it is not interesting" (1-5) -> "I am unhappy when I encounter a problem requiring standard tricks" (1-5), "Problems requiring standard tricks are usually uninteresting" (1-5), and maybe don't bother asking about the third component "I am unhappy when I encounter an uninteresting problem requiring standard tricks."

Whether or not I up-vote a round announcement after participating is mostly a function of whether or not I remember to, and is mostly unrelated to the relative quality of the round. Only a couple of the rounds I've participated in have even left doubt about whether the authors deserve my up-vote.

Can you be more explicit about what you don't like about 1615D? From my perspective there are a few potential annoyances in the problem itself:

I think (1) is totally benign. I think (2) is rather harmful. It's not a substantial enough complication to stand on its own in a problem of this difficulty, and it doesn't interact with the 'main ideas' of the problem in any interesting way. Even worse, by reducing the relevant vertex labeling to a 0/1 labeling, it makes that 'main idea' far more likely to be a near-exact match to a contestant's pre-written code (is_bipartite, anyone?). Although (3) does annoy me, it's pretty minor, and some of the reasons it annoys me are niche enough that I'd be surprised if a round author were both aware of them and cared. But although I see room for improvement, and even personally decided not to bother implementing my solution after reading this problem during the contest, "atrocit[y]" seems like an unreasonably harsh assessment.
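To illustrate why a pre-written bipartiteness check is such a close match: assuming (this is not spelled out in the thread) that every constraint ends up in the form d[a] xor d[b] = p with p in {0, 1}, checking consistency is a 2-coloring with "same color" and "different color" edges. A minimal sketch uses a union-find that stores each vertex's parity relative to its root; with p = 1 on every edge it is exactly a bipartiteness test. The class and function names are hypothetical.

```python
class ParityDSU:
    """Union-find where rel[x] is the parity of x relative to parent[x]."""

    def __init__(self, n):
        self.parent = list(range(n))
        self.rel = [0] * n

    def find(self, x):
        """Return (root, parity of x relative to root), compressing the path."""
        path = []
        while self.parent[x] != x:
            path.append(x)
            x = self.parent[x]
        root = x
        acc = 0
        # Walk from the node nearest the root outwards, accumulating parity.
        for node in reversed(path):
            acc ^= self.rel[node]
            self.rel[node] = acc
            self.parent[node] = root
        return root, (self.rel[path[0]] if path else 0)

    def union(self, a, b, p):
        """Add the constraint d[a] ^ d[b] == p; return False on contradiction."""
        ra, pa = self.find(a)
        rb, pb = self.find(b)
        if ra == rb:
            return (pa ^ pb) == p
        # d[a] = d[ra] ^ pa and d[b] = d[rb] ^ pb, so d[ra] ^ d[rb] = p ^ pa ^ pb
        self.parent[ra] = rb
        self.rel[ra] = p ^ pa ^ pb
        return True


def consistent(n, constraints):
    """Check whether constraints (a, b, p) on vertices 0..n-1 are satisfiable."""
    dsu = ParityDSU(n)
    return all(dsu.union(a, b, p) for a, b, p in constraints)


if __name__ == "__main__":
    print(consistent(3, [(0, 1, 1), (1, 2, 1), (0, 2, 0)]))  # True
    # an odd cycle of "different" edges, i.e. a non-bipartite triangle
    print(consistent(3, [(0, 1, 1), (1, 2, 1), (0, 2, 1)]))  # False
```

A BFS 2-coloring over the same edges would work equally well; the union-find version is shown only because it handles both edge types without building the graph first.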