Hello! I received a great response from the Codeforces community, so now I am starting a series of educational posts.

Today I want to talk about a very useful classification of dynamic programming problems that I came up with over years of practice. I don't claim authorship of this idea, but I've never seen it expressed explicitly, so I will introduce my own terms. Let's call the two classes "Subtask DP" (or "Regular DP") and "Process-Simulation DP". Today I will focus on the latter.

The Regular approach

How were you introduced to DP? In which terms? When I was at school, I was taught that DP is just splitting a task into subtasks. First, you introduce parameters to your problem. A set of values for each parameter is called a state of the DP, which corresponds to a single subtask. Then you just need to find the answer for every state / subtask, and knowing the answers for the smaller subtasks should help you with your current one. Let's look at an example:

AtCoder DP Contest: Problem L. Two guys are playing on an array of numbers. They take turns taking either the leftmost or the rightmost remaining element of the array until it's empty. Each one is trying to maximize the sum of the elements he has taken. What will be the difference between the first and the second player's scores if both play optimally?

The solution to that problem is to introduce two parameters: l and r, with dp[l][r] being the answer to that exact problem if we only had the numbers from the interval [l, r). How do you count it? You just reduce a task to its subtask. There are two possible first moves for the first player: take the leftmost number or the rightmost number. In the first case we are left with the interval [l + 1, r), in the second case – with the interval [l, r - 1). But if we count the dp value for all intervals in the order of increasing length, then by now we must already know the outcome in both cases: it's a[l] - dp[l + 1][r] and a[r - 1] - dp[l][r - 1]. As the first player tries to maximize the difference, he'd choose the maximum of these two numbers, so the formula is dp[l][r] = max(a[l] - dp[l + 1][r], a[r - 1] - dp[l][r - 1]).
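Here is a minimal sketch of this recurrence in C++. The function name dequeGameDifference and the 0-based indexing are my own choices for illustration; the table is filled in order of increasing interval length, exactly as described above.

```cpp
#include <algorithm>
#include <vector>

// dp[l][r] = (score of the player to move) - (score of the opponent)
// when only the half-open interval [l, r) of the array remains.
long long dequeGameDifference(const std::vector<long long>& a) {
    const int n = static_cast<int>(a.size());
    // dp[l][l] = 0: on an empty interval nobody scores anything.
    std::vector<std::vector<long long>> dp(n + 1, std::vector<long long>(n + 1, 0));
    for (int len = 1; len <= n; ++len) {          // increasing interval length
        for (int l = 0; l + len <= n; ++l) {
            const int r = l + len;
            // Take the leftmost element a[l] or the rightmost element a[r - 1].
            dp[l][r] = std::max(a[l] - dp[l + 1][r], a[r - 1] - dp[l][r - 1]);
        }
    }
    return dp[0][n];
}
```

For the array {10, 80, 90, 30} the smaller intervals give dp[1][4] = 20 and dp[0][3] = 20, so the result is max(10 - 20, 30 - 20) = 10.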

Here the subtask explanation works perfectly. And I won't lie, any DP problem can be solved using these terms. But you can become much more efficient if you learn another approach, which I will call process simulation. So what does it mean?

The Process Simulation approach

This approach is mostly used in problems that ask you to either "count the number of some objects" or to "find the best object of such type". The idea is to come up with some step-by-step process that builds such an object. Every path of this process (i.e. the sequence of steps) shall correspond to a single object. Let's have a look at an example.

118D - Caesar's Legions. After the formalization the problem will look like this:

Count the number of sequences of 0's and 1's where there are exactly n0 0's and exactly n1 1's and there can't be more than k0 0's in a row or more than k1 1's in a row.

Problems involving sequences are the obvious candidates for process simulation. In such problems the process is usually just "write out the sequence from left to right", one new element at each step. Here we have two possible steps: write down 0 or 1 to the right of what's already written. However, we need to allow only valid sequences, so we have to prohibit some of the steps. This is where we introduce the parameters of the process. These parameters must:

Determine which steps are valid at this point.

Be re-countable after a step is made.

Separate valid finishing states from the invalid ones.

In this case we have such rules:

No more than n0 0's in total.

No more than n1 1's in total.

No more than k0 0's in a row.

No more than k1 1's in a row.

So we have to know such parameters of the process:

How many 0's are written out already.

How many 1's are written out already.

What is the last written character.

How many such characters in a row are written out at the end right now.

If we know the values of those parameters, we have all the information we need to determine whether we can write out another 1 or another 0, and to recount the parameters after making one of the steps. When using this approach, what we store in the dp array is just some information about the paths of the process that lead into a given state (where by state I also mean a set of values for each parameter). If we want to count the number of ways to carry out the process, this information is just the number of such paths. If we want to find some 'best' way to carry out the process, we store some information about the best path among those that lead to this particular state, for instance its price, or its last step if we want to recover the best answer later.

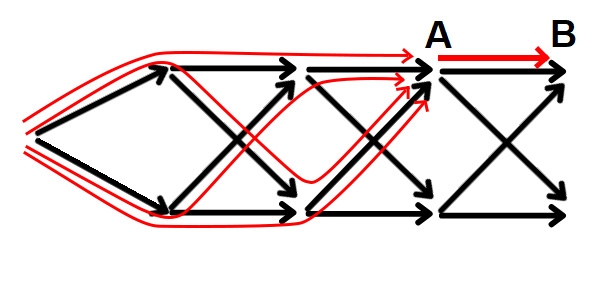

And here is the main trick: every transition in a DP graph is a step of the process at some state. When implementing the transition from state A to state B you just need to handle all the new paths you've discovered by continuing the paths leading to state A with a step that leads them to B. These are not all the paths that end in B, but the idea is that you have to discover them all by the time you start implementing the transitions from B. If what we store in dp is the number of such paths, then implementing the transition is just adding dp[A] to dp[B], that is, adding all the newly discovered paths that get to B via A to the overall number of paths that end in B.

Implementation

If you've heard about forward-style DP and backward-style DP, you may have started to suspect that this is the same classification that I am describing here. However, I consider forward-style and backward-style to be properties of the implementation. The backward-style implementation is: "Fix a state that is not counted yet, implement the transitions leading into it"; whereas the forward-style one is: "Fix a state that is already counted, implement the transitions starting from it".

In fact, after you've decided on the DP graph, it doesn't matter how you implement the transitions. You can even have your own way of doing it, as long as you follow a simple rule: don't implement a transition from state A before a transition into state A. But still, the forward-style approach is much more suitable and meaningful for process simulation, just as the backward-style approach is for regular DP, so in 90% of the cases these classifications will coincide.
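To make the two implementation styles concrete, here is a small sketch on a toy process that does not come from the problems in this post: start at position 0 and at each step move forward by 1 or by 2, counting the paths that end at position n. Both function names are hypothetical. The DP graph is the same in both functions; only the order in which the transitions are written differs.

```cpp
#include <vector>

// Backward style: fix a state that is not counted yet and
// implement the transitions leading into it.
long long countPathsBackward(int n) {
    std::vector<long long> dp(n + 1, 0);
    dp[0] = 1;  // one way to stand at the start: do nothing
    for (int i = 1; i <= n; ++i) {
        dp[i] += dp[i - 1];              // arrived by a step of 1
        if (i >= 2) dp[i] += dp[i - 2];  // arrived by a step of 2
    }
    return dp[n];
}

// Forward style: fix a state that is already counted and
// implement the transitions starting from it.
long long countPathsForward(int n) {
    std::vector<long long> dp(n + 1, 0);
    dp[0] = 1;
    for (int i = 0; i < n; ++i) {
        dp[i + 1] += dp[i];              // continue every path with a step of 1
        if (i + 2 <= n) dp[i + 2] += dp[i];  // or with a step of 2
    }
    return dp[n];
}
```

In both versions no transition out of a state is implemented before all transitions into it, which is the only rule that matters.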

Let's get back to the problem and implement the solution. For that we need to determine what is the starting state of our process. Let's denote by dp[i][j][t][k] the state, where there are i 0s already, j 1s already, the last symbol is t and it was written k times in a row at the end. Obviously we start with i = 0 and j = 0, but what are the t and k? Technically speaking, if we start from an empty sequence, there is no such thing as "the last symbol". So let's just modify the description of our process and say that when we start, the last symbol is considered to be 0, but it's written out 0 times in a row. So the starting state is dp[0][0][0][0], and we can only start in one way, so dp[0][0][0][0] shall be equal to 1.

The rest is very easy and intuitive:
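A minimal forward-style sketch of this process could look like the following. The function name, the flat indexing helper idx, and the choice to pass the modulus as a parameter (defaulting to 10^8, which is what the statement of 118D asks for as far as I remember) are assumptions made for illustration.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// dp[i][j][t][k]: number of ways to write i zeros and j ones so that
// the last symbol is t and it is repeated k times at the end.
long long countLegionSequences(int n0, int n1, int k0, int k1,
                               long long mod = 100000000) {
    const int maxRun = std::max(k0, k1);
    const int limit[2] = {k0, k1};  // longest allowed run of 0's / of 1's
    auto idx = [&](int i, int j, int t, int k) {
        return ((i * (n1 + 1) + j) * 2 + t) * (maxRun + 1) + k;
    };
    std::vector<long long> dp(
        static_cast<std::size_t>(n0 + 1) * (n1 + 1) * 2 * (maxRun + 1), 0);
    dp[idx(0, 0, 0, 0)] = 1;  // empty sequence: "last symbol 0, written 0 times"

    for (int i = 0; i <= n0; ++i)
        for (int j = 0; j <= n1; ++j)
            for (int t = 0; t < 2; ++t)
                for (int k = 0; k <= maxRun; ++k) {
                    const long long ways = dp[idx(i, j, t, k)];
                    if (ways == 0) continue;
                    for (int c = 0; c < 2; ++c) {  // step: write symbol c
                        const int ni = i + (c == 0), nj = j + (c == 1);
                        const int nk = (c == t) ? k + 1 : 1;
                        if (ni > n0 || nj > n1 || nk > limit[c]) continue;
                        long long& target = dp[idx(ni, nj, c, nk)];
                        target = (target + ways) % mod;
                    }
                }

    long long total = 0;  // every state with all symbols written is a valid finish
    for (int t = 0; t < 2; ++t)
        for (int k = 0; k <= maxRun; ++k)
            total = (total + dp[idx(n0, n1, t, k)]) % mod;
    return total;
}
```

For n0 = 2, n1 = 3, k0 = 1, k1 = 2 the valid sequences are 01011, 01101, 10101, 10110 and 11010, so the function returns 5.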

Obviously, the time complexity will equal the number of transitions in the DP graph. In this case it's O(n0 * n1 * (k0 + k1)).

One more example

543A - Writing Code. There are n programmers that need to write m lines of code and make no more than b mistakes. The i-th programmer makes a[i] mistakes per line. How many ways are there to do it? (Two ways are different if the number of lines that some programmer writes differs.)

Let's define a process that builds such an object (a valid distribution of m lines of code among n programmers). In the problem's statement this object was represented as a sequence of numbers v[1], v[2], ..., v[n] (with v[i] being the number of lines written by the i-th programmer), as if hinting to us that this is just another sequence-building problem. Let's try to define the process's step as "writing out another element of the sequence", from left to right.

In order for the sequence to be valid, these rules must hold:

The length of the sequence is n.

The sum of the elements equals m.

The sum of a[i] * v[i] for the elements is no more than b.

Thus, we need to know such parameters:

The number of elements that have been written out already (the number of programmers fixed).

The sum of the elements that have been written out already (the number of lines of code written).

The sum of a[i] * v[i] for the elements that have been written out already (the number of mistakes made).

The number of states for such a process will be around n * m * b. But from every state there will be around m / 2 transitions on average, that is: "write out 0", "write out 1", "write out 2", ..., "write out m - s" (where s is the current sum). So the overall number of transitions will be O(n * m^2 * b), which will not pass the time limit. This teaches us that sometimes we should not use the building process described in the statement, but think outside the box.

Let's come up with some other process. Maybe instead of going through programmers, try going through lines? That is, for every line define which programmer will write this line. In order not to count the same distribution twice, let's say that the first programmer writes the first v[1] lines, the second one writes the next v[2] lines, etc. So if a line is written by programmer i, the next line can be written by programmer i, i + 1, ..., n. Then there must be such parameters:

The number of lines that were already written.

Which programmer wrote the last line.

How many mistakes were already made.

And again we have the same problem: there are around n * m * b states, and around n / 2 transitions out of every state on average; that is: "next line is written by the same programmer i", "next line is written by programmer i + 1", ..., "next line is written by programmer n". So the time complexity will be O(n^2 * m * b), which is again too slow.

If we think for some time, we can see that we clearly need all three of these parameters: something about the current programmer, something about the number of lines and something about the number of mistakes. So in order to pass the time limit we need to decrease the number of transitions out of each state and make it O(1). We clearly need at least two transitions: one to increase the lines-parameter and one to increase the programmers-parameter. If we think about it, we can come up with such a process: "In the beginning programmer 1 is sitting at the desk. At each step either the current programmer writes another line of code, or the current programmer leaves the desk and the next one sits down in his place". The parameters are:

Which programmer sits at the desk.

The number of lines written.

The number of mistakes made.

The starting state is: the first programmer sits at the desk, 0 lines are written, 0 mistakes are made. The number of transitions is finally O(n * m * b). In order to decrease the memory consumption, I use the alleged parameter trick (which means I only store the dp table for the current value of the first parameter, forgetting the previous layers). Implementation:
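A minimal sketch of this process, with only one layer of the table kept in memory. The function name and the signature are my own; the modulus is passed in as a parameter because, as far as I remember, 543A gives it in the input. The step "the next programmer sits down" does not change the number of lines or mistakes, so with a single layer it needs no code at all: the table for programmer i simply becomes the starting table for programmer i + 1.

```cpp
#include <vector>

// dp[lines][mistakes]: number of paths of the process that reach this
// state while the current programmer is sitting at the desk.
long long countWritingPlans(const std::vector<int>& a, int m, int b,
                            long long mod) {
    std::vector<std::vector<long long>> dp(m + 1,
                                           std::vector<long long>(b + 1, 0));
    dp[0][0] = 1 % mod;  // first programmer at the desk, nothing written yet
    for (int mistakesPerLine : a) {
        // Step "the current programmer writes another line".
        // Rows are processed in increasing order, so dp[lines] already
        // includes the lines this programmer wrote earlier.
        for (int lines = 0; lines < m; ++lines) {
            for (int mistakes = 0; mistakes + mistakesPerLine <= b; ++mistakes) {
                long long& target = dp[lines + 1][mistakes + mistakesPerLine];
                target = (target + dp[lines][mistakes]) % mod;
            }
        }
    }
    long long total = 0;  // valid finishes: exactly m lines, at most b mistakes
    for (int mistakes = 0; mistakes <= b; ++mistakes)
        total = (total + dp[m][mistakes]) % mod;
    return total;
}
```

For three programmers with a = {1, 1, 1}, m = 3 and b = 3, every distribution of the lines is valid, so the result is C(5, 2) = 10.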

Knapsack

AtCoder DP Contest: Problem D. There are n objects, the i-th one has a weight of w[i] and a value of v[i]. What is the maximal sum of values among all sets of objects where the sum of weights is no more than m?

The process's step is just: "Look at the next object and either ignore it or take it". The parameters are:

How many objects we've already looked at.

The sum of weights of the taken objects.

Again, the first parameter will be alleged, so we will only store one layer of the dp table corresponding to the fixed value of the first parameter. The value of dp is the maximal sum of values of the taken objects among all processes' paths that end up in that state. Implementation:
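A minimal sketch of this process with a single stored layer. The function name and the -1 marker for unreachable states are my own choices, and the sketch assumes that all values are non-negative. The weight sums are traversed in decreasing order so that the step "take the object" never continues a path that has already taken the same object.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// dp[s]: maximal sum of values among all paths of the process in which
// the taken objects have total weight exactly s (-1 if there is no such path).
long long knapsackBestValue(const std::vector<int>& w,
                            const std::vector<long long>& v, int m) {
    const long long UNREACHABLE = -1;  // assumes values are non-negative
    std::vector<long long> dp(m + 1, UNREACHABLE);
    dp[0] = 0;  // nothing looked at, nothing taken
    for (std::size_t i = 0; i < w.size(); ++i) {
        // Step "ignore the object": the state keeps its value, no code needed.
        // Step "take the object": move from weight s to weight s + w[i].
        for (int s = m - w[i]; s >= 0; --s) {
            if (dp[s] == UNREACHABLE) continue;
            dp[s + w[i]] = std::max(dp[s + w[i]], dp[s] + v[i]);
        }
    }
    // Any total weight up to m is a valid finishing state.
    return *std::max_element(dp.begin(), dp.end());
}
```

For weights {3, 4, 5}, values {30, 50, 60} and m = 8, the best set consists of the first and the third object, with a total value of 90.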

Conclusion

There are a lot of other examples where this approach significantly simplifies the search for a solution, but I can't list them all here. If you enjoyed this post, please leave a like :)

If you want to know more, a reminder that I give private lessons on competitive programming; the price is $30/h. Contact me on Telegram, Discord: rembocoder#3782, or in CF private messages.

Thank you sir, very educative!

Thankyou sir

"But if we count the dp value for all intervals in the order of increasing length, then by now we must already know the outcome in both cases: it's a[l] - dp[l + 1][r] and a[r - 1] - dp[l][r - 1]. As the first player tries to maximize the difference, he'd choose the maximum of these two numbers, so the formula is dp[l][r] = max(a[l] - dp[l + 1][r], a[r - 1] - dp[l][r - 1])."

Sir I have doubt why it is a[r-1]-dp[l][r-1] here and not a[r]-dp[l][r-1].

Because I use semi-intervals, r is an exclusive border, the last value included is a[r-1].