I was reading mango_lassi's blog and in one of the paper referred in his blog, I found a $$$O(N^2)$$$ algorithm for constructing a longest k-increasing subsequence (a subsequence of maximum length which is a union of k increasing subsequences). While implementing it, ko_osaga told me about the problem E in "AMPPZ-2015 MIPT-2015 ACM-ICPC Workshop Round 1" (id #6275 in opentrain) where the problem asks to construct such subsequence with constraint $$$N \le 2 \cdot 10^5$$$ and $$$K \le 20$$$.

The official solution runs in $$$O(N \cdot K \cdot (K + \log(N)))$$$. The first half seems to be doing the RSK mapping, but I couldn't figure out why the second half works. It seems to be unrolling the mapping from the back, and maintaining $$$K$$$ intervals for each row in the tableau. And the editorial only explains the first half. Can someone please explain why it works?

Hi IntoTheNight,

I am bad at coding but I learned some algebraic combinatorics. I tried to figure out the relationship between the code and the paper you mentioned. Not completely understood, but hope the following is helpful.

Firstly, this comment pointed out that computing the first k rows of the RSK tableau takes $$$O(NK\log(N))$$$. So I guess $$$O(N \cdot K \cdot K)$$$ is the time complexity of "unrolling the mapping" and constructing the output. But I didn't really analyze the time costs, so it could be wrong. (edited: the code explains the time complexity, which is

// maksymalna dlugosc jest liczona w czasie O(nklogn), // pdciagi sa odtwarzane w czasie O(nk(k+logn)).Unfortunately, I failed to understand them (with the help of online translators)...I assume you already knew everything in that paper. But it's good to cover some basic knowledge here.

===basic knowledge starts here===

let $$$w$$$ be an arbitrary permutation of length $$$n$$$. $$$RSK(w)=(P,Q)$$$ be the pair of Young tableaux (YTs). (It's (S,T) in the original paper, but (P,Q) is widely used nowadays.)

The RSK algorithm begin with $$$P_0$$$ and $$$Q_0$$$ being $$$\emptyset$$$. And let $$$P_r$$$ and $$$Q_r$$$ be the resulting YTs after inserting $$$w_r$$$. $$$P_{r+1} = P_r \leftarrow w_{r+1}$$$, where $$$\leftarrow$$$ means 'insertion'. The insertion path is the set of positions where an element is bumped or inserted at the end of a row.

Let $$$\text{reading}(P)$$$ be the concatenation "row(k) + row(k-1) + ... + row(1)" if $$$P$$$ has $$$k$$$ rows. This is exactly $$$\tilde{w}$$$, the canonical form of $$$w$$$ mentioned in the paper.

The Knuth transformation (mentioned in Thm 2.3) is $$$yxz\leftrightarrow yzx$$$ or $$$xzy\leftrightarrow zxy$$$ when $$$x,y,z$$$ are three adjacent elements in the permutation and $$$x<y<z$$$. If $$$w$$$ can be transformed into $$$w^\prime$$$ by a sequence of Knuth transformations, $$$w$$$ and $$$w^\prime$$$ are Knuth equivalent. For simplicity, we write $$$w\sim w^\prime$$$. (There should be a 'K' over the '~', but I am too lazy to put it.)

The remaining part of the paper says: 1) If $$$w\sim w^\prime$$$, $$$P=P^\prime$$$; 2) If $$$w\sim w^\prime$$$, the disjoint union of $$$k$$$ longest subsequences of $$$w$$$ can be obtained from $$$w^\prime$$$'s via rules on page 9 and 10; 3) $$$w\sim \text{reading}(P)$$$. These are used in the second part of the problem.

Assume $$$P$$$ is the resulting YT obtained by $$$RSK$$$ for $$$w$$$. A lemma says $$$w\sim\text{reading}(P)$$$. This can be proven by induction. Assume $$$w_1...w_r \sim \text{reading}(P)$$$. Then we only need to show that $$$w_1...w_r w_{r+1}\sim \text{reading}(P_r) w_{r+1}\sim \text{reading}(P_r\leftarrow w_{r+1})$$$. I'm not going to write full proof here. But the induction will help us understand how the "unrolling the mapping" works.

===basic knowledge ends here===

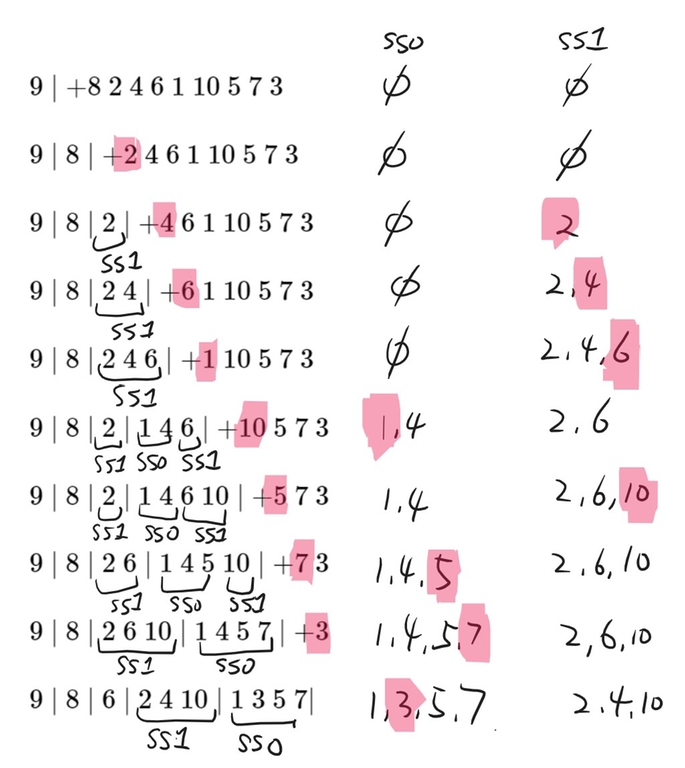

Let's use $$$w=9\ 8\ 2\ 4\ 6\ 1\ 10\ 5\ 7\ 3,\ n=10,\ k=2$$$ for example. For each insertion step, $$$\text{reading}(P_r) + w_{r+1} ... w_n$$$ looks like: (A Schensted algorithm demo can be found here. It requires old IE mode and (maybe) old Java as well.)

$$$\mid$$$ simply means the end of a row.

$$$9\mid +8\ 2\ 4\ 6\ 1\ 10\ 5\ 7\ 3$$$

$$$9\mid 8 \mid + 2\ 4\ 6\ 1\ 10\ 5\ 7\ 3$$$

$$$9\mid 8 \mid 2 \mid + 4\ 6\ 1\ 10\ 5\ 7\ 3$$$

$$$9\mid 8 \mid 2\ 4 \mid + 6\ 1\ 10\ 5\ 7\ 3$$$

$$$9\mid 8 \mid 2\ 4\ 6\mid +1\ 10\ 5\ 7\ 3$$$

$$$9\mid 8 \mid 2 \mid 1\ 4\ 6\mid + 10\ 5\ 7\ 3$$$

$$$9\mid 8 \mid 2 \mid 1\ 4\ 6\ 10\mid + 5\ 7\ 3$$$

$$$9\mid 8 \mid 2\ 6 \mid 1\ 4\ 5\ 10\mid + 7\ 3$$$

$$$9\mid 8 \mid 2\ 6\ 10 \mid 1\ 4\ 5\ 7\mid +3$$$

$$$9\mid 8 \mid 6 \mid 2\ 4\ 10 \mid 1\ 3\ 5\ 7\mid$$$

The second part of the code starts with $$$w_n$$$. At each step, it undos the last insertion operation by recovering the insertion path (all cut off at row $$$k+1$$$, recorded by

pos, val), and keep track of the changes of $$$k$$$ longest increasing subsequences we are interested in. Note that we only care about $$$k$$$ increasing subsequences, so some parts of the code deal with the "cut-off". Honestly speaking, I never carefully looked into them, so my understanding could be incorrect.To undo the insertion,

pos=lower_bound(s[j].begin(),s[j].end(),val)...is used to find the position of the element in row $$$j$$$ that bumps another element (val) into row $$$j+1$$$. It works since $$$P(j, pos)$$$ andvalshould both keep the row strictly increasing at the position $$$(j, pos)$$$. If at the beginning,valthe last affected element of the insertion step is placed at the end of a row,s[j].pop_back()removes it andval"bumps reversely" the element that bumps it during the insertion.For the $$$j$$$-th subsequence, called $$$\text{ss}j$$$ for short,

from[i][j]andto[i][j]describes where $$$\text{ss}j$$$ starts and ends in row $$$i$$$.In the above example, the two subsequences (

ss0, ss1) change in the following way:The answer ($$$\text{ss}0=1\ 5\ 7$$$, $$$\text{ss}1=2\ 4\ 6\ 10$$$ is obtained by following:

For each $$$i$$$, we may take the insertion as a sequence of Knuth transformations. How the subsequences change follows the rules mentioned on page 9 and 10. If inserting $$$w_i$$$ doesn't change the length of every subsequence (for example, inserting the last element $$$3$$$), analog to rule (1), (2) and (3) when $$$y\not\in \gamma$$$, or $$$w_i$$$ is considered "uninteresting" (below row $$$k$$$, such as $$$8, 9$$$), $$$w_i$$$ won't be part of the answer. Otherwise, the subsequence that $$$w_i$$$ goes to (see areas highlighted in red), labeled by

subsequencein the code, is exactly the final subsequences it belongs to.We may look at the insertion of $$$1$$$ ($$$w_6$$$) to understand why. Note that the "undo" transformation goes from bottom to up. So at the beginning we have $$$\text{ss}0=1\ 4, \text{ss}1=2\ 6$$$, and the canonical form $$$9\mid 8 \mid 2 \mid 1\ 4\ 6\mid $$$. The first Knuth transformation swaps $$$1$$$ and $$$4$$$, which is the "Type 1", $$$y\in \gamma$$$ case. So we make the modification $$$\text{ss}0\leftarrow (\text{ss}0-{1})\cup {2} = 2\ 4, \text{ss}1\leftarrow (\text{ss}1-{2})\cup {1}=1\ 6$$$. The next step is to swap $$$1$$$ and $$$6$$$, "Type 1"+$$$y\in \gamma$$$ as well. Set $$$\text{ss}1\leftarrow (\text{ss}1-{1})\cup {2\ 4}=2\ 4\ 6$$$, $$$\text{ss}0 \leftarrow (\text{ss}0 - {2\ 4}) \cup {1} = 1$$$. $$$1$$$ is dropped since $$$1$$$ is what we insert here. So finally $$$\text{ss}0 = \emptyset, \text{ss}1=2\ 4\ 6$$$.

There should be a formal proof, but I don't want to figure it out...

We need to show the following conjectures are true:

1) The undo operation is equivalent (or isomorphic) to a sequence of Knuth transformations (defined below) starting at the last affected element of the insertion operation in the canonical form.

2) If $$$x$$$ has the label (defined on page 8, construction of subsequences and partitions) $$$y$$$ ($$$x$$$ is inserted into row $$$1$$$, column $$$i$$$ when $$$y$$$ occupies row $$$1$$$, column $$$i-1$$$), $$$x$$$ and $$$y$$$ are assigned to the same subsequence in the code.

Assume we want to undo the insertion $$$a_1, a_2, ..., a_m$$$, $$$a_1$$$ is some element in $$$w$$$ and all $$$a_i$$$s for $$$i>1$$$ are bumped entries. {$$$a_i$$$} is strictly increasing. We firstly focus on the case without "cut-off", namely the whole tableau is monitored. Row $$$m$$$ is the last row of the insertion path and the row with the number of entries changed by insertion.

Denote the current $$$P$$$ tableau by $$$P$$$. We may assume the column index for $$$a_i$$$ is $$$c_i$$$.

The canonical form $$$\text{reading}(P)$$$ looks like

$$$...\mid ... a_m \mid .... a_{m-1} \mid ... \mid ... a_1 ...$$$

$$$a_m$$$ must be the last entry of row $$$m$$$ by definition. And in fact, {$$$c_i$$$} is weakly decreasing.

One fact is that $$$\text{reading}(P)$$$ is a concatenation of several strictly increasing substrings (entries of the same row written from left to right), satisfying that the first elements of all substrings are strictly decreasing (by definition of standard YT).

Define an algorithm $$$A$$$ being a sequence of Knuth transformations that start at the position of $$$a_m$$$ in $$$\text{reading}(P)$$$, and goes from left to right: 1) If Knuth transformation can be applied onto the current element and the following two elements (if exist), do the Knuth transformation; 2) Move the cursor to the next element, goto 1) unless reaches the end of the sequence.

Let's start with $$$a_m$$$. Obviously $$$P_{m-1, 1}$$$, the first entry of row $$$m-1$$$, which is right after $$$a_m$$$ in $$$\text{reading}(P)$$$, is smaller than $$$a_m$$$ unless it doesn't exist. If it doesn't exist, we only have one entry in the tableau, which is trivial. Generally, by definition, $$$P_{m-1, c_{m-1} +1}$$$, the entry right after $$$a_{m-1}$$$ is larger than $$$a_m$$$. Then we will do type-4 Knuth transformation until it becomes $$$...P_{m-1, 1}, P_{m-1, 2}, ..., a_m, a_{m-1}, P_{m-1, c_{m-1}}$$$. And several type-1 transformations follow until $$$a_{m-1}$$$ reaches the end of row $$$m-1$$$. Then we treat $$$a_{m-1}$$$ and following elements similarly until $$$a_1$$$ is swapped to the end of the whole sequence. (Lots of corner cases are undiscussed, but I believe they don't lead to any violation.)

We can then prove that (no proof here) Conj (1) is true, and if any entry $$$x$$$ has label $$$y$$$, their "subsequence assignments" after such transformations are guaranteed to be the same once they are swapped to the first row. Before the first undo operation, $$$a_1$$$ has no label (inserted in the first column) or belongs to the same subsequence

ss0as the element before it. So Conj (2) should be true.The code might not be as complicated as I think. Maybe it just uses the construction method from the paper. However, I still have no idea why the code tries to find an adjacent subsequence block

subsequence2...Upvoted for the effort

The code is mine, but don't remember the details anymore :( however I would very much like to understand if there is a subquadratic algorithm (for large values of K)!