TLDR: Link

Star the GitHub repo if this was helpful :)

Have you ever been stuck on a problem and just wanted a small hint, but the editorial revealed the whole solution? Has an editorial you read ever skipped a small detail that made it much harder to understand?

I've developed a chatbot that helps explain CP editorials and gives hints for problems. The aim of this bot is to help you practice and understand solutions faster and more efficiently, and it has helped me with more than a couple of problems. It combines an LLM, scraping, and prompt tuning to understand CP problems and then explain them in a way that's simple to use yet very powerful.

(Not so) recently, I asked the community for testers. A lot of their feedback has been integrated, but there were many feature requests that I don't have the capacity to implement. If you're interested in contributing features to this open-source project, submit a pull request on GitHub or HuggingFace (the latter is preferred).

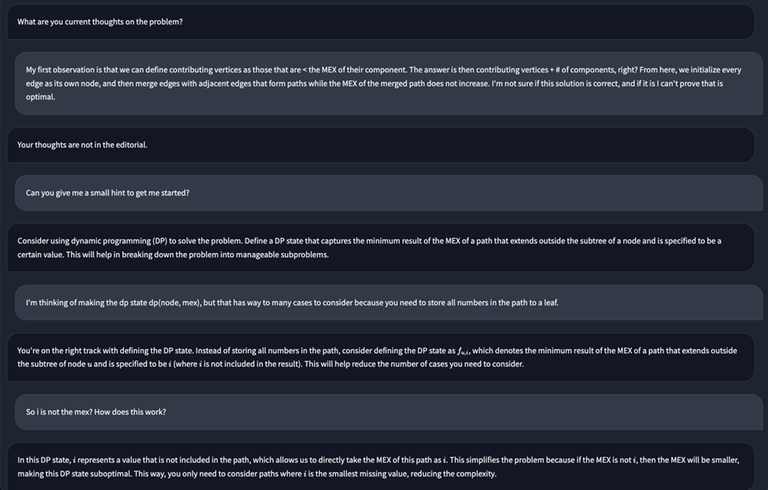

Short Example:

I hope this bot is helpful.

How good is it? I mean, is it really helpful when it comes to non-trivial problems?

Great idea btw!

Thanks!

Hm, that's a great question. The main concern would probably be hallucinations/false outputs, which have been limited through the prompts (but this also limits the LLM's creativity).

From my experience with testers, helpfulness depends more on editorial quality than on problem difficulty, because beyond a certain difficulty the LLM can't understand much from the problem statement and gets all of its information from the editorial. This probably isn't the case for easier problems, but I haven't tested that myself.