Hello, Codeforces!

I'm excited to invite you to participate in MaraTON Challenge 1, our first marathon in collaboration with the TON Foundation. It's great to see our partnership with TON thriving and moving forward.

The challenge is brought to you by TON blockchain engineers, some of whom are community members. Special thanks to SpyCheese for preparing the problem!

The competition will run for three weeks, starting on Dec/23/2024 16:35 (Moscow time). Get ready to dive deep into the technical details of the blockchain world — it's worth it.

Winners will receive amazing prizes:

- 1st place: 8,000 USDT

- 2nd place: 5,000 USDT

- 3rd place: 3,000 USDT

- 4th–10th places: 2,000 USDT each

- 11th–20th places: 1,000 USDT each

Additionally, there will be bonus prizes for the leaders throughout the competition. Starting December 25th and continuing daily until January 13th at 13:35 (UTC), we will award bonus prizes to the top five leaders of the day:

- 1st place: 250 USDT

- 2nd place: 125 USDT

- 3rd place: 75 USDT

- 4th–5th places: 50 USDT each

Please note that these prizes will be distributed after full testing of all submissions made by 13:35 (UTC) on the respective day. The results are published here: https://codeforces.net/r/maraton-1-leadership-awards

Join the competition — it's truly something new and exciting on Codeforces!

* All payments will be made to a TON wallet. All payouts (including those for daily leadership) will be issued after the final results are announced.

* In case of ties in the leaderboard, the participant who first achieved the score will rank higher for prize distribution.

* Please note that this is an individual competition. Collaboration, discussing ideas, or using shared code is prohibited. Please adhere to the rules regarding the use of third-party code.

UPD: Here is the link to the table with the current progress on rewards for daily leaders: https://codeforces.net/r/maraton-1-leadership-awards

sounds good)

except I must know the count of problems

will be rated???

nope

Exciting challenge ahead! Good luck to all participants. I hope the problems will be enjoyable and fun.

where is magic

gpt o3 will win. all top 20 prizes.

hahahahha

Number of problems and score distribution?

whats a marathon

long contest. In this case 21 days

Will it be rated?

In the future could be a interesting type of round, but rn noup

i want to win a tshirt

WHEN THE NIGHT CRAWLS

ALL THE MONEY ALL THE HYOOS AND THE ALCOHOL

Am I allowed to take part in other contests while being registered for this one?

yeahh u can

rated?

Woohoo, I liked the button part!

is there a CP contest or blockchain?

Bangali can relate the name of this contest LOL

Yeah, sure.

Although I might certainly never be close to winning one, can you consider issuing the prizes in some other stablecoin?

USDT is fine. Consider not living in a totalitarian shithole called "EU".

Ok

Accurate name and title

What kind of problems can we expect to see here? Any examples??

how to reach tourist?

by getting to pupil first

get the joke man

Why are the details of this contest secret?

Do we have to submit the problems daily or can we also enter the contest a few hours after it begins on the first day?

would it be rated ?

Please someone answer

From other comments apparently no

Do we need to learn some blockchain algorithms (like cryptography e.g.) in order to have good performance in the contest? (or only cp algorithms is enough ?)

Also, will there be pretest and final test like other ICPC challenges?

Will this round be accessible for a newbie?

will it be rated? can i participate in other contests while participating in this for 21 days?

Is this rated ??

maybe

Oh i see :-)

This contest have a system testing, then leaders' prizes are based on provisional standings?

It's C++, not funC

The answer is in the question..awa

Could you share some background about this contest, or at least what should I know? I would like to know if CPers are a good fit for this problem, or is this contest not for me?

I only have math sword and algorithm gun.

Is there any submission number limit for this contest?

Sorry, I mean, how many times can I submit at maximum during the contest.

"This set will consist of blocks from the TON mainnet that will be generated after the end of the contest." Kinda sus

I have found an easter egg in examples:) Thanks for 20 ton!

oh darn I should've thought of Caesar cipher originally when I decoded that b64 string a few hours ago LOL. Good for you though!

Bro i just realized what you talking about. I though all of the example base64 can only converted to BufferSlice, but base64 on lz4 example can be decoded to regular string, and when decode it using caesar cipher algorithm with shift 3, its become 24 random words. And its same length with TON Wallet recovery phrase.

But they were deposited in April! How is that?

Tshirt?

Rated or Unrated ?

Will there be only 1 task for 3 weeks?

what will be the answer for n=32; in problem (a) i think to slove this problem like that: 32---(8,8)-(2,2)-(2,2). my answer is 6

good

Merry Christmas!

is it beginner friendly?

NO.

Here is the link to the table with the current progress on rewards for daily leaders: https://codeforces.net/r/maraton-1-leadership-awards

21 days in total. Do you think that after a week, we will still get better and better results from the same people?

Anyone able to run solution locally on apple silicon?

I am getting "Undefined symbols for architecture arm64:"

Hi! This seems very interesting. I have two questions:

1- Is there any place where I can find some sort of reference for the td library they use in the example? It's C++, but it's their C++. It feels kind of Rusty. Maybe I'm just not familiar enough with actual production C++ code.

2- While reading about the serialization of the BoC (here) I didn't understand the step of, and I quote:

that's after the descriptors are calculated. I don't understand how it works and how it can be used in reverse.

Did someone encounter

clang++: error: linker command failed with exit code 1120 (use -v to see invocation)for some code that can run locally with gcc and clang? For local testing I am using the docker image.Is it rated ?????

Hey, could you please take a look a this PR: https://github.com/ton-blockchain/ton/pull/746

In short — codegen produces code like

cs.advance_ext(0x20003)to advance 2 refs and 3 bits. Howeveradvance_extimplementation treats them as 2 bits and 3 refs and therefore fails to advance for refs. The implementation is also not aligned withfetch_subslice_extandhave_extlogic.Why is this important:

unpackusesfetchwhich isfetch_to(get_size())TLB::get_sizeis set toget_size_by_skipget_size_by_skipcallsskipproduced by codegenskipcontains calls toCellSlice::advance_extunpackmethods are brokenYou can spot the issue by looking at

InMsgcodegen:Probably got something close:

I am quite sure, that std::terminate should not be called in any kind of parsing.

Got this with following code:

P.S. understood, why it doesn't work, but it still shouldn't be calling std::terminate

It would be useful to have build with debug symbols too!

Not cool:

And can't go into std_boc_deserialize std_boc_serialize. Why not?)

UPD. Found CellSlice in another .zip, but still, debug symbols are pretty useful!

Can you make it possible to send larger files like ~150 kb?

Will there be more maraTON contest like this, seem practical and exciting also.

When did the time for daily awards change from 12:35 to 13:35? I feel like an announcement should've been made.

agree.

agree.

Magic

Will the solutions become visible to everyone?

good job

What were the ideas achieving top 20 results?

I just used a bitstream based zip (lzma) + some evolutionary search for best order of cells achieving 36th.

Great contest, thanks to the organisers. I don't often do traditional competitive programming, and instead prefer solving tasks without definitive solution, like RAIC, codingame contests, or CTFs. This marathon fits into this category very well, and I enjoyed it a lot.

I went with splitting my approach into 2 parts — picking best compression algo and removing "structure" from the data.

For the first part lzma (7z) showed the best results. Initially I tried lz4 with higher compression level and zpaq. But that thing alone would land you about 30+ according to my estimates.

For the second part I spent some time understanding the block structure and writing custom cells parsers. Basically each of them can load original cell, store compressed cell, load compressed cell and compute original cell. This approach had some drawbacks — I found out that

advance_extisn't really working and then I realized that the library provided by organizers misses extern symbols on Windows — it was linking just fine on mac and linux, but failing on CF, which uses Windows. Both of these issues required time to debug.In the end I did the following optimizations:

Removing

extrafield fromHashmapAug— it can be recomputed based on the data. Unfortunately there are maps that cannot be optimized this way, as they're part of Merkle update, and therefore some cells are replaced with pruned branches.Embedding refs with only one parent. In BoC all cells are stored as a list with up to 4 refs indices written at the end of the cell. Usually 2 bytes are required to specify cell index. However there are many cells with only one incoming reference (5k out of 7k in the first test block), so instead of writing an index of the cell we can embed the whole cell in place, saving these 2 bytes. We only need 4 bits to indicate what ref is embedded, and for that we can reuse some bits of cell header. This also improves compression rate — which was a good surprise.

Removing any extra information where possible. Some cells start with header tag, some contain hash that can be recomputed, some numbers are constant. This doesn't give a lot of points, but I did it where it was easy to do.

With additional time I'd probably focus on compressing Hashmaps (both regular and Aug), I had an idea of only storing leafs as an array to avoid having too many intermediate nodes. I didn't check if that would work, and whether this is something already handled on the compression side though.

Another interesting part of the competition was to fit all of this into the CF limit, so along with blocks compression I wrote a simple cpp minifier — kinda compressing code to compress blocks :)

Post-mortem, 19th place.

I am a frequent participant of different kinds of marathon contests, but this one was a new experience for me. Usually, the problem statement is relatively concise (i.e. fully understandable in a couple of hours), and here, we had effectively an unlimited research potential due to more and more nuanced specifications of the given data. This time, I have only written the contest for multiple days and haven't done any deep research into the problem, but on the good side, it makes my post-mortem accessible by the people who also had only a general look at the problem and applicable for a broader set of other problems. Having said that, I want to highlight that I just borderline achieved a price, and my solution is vastly inferior to the solutions of the very top participants, who, most likely, got a much deeper understanding of the problem. I look forward to their post-mortems.

The first (and somewhat strange) notion is that authors, in their solution, use a weak compressor, and there is another much stronger compressor called td::gzdecode. I considered something like that very probable because multiple participants got equal results early on, implying that there is a straightforward way to do so. It seems like a checker that participants are capable of reading source libraries, which is rather strange in my opinion but follows the vibe of this contest. I have experimented locally with other compressor libraries and couldn't improve the score meaningfully (+0.1% improvement at best). I assume the embedded compressor is almost frontier, simplifying matters — participants do not need to install any stand-alone general compressors independently.

The second idea came from experimenting with the data inside the cell. Most likely, this data has some deep meaning, but I experimented somewhat randomly and took what worked best. I will list what worked with minor motivation:

1) It is helpful to separate "special" cells from common ones. Special cells are generally very similar to each other in terms of bytes needed to store the information, so their second information byte can be compressed very well if grouped together.

2) It is helpful to group the first and second data bytes of each cell; they are probably restricted in terms of what they can store. Splitting the third or any further bytes does not help anymore. In the special cells, it is also helpful to group their last two bits.

3) A good idea is to reorder cells so that ones with similar data go next to each other so that the compression algorithm can use its' buffer to shorten the resulting string. There are probably smart ways to do so, but I just ordered cells based on the number of data bytes they have because this information is stored anyway. It gives a relatively substantial improvement. Also, for some reason, it is a bit better to start with the largest number of bytes.

The third set of ideas is related to how the graph structure is stored. As a big combinatorics fan, I find this part the most interesting. It is reasonable to store edges in the order of dfs; it is even advised in the white paper. The graph is almost a tree, and, in most cases, we don't need to store the edges explicitly. We can store the number of references from a given vertex, and if we visit an unused vertex, we give it the first unused number. By that, we only have to store the "back" edges, i.e., those that go to visited vertices. Another minor improvement comes from the idea that the back edges typically go to the vertices with close numbers, so storing the differences with the previous back edge is more efficient.

As a result of these optimizations, the number of bytes needed to store the general information about the cells and the graph is relatively negligible—even decreasing it to 0 would not put the solution anywhere close to the top-3. Therefore, I assume that further improvements come from a better understanding of what the data inside the cells actually means.

If you are interested in an exact implementation, here is the code of my final submission -- https://pastebin.com/wKs8MwWW

I am looking forward to the next TON contests and marathons in general.

First of all, huge thanks to the organisers and the contestants! I've really enjoyed the contest

Let me share some of my ideas with you:

Surprisingly enough, compression itself is not the main part of the contest. I might talk about it later, but the main part is separating different types of compressible data and incompressible data apart. And different tweaks and tricks as well of course

One of the definition of truly random data is the data you cannot compress. SHA-256 is a cryptographic hashing algorithm. It produces almost random output. And it's used for all the hashes inside TON cells. Let's store all the hashes separately from other data

How can we extract all the hashes? The right answer is "just parse the root cell according the scheme", since we know every field which could store a hash. But there are two complications. It's not that easy to parse some cells because information about the scheme might be lost (for example, inside Merkle Updates or some raw value cells). Also it will cost you a lot of source code, and we actually have to care about this resource. So instead I just called Block().print_ref and tried to parse output looking for any hashes

Some of the hashes actually might be derived from existing information. I'm talking about cell's hashes (for example prev_transaction_hash) and more importantly hashmap's keys. Let's extract values from these hashmaps (if possible) and store them separately. So we could transform original Block to Block with empty InMsg, OutMsg etc dictionaries, store these messages separately and serialize not only one root Block cell but new root Block cell + all extracted cells all together. During the uncompressing process, we should apply reverse transformation

As for cell's hashes stored in the data, we could replace them to a reference for specified cell. During the uncompressing process, we should run through all the cells in the reverse dfs order and replace all these references to original cell's hashes

Speaking about cell's refs. It's beneficial to store not absolute but relative index of them. Same thing applies to root indexes from bag-of-cells info. Surprisingly enough, I couldn't earn extra points by adaptive reducing number of bytes to store a reference based on it's value. Good compression algorithm takes care of it by itself

There is at least one more type of data which could be optimised out as long as it derived from the actual data. I'm talking about cell's sizes of the special cells. We could just drop these sizes and recreate them during the uncompressing process

If you ask me about my the most favorite optimisation, probably I'd chosen this one. I always thought about overhead for storing non full bytes at the end of a cell. But straightforward approach of storing numbers of bits fails. I came up with idea storing together end bits of equal size. If last byte of a cell has only 1 meaningful bit of information concatenate all such bits together in one bit stream. Do such thing for all other reminders (2, 3 up to 7). Surprisingly, it doesn't improve final compression at all! Then I noticed that we have significantly more cells with exactly 4 bits in the last byte of data. I thought that this was the sign, since it's very easy to combine 2 such 'ends' together to one single byte. And it worked actually very well

I think I have some other ideas, failed approaches and other some stuff to share. But the message is already really huge! Let me know, if you are interested in additional part of my ideas. And feel free to ask questions!

Amazing contest,thanks to the organizers! Here are few of my main ideas:

1.About compression algorithm,which is at least some kind of important since it basically determines what kind of optimization you should implement to your serializer.I tested a range of compression algorithms and finally chose PAQ9A (https://github.com/FS-make-simple/paq9a). PAQ is a suite of compression algorithms designed specifically for compressing text, the general idea being to fit the likely next bit of text through a predictor.Because of this, its very sensitive to the regularities of the text. This is the reason why our subsequent optimization focuses on making the raw text more ordered rather than shorter.

2.About serialization, I think the most basic optimization should be this: we reorder the nodes according to the bfs sequence, so that each so-called “new son” of each node can be represented as “its own number +k”, where k is a number not greater than four. We can use a 4-bit mask to describe whether each son satisfies this requirement. For the sons that fulfill the requirement, we can save 2 bytes that would have been used to put their numbers.

3.Another big optimization is related to exotic cells. After going through the source code, we can find the requirements that these exotic nodes need to fulfill. For example, the information size of all exotic nodes is fixed, the first and second types of exotic nodes must be in the form of hash+dep, the third and fourth types of information do not need to be stored and can be calculated during decompression, and so on.

4.This optimization still boosts tremendously, but I'm not sure why would this work. We stumbled upon the fact that having the information of ordinary cells stored in the form of 2 bits per byte instead of 8 bits reduces the optimization rate significantly. We suspect this has something to do with the predictive friendliness of PAQ9A. The rest of the sequence does not have this feature.

5.This actually consists of a large number of “small optimizations”. We find that switching the order of information is beneficial for the predictor to better predict the context. For example, we store refs information separately from info information, and we can also store “regular” information, such as depth in information, separately from “irregular” information, such as hashes. In addition, since we have already put the info_size in advance when storing the info, we can sort the info according to the info_size before storing.

6.Some optimization of the tuning engagement details is also necessary and is worth about 1000 points.

7.The last point may be some kind of heuristic. In our early tests of compression algorithms, we found that “feeding” an input so that it learns certain patterns beforehand improves the compression performance on other data, which I call “warming up”. This was not reflected in my final program, as I had a commitment on the last day of the race, and it didn't seem to be enough to make up the difference between me and third place. I had initially envisioned manually generating boCs to “warm up” more data, but randomly generated boCs didn't seem to improve, which may be related to some of the patterns in how the blockchain works in practice.

4-th place, let me write something.

It took me 2 days to find a better compression program than gzip. I tried several programs, like lzma had too much source code, and others bad performance. I tried the paq series and found 9a the most optimial one. Suprisingly there are other contestants (2+) used that. The submissions that used >1s on each case might be also using this I think.

Juring the first days I was optimizing the paq9a algorithm, and did some easy changes on the raw serialized boc.

Then I spend days understanding how all cells are stored, and wrote my own serialization based on mode=0 serialization.

It includes:

seperate graph and contents. This needs adjustment to get more scores.

let ref_i become ref_i-i

sort the refs of cells with 2refs.

store first 8 bits of normal cells with the graph.

paq9a is a algorithm based on prediction. A 0~255 char wont perform well. it should be turned into 4 (0~3) chars. 8 bits wont do better (worse).

Then I tried to understand the graph before mode=1 (mode=31).

It is index-ed similar to bfs order, based on hashes.

with this, the refs trick preform better if we change ref_i-i to max_index-ref_i.

some parts should be stored at 0~255 chars instead of 0~3. To seperate different parts of the boc, when turning the 0~3 to 0~255 char (like 000000xx) we can modify the 6th bit to like 000001xx and other part 000010xx.

exotic cells content are no worth investigating. The main worth of them is their type. Ther type decided their number of sons, and length of contents.

this turned out better because the graph is more understandable and I can do more optimizations.

sort contents with their length.

On 10th day I was on rank 4 with many points ahead from 6th. I prefered to do some other things, so I just quit and end out reaching the 8th place.

Thanks for the great challenge! I'm in 12th place, and here are the tricks I used in the solution:

Use a better compression algorithm than LZ4. (I used td::gzencode/decode, which is part of ton_crypto_lib). I experimented a bit with zstd, but didn't get any improvement and decided not to invest in this part of the solution.

Store sha256-like data (e.g. in pruned cells) separately from others because of their incompressibility.

Store the first two bytes of each cell separately.

Some cell data is sometimes equal to the hash (level = 0) of another cell. During compression, I replace such data with a cell reference.

Store the graph as an array of delta-encoded + byte-reordered refs to children.

Use some heuristics to reorder the cells (DFS in/out order, move special cells to the end, move cells with the longest repeating substring to the beginning). My solution tries different combinations and chooses the best one.

On day 20 of the competition, I downloaded and analyzed the last 10,000 blocks, found the 200 most common sha256-like byte sequences, saved them in the source file, and used them in step 4 as well. This trick got me a few points during the pretests and i suspect many more points in the final tests. I was 16th before the final tests, and 12th after.

I also noticed that each block contains one MerkleUpdate cell, which can be replaced with an empty cell and restored during decoding. But this only gives a few points.

Lots of fun in this contest, still had trouble improving past 6th even with a good quality compressor. I think there was more needed regarding the patterns in the data.

Compressor: 12 input and 5 mixers neural net (context mixing) with brute force optimized parameters and models. For data processing, cells are sorted reverse size. Good model contexts include: looking at the previous cell's byte in the same column, having special context for first few and last few bytes, the first byte in the cell, and the column, column % 3, column / 4, etc..

References are compressed with the two cell header bytes but separately from cell data. Also the cell reference difference is encoded instead of the absolute value to provide a solid gain.

There is a warmup file which is just one of the 25 sample files embedded as base64 in the source to warm up the neural networks.

Unimplemented ideas:

Actually model TLB fields for data within cells (i.e. all use_dest_bits have similar values and should share probability context), too much code to implement.

Deduplicate SHA hashes in data by searching bytes of all cells (small improvement) and referencing the with the index cell that has a matching hash, same for CRC hashes.

More experimentation with ordering of the cells.

Other comments mentioned using 2 bits contexts, maybe this would have helped too.

Hi!

I had very limited time, so my approach was simple: bit-wise arithmetic coding using probability than current bit is 1, p1. For estimation of p1 I used bit contexts of lengths L=2,5,8,11,..,26: ctx02,...,ctx26. For each possible context there were bit statistics of encountered "1" and "0" bits, counters c1, c0. Statistics were mixed like this:

c1_tot=c1[ctx02]*w02+c1[ctx02]*w05+c1[08]*w08+...

c0_tot=c0[ctx02]*w02+c0[ctx02]*w05+c0[08]*w08+...

And final probability is p1=(c1_tot+1)/(c1_tot+c0_tot+2). Weights depend only on length of context, the longer, the higher confidence of prediction, and weight should be higher. Good estimation is to make them proportional to square of length of context, e.g. 1,4,9,16. I slightly tuned weights by hand to improve compression.

After encoding of current bit with p1 we update statistics for all lengths of context. Decoder must use the same p1 for each decoded bit, it can be done using the same statistics gathered the same way on the fly on both encoder and decoder side. It works surprisingly well for such simple method.

Longer max context length can give better compression, but it's slower and requires more memory. To keep the statistics I used simple table, as the longest context was 26 bits, it comfortably fits within memory limit. Of course longer ones are possible to keep too, e.g. using hash tables.

Context was forced to fixed value at the beginning of each cell to localize statistics for two header bytes. Value can be anything as long as it's unlikely to be found in body of cell. Also I did trivial preprocessing, for each cells I subtracted index of current cell from all its reference indexes. It leads to smaller positive values, more zeroes in bitstream, better compression and is trivially reversible. At the start of reference indexes context was forced to different fixed value, again to localize statistics for reference indexes values.

Also to save memory and maintain high speed I used approach similar to PAQ compressors: one byte state represents statistics and can be updated with table lookup. Each byte corresponds to p1,p0 pair. We can represent it in one byte with reasonably well coverage of possible p1/p0 ratios as p1 and p0 cannot be high simultaneously as we can reduce them to maintain similar ratio, but it's another story.

Of course it all can be improved using information about cells structure to make better predictions.

An awesome contest! Thanks to the organisers!

General thoughts

Risking to state the obvious, but I think hosting more marathon-style competitions could be a great way for codeforces to grow the audience and improve retention. I haven't done programming competitions since 2019 and hardly even programmed first hand in the last several years. Still, I've decided to participate. Also, long contests are less demanding for staying sharp at implementing more or less basic things super quickly and give enough room to find free time for experiments.

I was actually pleasantly surprised to end up in a prize spot (although, only barely). I didn't even get to meaningfully parsing cells data or using any more sophisticated compression algorithms than lz*. Also, on the second to last day I somehow didn't realise that even 20-th place get a prize and simply went to sleep instead of optimising the solution further :)

Background

I'm not proficient in either heuristic contests, compression or blockchain, so I've started from TON documentation and reading about core principles behind archiving. It turned out there are a few ways to look at data compression. For instance, one can focus on finding "redundancies" in a given data or on estimating a probability model from which the data was "sampled". Both views were at the core of my final solution. As a side note, I found the idea of arithmetic coding with dynamic symbols probabilities to be very beautiful (although I didn't even implement it, lol).

Solution process

The first thing I noticed is that there is a bunch of bits in BoC serialisation which can just be omitted. For example, a entire block header with "number of roots" (which is always 1), etc, cell's level, subtree hashes stored in some special cells (they are only used for validation purposes during deserialisation).

Also, if edges always go backwards, one can calculate the minimal number of bits to store them and use exactly that number. However, interestingly, this didn't provide any boost after other optimisations described below.

Another thing came up when I was reading about LZ compression. Basically, it stores data as alternating pieces which are either "position in already decoded data, number of symbols to copy" or "string of symbols (called literal), number of symbols". In the description there was something along the lines "it's hard to find matching substrings so we use 64kB window and a hashmap mapping first 4 bytes to its last occurrence". Wait, what? What is the problem to just find the exact longest match? Having said that, I went ahead and implemented my own LZ compression based on suffix array and going through data with std::set. It gave a meaningful boost over the baseline solution at the time. Adjusting hyperparameters (e.g. minimal match length) also helped the score a bit.

Further, there was an intuition that similar data pieces are better to be closer to each other (e.g. a lot of bits were spent on encoding "match offset" and "match length"). It helped to realign the data and store d1's of all cells (i.e. number of refs and is_special), then all data sizes, then all refs and then all data.

After that, I threw per-byte huffman coding on top of the serialized and lz-ed data (huffman statistics were estimated from the sample test set and hardcoded into source code).

Also, I've built a "dictionary" of frequently appearing substrings in 25 sample tests and also hardcoded it into the source code. It further improved the score (the approach is to append this dictionary to the beginning of the data so lz can reference it naturally).

The above was the first milestone. After that, I got back to the documentation to actually learn something about the nature of data within cells. Shortly, there was another realisation (kind of obvious) — up to this point I've been working on bytes level instead of bits, while bitstrings inside cells are not aligned to 8 in any way!

So I switched to treating data as a bitstream and it actually improved the compression rate. But it turned out that suffix array construction was too slow now. Thankfully, there was a different approach: build suffix automaton of the reversed string and look at the suffix links. They form the suffix tree of the forward string. The average leaf depth was about 36 on sample data, so one can just take any suffix and iterate over its parent in this (implicit) suffix tree. I find this idea very cool, as it is not only ~20x faster and simpler in my implementation, but also allows to naturally skip all matches with a length less than a given threshold.

Another helpful idea was store lz match starting positions not as "offset to the current position", but as "offset to the current cell id" + "offset within a cell we have a match with". The intuition is that certain parts of cells should be matched more frequently than others.

At this point, there was not a lot of time left, so I did the following: calculated histograms of lz tokens ("literal length", "match length", "cell id offset of a match", "offset within the matching cell") over the sample data, hardcoded them to the source code and then used huffman coding during compression. Interestingly, a constant dictionary of frequently appearing bitstrings didn't help this time.

I enjoyed the process a lot, so even thinking about writing a more detailed blog about my experience when I have time :)

Again, thanks for the competition!

Forgot to add, I kept the code at github.

Made it public just now: https://github.com/PavelSavchenkov/MaraTON_Contest_Solution

I must start by saying a huge thank you to the organisers of this contest—it’s been nothing short of extraordinary. The challenge was not just about compression; it was a proper test of problem-solving, algorithmic ingenuity, and attention to detail. I thoroughly enjoyed every bit of the process, from the initial brainstorming to the fine-tuning of my approach.

The block graph structure was a fascinating starting point. Diving into it, I found myself analysing the block nodes, reordering them based on size to uncover optimisation opportunities. Using a combination of Suffix Arrays and Binary Indexed Trees to detect repeating patterns across nodes felt like working on a blend of theory and practicality. Identifying those patterns and cleverly replacing them with references felt immensely rewarding.

One of the standout challenges was handling exotic cells. With their unique structure, these fixed-length blocks opened up a brilliant opportunity for targeted compression. By manipulating the first 16 bits and zero-padding the remainder, I could reduce storage requirements. It was a great reminder of how small, deliberate changes at the bit level can lead to big gains in compression.

Preprocessing was another critical aspect of my solution. I reordered the data layout to maximise predictability and compression efficiency, storing metadata upfront, followed by data sizes, references, and the actual content. This alignment improved the results when running the compression algorithm.

The combination of Suffix Arrays and sliding windows proved highly effective for pattern matching, allowing me to replace overlapping substrings with compact references. Integrating these optimisations ensured minimal redundancy and improved overall compression.

I also learnt a lot from other participants' approaches. For instance, I wasn’t initially aware that gzip compression exists within the TON library, nor did I know about advanced methods like 9a, which turned out to be highly effective. I just tried with lzav(https://github.com/avaneev/lzav/blob/main/lzav.h) but it didn't improve a lot. I'm happy to see such diverse solutions for the same challenge.

I'm really new to this kind of contest but it provided an excellent opportunity to explore advanced techniques, from suffix-based pattern matching to bit-level optimisations, in a real-world compression task. The structured yet open-ended nature of the problem was both challenging and enjoyable.

Thanks again to the organisers for a top-notch technical challenge.

16th place solution (or ~3rd if not for RE on 11 final tests)

My approach could be roughly divided into 3 phases:

1. Removing some redundant information in

Blockstructurea) in

BlockExtrathere are fieldsin_msg_descrandout_msg_descr.in_msg_descrhas type(HashmapAugE 256 InMsg ImportFees), which basically means trie over bit representation of keys. In each node with several childs there is "sum" ofImportFeesover this node subtree. Keys in this trie are hashes of values (i.eInMsg). We can notice that there is some redundant information — namely keys (which can be reconstructed from values) and "sums" of subtrees (which can be recomputed during tree construction) — which can be omited during serialization to save space. So before compression I took this tries, extracted values from it and replaced trie with linked list of values. The same forout_msg_descrb) also in

BlockExtrathere isaccount_blocksfield. It's also trie, keys of it are account addresses and values areAccountBlocks, which basically are collections ofTransactions. From data I've noticed, that major part of theseAccountBlockconsist completely of transactions, which are stored inin_msg_descrandout_msg_descr. So I just removed these blocks and then recreated it during deserialization.c) In root

Blocktype there isstate_update:^(MERKLE_UPDATE ShardState)field. Its type basically means that it contains twoShardState, some of its parts are equal and are replaced with Prunned cells, others are not and are present as is.ShardStateis also some kind of trie withDepthBalanceInfo"sum" in each node. Some of these sums can also be reconstructed from information in children of node. So I detected such sums and replaced it with empty string and then recreated during deserialization.2. Replacing some data in cells "payloads" with links

a) many participants already mentioned that some cells contain hashes of other cells. I computed hashes of all cells and then tried to find it in other cells data. Each occurrence I've replaced with reference to cell the of which it was

b) Special case is

prev_trans_hashinTransactiontype. It's followed immediately byprev_trans_lt. If we can replaceprev_trans_hashwith reference to otherTransactioncell then we can recreateprev_trans_ltfrom it as well.c) I replaced not only hashes of other cells, but also account addresses (with reference ot the earliest transaction for this account)

3. Serializing cells tree and compression

a) I traversed tree of cells in dfs order and created

d1,d2andpayloadsarrays. Inpayloadsthere is duplicates, so I replaced each duplicate with link to its previous occurrence. To make links "smaller" let's group payloads by size and then indices of links would be "small" and can be encoded with fewer bytes.b) There are chunks of equal data in different cells. But, it might happen that they are not aligned to byte bounds. In other words on cell can contain substring xFFAA starting from 8th bit and the other can contain it starting from 13th bit. Gzip (which I used for compressing) operates on byte-level, so it won't detect such match. In order to somehow overcome this problem I did the following. Let's process groups of payloads of equal size in some order. For each group lets try different shifts from 0 to 7 bits. Shift each group, append it to already processed payloads and run LZ compressing on it (not GZip because it's like 10 times slower). Select shift which gives better compression. After it we have array

shiftsc) Empirically I've found that it's better to run Gzip two times — the first to compress

d1,d2,links to equal payloadsandshifts, the second to compresspayloadsand then just concatenate them.I want to thank organizers for such interesting task!

Also I have a suggestion. It'd be a bit better user experiense if during competition we can see not only results on preliminary tests, but also whether solution successfully finished on final tests or not. My solution worked successfully on both preliminary tests and 500 random blocks from TON blockchain. But given the complexity of data in

Blockstructure it seems that there was some corner cases, which was not present in this data and my solution crashed on 11 of final tests. I must have tested on more random blocks. But as the main goal of competion is better compression I feel a bit disappointed by this data correctness issue. Anyway, it was fun and I learned something new, thanks!Huge thanks to the organizers for an amazing contest!

Here were what I had done during the contest:

paq9a(a byte-wise neural network/context mixing compressor) andtangelo(bit-wise) are two of the strongest ones. Bit-wise compressor seems to work better on this kind of data.For the context mixing compressor, longest text matching model was the most important part. I also added some other contexts to increase prediction accuracy:

72xxxH1H2...yyyy,72xxxxH3H4..zzzzhave lots of repeating byte at the same index).x8orx0in hex value).I saw some other kinds of pattern when skimming the data cell but could not go further since I did not have much time to explore the detailed structure of BoC.

The tangelo 1.0 compressor has other models like DMC model, sparse model, indirect model but I needed to exclude them to comfortably fit the time limit of provisional data. Indeed, data in final test cases are shorter than in provisonal ones and my run time was less than 1.5s. Though, anyway, even if I had added these models, I would still stay far behind top 3 since seems removing redundant data, and re-ordering the rest is the most important part of this contest.

One final improvement that I used was to use pre-trained data. We can compress original data into a compressed text. When we decompress the text, the model loads all the weights. Then when we compress the original data again, it only costs a dozen of bytes since the model predict the next bit with near 100% accuracy. Not only that, it also learned important features from the context. For example, when I trained my model with test case 1-006.txt, scores of 1-009.txt, 1-017.txt, and 1-022.txt jumped from 129x to 133x since their contexts are highly relevant. Other test cases also have score increased by 2-5 points. In the end, I loaded pre-trained weights of two test cases 1-007.txt and 1-020.txt. Seems my score jumped from 1284 to 1296 thanks to the pre-trained data.

Ideas that I tried but failed:

I had almost no knowledge about data compression but after this contest, I learned lots of things. I particularly find Matt Mahoney's data compression document and Byron Knoll's thesis really interesting to read and easy to understand.

My code is publicly available here: https://github.com/lientv/maraton2024.

First and foremost, I'd like to thank the organizers for this incredible contest. It was a fantastic opportunity to explore complex data structures and develop novel optimization techniques. Below, I'll outline my approach in detail.

My algorithm was structured around a detailed analysis of the cells, splitting the data into special cells and general cells for targeted processing.

Exotic Cells

Through analysis, I identified that the first 8 bits of an exotic cell define its type, while the next 8 bits represent its level. Understanding the characteristics of each type allowed me to optimize them effectively.

Ordinary Cells

For ordinary cells, I implemented the following steps.

1. Sorting the data-length

Sorting the cells by data length revealed consistent patterns in prefixed and suffixed, particularly for cells of the same length.

2. Leveraging common subarrays

To capitalize on these patterns, I used LCP arrays to identify up to 7 maximum common subarrays recursively from the 15 most recent cells. These subarrays were encoded in the format: xth cell, yth position, length z.

This approach allowed me to efficiently represent the data as a combination of base data and compressed segments, significantly reducing storage requirements.

Some more tricks I used

In addition to the cell-specific optimizations, I applied several global techniques to further enhance compression.

Beyond individual cells, I applied LCP to the overall results to compress adjacent data by up to 8 bits. This yielded a 500pts improvement in local tests.

I built a Huffman tree using the transaction data to encode distributed variables efficiently. During compression, the static Huffman tree was used to encode these variables, providing an additional 800pts improvement in local tests. This step rendered LZ4 compression unnecessary, as no further gains were observed with LZ4. Ofc, I didn't try with gzip but I hope it will be the same as well.

This solution achieved strong results(10th) by focusing on the structural characteristics of the data and leveraging patterns for efficient compression. While there's always room for further refinement. I'm pleased with the insights gained and the techniques implemented.

Thank you again to the organizers for creating such a challenging and rewarding contest. I look forward to participating in future competitions and continuing to learn from these experiences!

This competition presented a unique opportunity to explore custom compression techniques tailored to the characteristics of TON blocks. My journey began with an in-depth study of serialization, starting at the root of the cell. From the outset, I noticed inefficiencies in the provided serialization mode 2. This realization marked the beginning of a path toward uncovering optimization opportunities.

Analyzing the provided

01 - Cells.cppfile proved instrumental in understanding the block's underlying structure. At its core, the block was represented as a Directed Acyclic Graph(DAG), where each vertex corresponded to a cell containing a value(hash data) and a type(exotic or general). Recognizing that the DAG structure didn't have to be serialized in its default form, I began exploring how to reconfigure it for better storage efficiency and customized it, :)Initially, the challenge of storing DAG information seemed daunting. This data-comprising connected cell numbers and their respective counts-accounted for roughly 15% of the total size. However, I observed that sorting the cells by data length revealed an intriguing pattern: the connection counts of adjacent cells in the sorted array were surprisingly continuous. This insight became the cornerstone of my approach to optimizing DAG storage.

To compress the connection data, I adopted a layered strategy, I applied RLE to the array of connection counts. This simple yet effective method leveraged the continuity discovered in the sorted data. Then I constructed a Huffman tree to encode the decision points, replacing verbose representations with compact codes.

Although the number of cells rarely exceeded 8000, storing the connection counts required 13-14 bits per entry(

14*8000*4). When multiplied across cells and connections, this quickly became a sizeable portion of the data and there was a room to use Huffman coding. Through this, I achieved significant savings, paving the way for further optimizations.With the DAG structure compressed, I turned my focus to the cells themselves. To simplify processing, I serialized all cell data into a single sorted sequence(hash data only). This arrangement revealed additional patterns, particularly in how common subarrays appeared across adjacent cells.

I designed a dynamic programming solution; The state variable,

dp[i], represented the minimum compression length up to the i-th location and which maximizedi - dp[i]independently. At each step, I updated the state using 3 strategies such as direct updating(copy the current data as-is), Maximum common subsequence(Identify and compress the longest matching subsequence within a fixed window[i - window_size, i)) and LCP(find the best LCP of latestprecntcells in lcp arrays).Through experimentation, I tuned the parameters to maximize efficiency for typical transaction histories, ultimately setting on a window size of 30 and LCP depth of 3. This method allowed me to compress the hash data efficiently, leveraging both local and global patterns in the dataset.

After completing the custom compression steps, I applied a final layer of improvement. The compressed data was subjected to LZ4, the method suggested in the problem. However, I found that my tailored approach had already eliminated most redundancies, rendering LZ4's impact neglible.

The contest highlighted the importance of understanding the data's structure and tailoring solutions to tis specific characteristics. The DAG's redundancies and the unique properties of TON cells required a departure from traditional compression techniques. By focusing on the block's intrinsic patterns, I developed a method that noy only compressed the data effectively but also deepened my understanding of specialized compression strategies. https://pastebin.com/65ZUg5ZL

Thank you to the ton foundation and this community for such a excellent contest. I look forward to applying these insights in future contests and projects.

3rd place.

Firstly, you need to notice that many cells are the same, so you can merge identical points, turning the graph into a DAG instead of a tree. Now we can consider the Bag of Cell as a DAG with n vertices and m edges.

I have a re-numbering algorithm: I perform a DFS on the entire graph and number the vertices after traversing all their successors. This way, I can easily store the graph by using $$$O(n + m log n)$$$ bits. In short, during dfs, use 1 bit to mark if the vertex reached has not been visited before, and store it's id if it has been visited, or store the number of references if it has not been visited.

Then, I record the length of the info of each vertex and info of all vertices.

And it's important to find a more efficient compression algorithm.

It's the plain algorithm of my idea, so we need to optimize it.

It's easy to find that the information density is already high enough, so the direction of optimization is to increase the compression ratio.

Separating different information can effectively improve compression ratio, so I divided the information into four parts: marks, id, length and info. We need to store whether each vertex is special or not, but let's not worry about this part for now.

Most compression algorithm compress by byte, so we can store information by byte. For example, We can use 1 byte to store a mark, 2 bytes to store a length and so on. For storing info, the highest compression rate occurs when storing 2 bits into one byte, but I don't know why.

Although I said the information density is already high enough, some information is redundant. For special vertices, some information does not need to be stored. Specifically, for four types of special nodes:

Additionally, it can be observed that for Prunned Branch, in most cases, its 8th to 15th bits are always 00000001, so we can use 1 bit to mark if its 00000001 or not. One more thing is Prunned Branch use 16 bit to store depth, but the max depth is 1024 (for Prunned Branch, the max depth is 1023, because the root is not Prunned Branch), so the first 6 bits can be omitted and the remaining 10 bits can be separated.

A mark only contains up to 4 bits of information, but use 1 byte to store it, so we can try to make use of the remaining memory.

Here, $$$b[i]$$$ represents the information from the i-th bit in a byte from high to low. My final solution is:

It's pretty strange. But the other three parts (id, length and depth) reduced by 1 byte for each individual piece of information.

Lastly, a very important point, part of the samples can be compressed/decompressed using compression algorithms in advance to improve the prediction success rate of the compression algorithm.

You can see my code in https://paste.ubuntu.com/p/H4Gj7sM4xP/ .

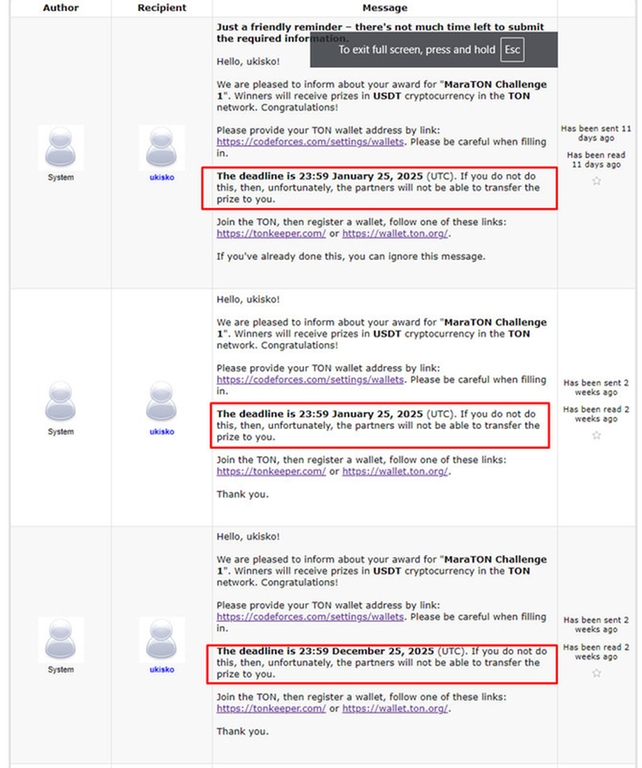

Hi, when will the prize money be delivered? Or did I link the wallet incorrectly? If anyone has any information, please let me know. Thanks.

I don't know why but there's always a 2~3 weeks' delay in the prize delivery.

For example, I recieved prize for CodeTON Round 9 on December 11, and the round was held on November 23.

Hello, Everyone! Please help me.

I'm was award 5rd at "MaraTON Challenge 1".

But I have submitted Ton Wallet address today. Can I get prizes of "MaraTON Challenge 1"?

The first prizes deadline notice was** 23:59 December 25, 2025 (UTC)**

Please let me know it.