UPDATE: the new rating system paper will appear in the Web Conference 2021!

If you're new to competitive programming, you may be wondering: what are ratings and colors? What do they mean?

As a contestant and now coach of the UBC team, I've taken enough interest in the subject to have developed my own rating system, Elo-MMR, which I might describe in a future blog post. For now, I want to talk about ratings more generally: what does it mean to achieve a certain rating or title? How concerned should you be with your rating and title? Might it be harmful to be concerned with them at all?

A Brief History of Contest Ratings

Contest rating systems can trace their heritage back to the Elo system. Elo was devised for 2-player games, with rating updates based on whether a player wins, loses or draws. Starting in 1960, it was adopted by the chess community to numerically estimate the skills of players based on whom they won or lost against.

The first major online venue for competitive programming, TopCoder, was founded in 2001. It generalized Elo to allow for matches in which an arbitrary number of players are ranked. Players would see their "handles" (a sort of nickname or username) colored according to rating ranges: 0-899 is grey, 900-1199 green, 1200-1499 blue, 1500-2199 yellow, and 2200+ receive the coveted red color. Players rated 3000+ get an additional white dot inside their red icon, like a bull's-eye, inspiring colloquial usage of the title "target" to refer to these dozen or so top programmers in the world.

The leading competitive programming site in modern times, Codeforces, arrived on the scene in 2010. Its rating system associated numerical ranges not only with colors, but also with named titles. In the spirit of peaceful sportsmanship, the old militaristic titles were discarded in favor of chess-style titles in 2011's November Revolution of Colors and Titles, which received further updates in later years.

Rating Statistics

This table summarizes the present-day titles alongside some statistics. The numbers refer to subsets of the 99832 players who've competed on Codeforces in the past 6 months, as of May 30, 2021, rated according to the Elo-MMR system which I use with the UBC team. The full list of ratings and source code are accessible here. Official Codeforces rating statistics are similar, and accessible here. Using optimized parallel algorithms, it took about half an hour to simulate the entire history of Codeforces on a modest laptop; it can be made even faster if subsampling-based approximations are used.

| Elo-MMR | Title | Division | Number | Percentile | CF at same rank (spread) |
|---|---|---|---|---|---|
| 3000+ | Legendary Grandmaster | 1 | 8 | 99.99 | 3382+ |
| 2700-2999 | International Grandmaster | 1 | 37 | 99.95 | 3010-3382 (372) |
| 2400-2699 | Grandmaster | 1 | 255 | 99.7 | 2565-3010 (445) |
| 2200-2399 | International Master | 1 | 560 | 99.1 | 2317-2565 (248) |
| 2000-2199 | Master | 1 | 2089 | 97 | 2088-2317 (229) |
| 1800-1999 | Candidate Master | 2 | 3968 | 93 | 1804-2088 (284) |
| 1600-1799 | Expert | 2 | 7103 | 86 | 1564-1804 (240) |
| 1400-1599 | Specialist | 3 | 11003 | 75 | 1328-1564 (236) |
| 1200-1399 | Apprentice | 3 | 16909 | 58 | 1104-1328 (224) |
| 1000-1199 | Pupil | 4 | 23977 | 34 | 818-1104 (286) |
| Up to 999 | Newbie | 4 | 33923 | 0 | Up to 818 |

Codeforces equivalents in the last column were obtained by finding which Codeforces ratings correspond to the same world ranks as the Elo-MMR ratings in the first column. Divisions are suggested ones using Elo-MMR.
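The rank-matching idea behind the last column can be sketched in a few lines of Python. This is only an illustration, not the code from the repository: the function name `cf_equivalent` and the toy rating lists are invented for the example, and it assumes both lists cover the same set of players.

```python
def cf_equivalent(elo_mmr_ratings, cf_ratings, threshold):
    """Return the Codeforces rating found at the same world rank as
    `threshold` on the Elo-MMR list (hypothetical helper).

    Assumption: both lists describe the same population of players.
    """
    # Rank of the threshold = number of players rated at or above it.
    rank = sum(1 for r in elo_mmr_ratings if r >= threshold)
    if rank == 0:
        return None  # nobody reaches this threshold
    # Codeforces rating held by the player at that rank (1 = top).
    return sorted(cf_ratings, reverse=True)[rank - 1]


# Toy data, invented purely for illustration.
elo_mmr = [3100, 2750, 2450, 2100, 1850, 1500, 1200, 950]
cf = [3400, 3050, 2600, 2200, 1900, 1450, 1100, 800]
print(cf_equivalent(elo_mmr, cf, 2400))  # 2600
```

In the toy data, three players are at or above 2400 in the first list, so the answer is the third-highest rating in the second list.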

One interesting finding is that the 1800-1999 Elo-MMR range (Candidate Master) corresponds to a wider Codeforces range than the levels either immediately above or below. While I haven't yet tested whether that's the case, it's suggestive that Divisions 1 and 2 might be better-separated in my system: that is, an in-between player's rating updates aren't unduly advantaged when competing in the weaker division.

Interpretations

Newbie

The start of everyone's journey. At this stage, you might be new to programming. You'll have to become familiar with the control structures and core libraries of your chosen programming language. You might wonder if it makes sense to participate in the competitive programming community at this stage. In my opinion, it's never too early to join!

You might start with sites such as LeetCode which are more oriented toward basic knowledge and professional development, rather than competition and problem solving. Nonetheless, with the introduction of Division 3 rounds, Codeforces is a welcoming environment as well. Some people enjoy learning a programming language by attempting small, self-contained problems.

Pupil

Now you know how to write working code, and perhaps you've taken your first data structures course. However, you don't often know when to apply standard library data structures, or algorithmic techniques such as dynamic programming.

It bears mentioning that the disciplines of computer science and software engineering are so vast that it's quite possible to be a successful professional in your specialization while still being a Pupil on Codeforces. Contest skills which you may wish to develop include: algorithmic fundamentals, mathematical problem solving, and speed and precision of implementation. In my Pacific Northwest region, we prepare Division 2 contests (roughly equivalent to Division 3 on Codeforces) to provide a fun and educational experience for novices.

Apprentice

This is a new tier I added. To me, the word "Apprentice" suggests something between a student (aka Pupil) and a professional (aka Specialist). An Apprentice has completed enough basic training to apply their skills in the real world, with some help. With some additional mentorship, they will eventually become a self-sufficient specialist in their trade. At this level, you're comfortable with some basic techniques and looking to further extend your skills.

Specialist

You've made it! You are applying algorithms and data structures at a professional and competitive level. If your motivation was professional development or job interview preparation, this range might be your ultimate goal. When it comes to algorithmic software engineering interviews, you'll be a strong candidate, even at some of the most prestigious technology companies.

Expert

You have algorithmic expertise exceeding that of a typical professional. As such, students and colleagues may refer to you for guidance. In some local circles, you might be considered an algorithms guru of sorts. On the other hand, your ambition may have driven you to surround yourself with even stronger algorithmists! Perhaps you're thinking seriously about competing internationally, at events such as the IOI or the ICPC World Finals. In that case, your journey has only just begun...

Candidate Master

Welcome to Division 1! As a pre-requisite to the esteemed title of Master, you are deemed eligible to prove yourself by competing alongside the best of the best, on the toughest problem sets that Codeforces offers. Professional whiteboard interviews cease to scare or even challenge you; now they're just an opportunity for you to flex over interesting problem discussions.

Master

Congratulations! At this point, Division 2 contests are no longer rated for you, and probably not that interesting to you either. To signify the magnitude of your achievement, there's a sharp transition from the bottom of the rainbow toward the fiery colors at the top. You are a formidable competitor in your region. In most regions of the world, you have a strong chance of advancing to the IOI or the ICPC World Finals.

International Master

Similar to Master, only that you're considered formidable even on the international stage.

Grandmaster

The coveted red color comes with considerable respect, even fame, in the competitive programming community. Other competitors, total strangers to you, may recognize your handle and come to you for advice. People aspire to know even a fraction of what you know. Your fast wit is awe-inspiring. You might try to win a medal at the ICPC World Finals.

International Grandmaster

Similar to Grandmaster, only now your fame extends internationally. Your handle is familiar to the entire competitive programming community. A team of IGMs would be slated among the favorites to win ICPC outright.

Legendary Grandmaster

Similar to Grandmaster, only now your fame extends internationally and across time as well. Your achievements are of historic importance to the community, pushing the limits of what's thought to be possible. Colloquially, your color is a variant of red called "nutella": analogous to the "targets" of TopCoder, the white bull's-eye is substituted by a black first letter in the style of the Nutella logo.

This is another title that I once suggested, and which was eventually added. We really just needed a shorthand for "programmers who stand a chance against tourist" :P

Concluding Remarks

So, should you be concerned with your rating? I suggest relaxing a bit. If you worry too much about losing points on a bad day, you might decide to skip contests on any day when your mental preparation is less than perfect. While this may rescue your rating in the short term, such an attitude will slow your progress in the long term. The obsession with optimizing one's rating can be counter-productive and cause hurt feelings.

Having said that, having your rating on the line can be a good motivator during a contest, simulating some of the pressure of a major event such as an ICPC regional. In addition, now that you understand what the titles mean, ratings are a nice way to track your progress and feel good about the cumulative effect of your training. You've earned it! Just as in long-term stock investment, resist the urge to react to daily fluctuations: focus on the big picture!

Finally, keep track of your motivations, whatever it is that you hope to get out of the experience: be it to prepare for whiteboard interviews, to be exposed to ideas for computer science research, to play a competitive mental sport, to meet other problem solvers, or just to keep your mind active with fresh puzzles. Ratings may correlate with these things, but of course they're not everything. For good or ill, we tend to rank people a lot in our schools and workplaces. At least here, we all know that this is fundamentally a game we're playing, and the criteria and methods for success are well-publicized. Good luck and have fun!

I get higher position so I'm happy!!!

So, how does the system work?

Congrats :D

I suppose I should get to that topic soon! I wrote a paper describing it in the linked repo, but I admit it's not very well-written right now.

UPDATE (May 2020): it's written now, I added the link!

Wow, the description for Expert somehow is quite motivating even when I know I am still mediocre at competitive programming right now.

Very informative post.

It's easy to downplay the meaningfulness of Expert when Codeforces has so many titles above it, but I think it's important to put things into perspective: it's a skill the vast majority of professionals don't have, even at Google, whose interview process is famously (or perhaps infamously, for those who disagree with the practice) contest-like! It's much more common to work on LeetCode or Hackerrank, where the problems are more standard, like something from a textbook rather than a contest. So, cheers to you!

Well I suppose it doesn't take much to become an Expert either. Doing 4-5 problems from Div. 3 contests fast enough will make you Expert in 2-3 contests.

I am preparing for interviews right now, and I actually find these "textbook" questions on a level harder than the ones I am able to solve on Codeforces.

Hmm perhaps, but you get more help in interviews, right? I don't know if it's different in India, but it seemed to me that Specialist students in Canada tend to place well in ICPC regionals and get nice internships in the Silicon Valley. Of course, their preparation wouldn't consist solely of contests.

Yes, that's almost true of India as well. I suppose I have an explanation for this. The problems asked in contests are not straightforward. For example, you can practice standard interview dynamic programming questions (Kadane's algorithm, Longest Common/Increasing Subsequence and variations, etc.), but you wouldn't be able to do any DP questions in a Codeforces contest just from this preparation. So someone who has some knowledge of DP and someone who has none will both fail to solve that DP question, and their ratings will in general be similar. The same goes for Segment Trees etc.

So people with similar rating can have drastically different knowledge of data structures and algorithms.

In fact, I have mostly solved Ad-Hoc problems fast enough to become Specialist, and when I got a contest, where by chance I could solve one of the tougher questions (usually Math or some non-trivial Greedy/implementation problem), I became Expert.

These interpretations are really nice!!

Good post :)

I want to add that these interpretations don't work if you have taken part in too few contests (fewer than 5 or so), especially for low-rated coders. Many people took part in 1-2 contests, lost some rating, but didn't reach their actual rating. This also explains why there are more pupils than newbies on CF.

Thanks! I'll have to properly explain Elo-R at a later date, but one modification is that displayed ratings are actually mu - 2*(sigma - sig_limit), where sigma starts at 350 and eventually approaches sig_limit = 80. Thus, unrated players are at 960 instead of 1500, rendering even the lower titles somewhat of an achievement :)

So the “real rating” is still 1500?

If by "real rating" you mean the center, yes. But since the belief distribution is so wide, we can't say with any confidence that their skill is "really" 1500. Microsoft's TrueSkill does this as well. The high starting sigma allows ratings to converge very quickly in the first few rounds.
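As a quick check of the arithmetic in this exchange, here is a minimal Python sketch of the displayed-rating formula mu - 2*(sigma - sig_limit) quoted above. The function and constant names are made up for illustration; only the formula and the values 1500, 350 and 80 come from the comments. How sigma shrinks from round to round is not described here, so the sketch does not model it.

```python
SIG_LIMIT = 80.0   # limiting uncertainty, as quoted in the comment
SIG_START = 350.0  # starting uncertainty of a new player
MU_START = 1500.0  # starting center of the belief distribution


def displayed_rating(mu, sigma, sig_limit=SIG_LIMIT):
    """Conservative displayed rating: mu - 2 * (sigma - sig_limit)."""
    return mu - 2.0 * (sigma - sig_limit)


print(displayed_rating(MU_START, SIG_START))  # 960.0: a brand-new player
print(displayed_rating(1500.0, SIG_LIMIT))    # 1500.0: sigma at its limit
```

The penalty vanishes once sigma reaches sig_limit, so a long-time competitor's displayed rating equals the center mu.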

So you're trying to do a lower bound estimation, such that you can guarantee a X% likelihood for a person to be of their displayed rating or above, right?

Have you looked at Bayesian Elo? I.e. calculating Elo using a maximum likelihood estimator. I think it's a great way to improve convergence of classical algorithms and also get a good error estimation. If you haven't already seen it, I suggest you check out Whole History Rating which makes use of that. I also have an implementation if you want to try it.

Thanks for the paper! My system is a Bayesian approximation as well. Incremental systems are less accurate, but global updates compromise interpretability and consistency: we might not like to retroactively change players' rating histories based on recent contests they didn't participate in!

Elo-R takes advantage of some properties of programming contests to try to get the best of both. Most of my proposed improvements come from making more principled approximations with the logistic distribution, which help with convergence and outliers. Some of the issues noted in the paper have negligible impact on programming contests: for example, we don't have isolated cliques of competitors that only play against each other. Divisions are very large and overlap substantially, so estimates of performance within a round are fairly reliable without retroactive adjustments. However, the system does store many past performance scores per player, instead of just a rating and standard error.

Yes, WHR as a whole is definitely not the right fit here, partly because of unnecessary features and partly because the complexity, especially retroactivity, can be very confusing to users. I just thought some concepts might still be interesting, if not for ranking users directly, then simply for making nice comparisons.

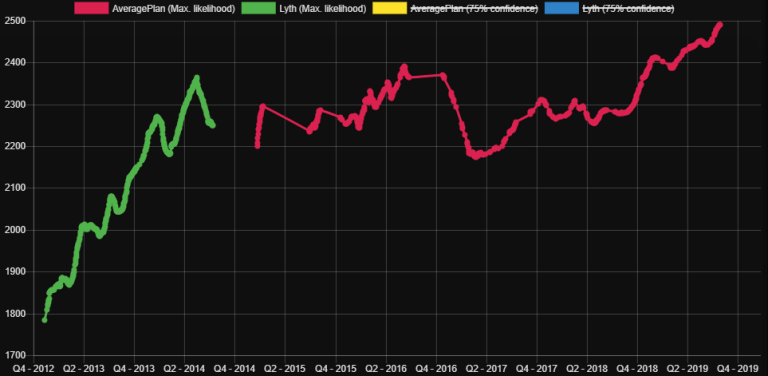

As a little inspiration, here's an example plot done with WHR in a 1v1 setting, comparing two accounts controlled by the same person: Source

Thanks to the retroactivity, it is usually easy to differentiate quick learners from people who've had previous experience. This is with the expected Elo variance per day set to 500, instead of 14 as suggested in the paper. Still, the graph can smoothly model periods of skill change as well as stagnant phases.

I like the descriptions, but I wouldn't take them too seriously in relation to IOI/ACM. Coming to Codeforces after having done both, I do feel that the problems here are noticeably different. This is to be expected, seeing that purely algorithmic tasks on here wouldn't be much more than a test of your templates.

Sorry, is it rated?

I increased the thresholds at the top, for symmetry reasons and also to future-proof against the gradual rise at the top!

UPDATE (May 2020): 9 months later, the number of Elo-R IGMs remains the same, 6! I'm sure there will be more as the community gets stronger, but at least there doesn't seem to be rampant inflation. In fact, the mean rating is slowly decreasing. Having said that, it's tough to reliably compare the strengths of past vs present contestants...

Thank you for such great explanation !!

In the previous Div. 2 contest, my friend and I both submitted a correct answer to the same problem, yet I got +65 rating and he got just +24. Is there some other criterion behind the different rating changes? I'd like to know why, please help me!

Me: I am so stupid, I read a question wrong and stress half a day over it.

Some random blog on CF: So you are an Expert. You have algorithmic expertise exceeding that of a typical professional. As such, students and colleagues may refer to you for guidance. In some local circles, you might be considered an algorithms guru of sorts. On the other hand, your ambition may have driven you to surround yourself with even stronger algorithmists! Perhaps you're thinking seriously about competing internationally, at events such as the IOI or the ICPC World Finals. In that case, your journey has only just begun...

Really felt good after reading this.

Also gained motivation for doing better than our previous best. Thanks a lot.

It felt as if zodiac signs were being described.

Is there a way to see the updated stats?

The Codeforces stats and ratings list are already updated! The repo also allows you to compute all the ratings yourself if you like. Please note that the algorithm has been tweaked slightly from the first version of this blog post.

Thanks

Is it just me or does anyone else feel that it would be more suitable to give the top three ranks a different color? Like Legendary Grandmasters could be scarlet, IGMs could be cranberry red and Grandmasters could be peach?

Similarly for the Masters and the International Masters: the International Masters could take up the orange color to distinguish themselves from Candidate Masters.

Can we recreate the percentile table with modern data?

Truly agreed, getting expert in 2023 is different from getting expert in 2019. You can’t just have a lucky div3 from a new account and that’s it

At least for now those descriptions of Codeforces titles make sense

Is there a query in the API for getting the current CF rank (based on current rating)? Even a method to calculate rank from rating, knowing the current number of active users, would be helpful.

One should participate in contests mindfully. I mean, what's the point in participating every day? I don't think one can see drastic improvement or digest the takeaways of the previous contest in less than 24 hours. At most 2 contests per week should be the upper bound.

There isn't a single contest where I didn't learn anything.

Nobody learns in the contest. Everyone learns after the contest; that's what the editorial is for. A contest is for improving your ability to apply your skills quickly. But if you do a contest every day, it becomes practice, not a contest.

From contests you learn what your weak points are, whether your current practice strategy is efficient enough, and whether you need to change it. Your approach to a contest, your mindset and so on grow by taking part in contests in real time. It's not only about learning from the problems you attempted, but also about learning how you attempted them during the contest. For example, I can solve any problem in the world with $R \le 2000$ during practice, but do I solve all of them during contest time? A big no.

I once took part in a lot of contests over about 6 months and didn't move an inch. In none of those contests was I able to solve even 3 problems. I lost interest and stopped enjoying it. If you like getting kicked every time in order to improve your speed, then go ahead, nobody is stopping you. And by the way, if you stop getting kicked, you are back to square zero. A lot of people might care about or value that big No, but I'm not one of them.

Forget about learning: I participated to the point that I even started disliking the problems of those contests. Even now, problems which I might have liked during practice would definitely not be liked by me if I had seen them during a contest. It has nothing to do with the problem; it's just the way one encountered it. It's almost similar to which style of practising you prefer: some might prefer to jump to the editorial after a certain time limit, while others might prefer to hold off on the editorial until they have no more ideas left. Agree or not, the first way has a bit of contest flavour in it, unlike the second.

It's useful to treat most contests as practice, but it sounds like you need a break. You don't have to compete at all if what you enjoy is solving problems slowly. That's probably more useful in real life applications anyway.

I got just anger, irritation and dislike of problems, and I don't remember anything other than that. There is no guarantee that I could solve again a problem I saw in a contest. I even got to the point of asking myself, "Am I learning?"

Add Tourist Title For Elo-MMR 4000+

True. The rank list needs to be updated now.

well said