In this tutorial I'll introduce potentials as it applies to directed graphs and discuss a few of its applications. I haven't seen many resources about this topic, so I decided to write one.

Prerequisites:

- Shortest path algorithms (Dijkstra and Bellman-Ford)

- For the Min Cost Max Flow section, you should be familiar with Ford-Fulkerson. But you can benefit from this blog with just the Johnson's Algorithm section if you're not familiar with flows.

Introduction

For notation, let's say there is a weighted, directed graph $$$G=(V,E)$$$. Let $$$n=|V|$$$ and $$$m=|E|$$$. For simplicity, let's assume there are no loops or multiple edges between the same pair of nodes in the same direction. For an edge $$$u\to v$$$, we will use $$$w(u\to v)$$$ to denote its weight.

Let's motivate the idea of a potential, so the definition doesn't seem to come out of nowhere. In shortest paths, you may be aware that negative edges create a lot of issues. First, it's possible that negative cycles exist. In that case, it's possible for a path to have arbitrarily small weight, and we say that $$$\mathrm{dist}(u\to v)=-\infty$$$. (By the way, we allow a path to revisit vertices. If we required a simple path, then it's NP-complete.)

Even if there are no negative cycles, the negative edges are still problematic for us. Dijkstra's algorithm can give incorrect results, and we typically use Bellman-Ford instead, which has a worse complexity. Dijkstra's is $$$O(m\log n)$$$ while Bellman-Ford is $$$O(mn)$$$. Technically, Dijkstra's algorithm can be improved to $$$O(m+n\log n)$$$ with Fibonacci heaps, but it's not very important for the purposes of this blog. I mention it anyway in case you're confused why other resources have a different complexity.

One possible idea to deal with negative edges is to "reweigh" the graph so that all weights are non-negative, and it still encodes the information of shortest paths in the original graph. Obviously, we can't just replace the weights with whatever we want. One way we can modify weights is to pick a vertex $$$v$$$, increase the weights of incoming edges to $$$v$$$ by some real value $$$x$$$, and decrease the weights of outgoing edges from $$$v$$$ by the same amount $$$x$$$. This way, any path that goes through $$$v$$$ will have the same weight as before. Therefore, the only affected paths are the ones that begin or end at $$$v$$$. But we can observe that any path beginning or ending in $$$v$$$ will just be offset by $$$x$$$, and a shortest path will remain the shortest.

Now, we have the motivation to define a potential. The idea is that we want to apply this kind of vertex offset for all vertices independently in such a way that all the new weights are non-negative.

Definition: A function $$$p:V\to \mathbb{R}$$$ is called a potential of graph $$$G$$$ if for every edge $$$(u\to v)\in E$$$, it holds that $$$w(u\to v)+p(u)-p(v)\ge 0$$$.

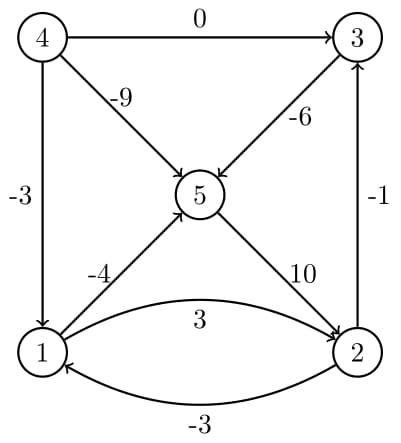

For an example, consider this graph:

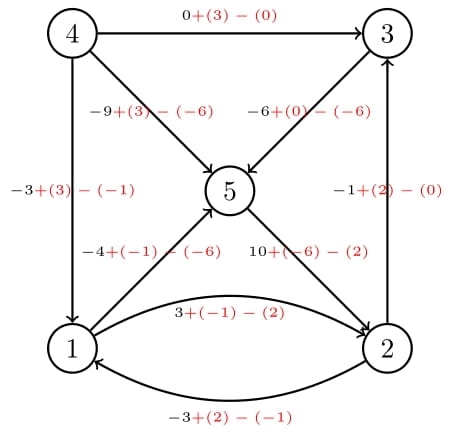

Let's apply the potential $$$p$$$, where $$$p(1)=-1,\ p(2)=2,\ p(3)=0,\ p(4)=3,\ p(5)=-6$$$, and confirm that the new edge weights are all non-negative.

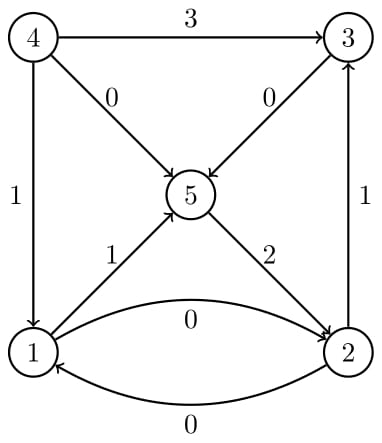

Since the new edge weights are indeed all non-negative, $$$p$$$ is a valid potential. Now, let's confirm the fact that information of path weights is preserved. For example, take the path $$$4\to3\to 5\to 2\to 1$$$. In the original graph, it has weight $$$0-6+10-3=1$$$. In the new graph, it has weight $$$3+0+2+0=5$$$, and we expect it to be offset by the difference of the endpoints' potentials, $$$p(4)-p(1)=3-(-1)=4$$$, which is correct.

Note that if a graph has a negative cycle, then you cannot create a potential for it. This is because the weight of a cycle is unchanged by the reweighing.

Johnson's Algorithm

Given our graph $$$G$$$, we would like to compute length of the shortest path from $$$u$$$ to $$$v$$$, for all pairs $$$(u, v)$$$. For simplicity, let's assume there are no negative cycles in the graph. Perhaps the best algorithm you know about for this problem is Floyd-Warshall, which works in $$$O(n^3)$$$. (It works correctly for negative edges).

Johnson's Algorithm solves this problem more efficiently for sparse graphs, and it uses the following steps:

- Compute a potential $$$p$$$ for the graph $$$G$$$.

- Create a new weighting $$$w'$$$ of the graph, where $$$w'(u\to v)=w(u\to v)+p(u)-p(v)$$$.

- Compute all-pairs shortest paths $$$\mathrm{dist}'$$$ with the new weighting.

- Convert the distances back to the original graph, with the equation $$$\mathrm{dist}(u\to v)=\mathrm{dist}'(u\to v)-p(u)+p(v)$$$.

We need to go into more detail about how steps 1 and 3 can be done. Step 3 is easier to understand, so let's address it first. The weighting $$$w'$$$ is all non-negative, so we can use Dijkstra's algorithm to get correct distances. We simply run Dijkstra's algorithm once for every vertex $$$u$$$, to get the distances from $$$u$$$ to every other vertex. Since we run Dijkstra's algorithm $$$n$$$ times, and each time takes $$$m\log n$$$, the complexity for this stage is $$$O(nm\log n)$$$. Technically, it can be improved to $$$O(n(n\log n+m))=O(n^2\log n+nm)$$$ if you use Fibonacci heaps.

Now, how do we do step 1? The key idea is that shortest path values themselves satisfy the requirement of a potential! In fact, let's fix some vertex $$$s$$$, and assign $$$p(v)=\mathrm{dist}(s\to v)$$$ for all vertices $$$v$$$. Assume for contradiction that $$$p$$$ is not a potential. Then there is some edge $$$u\to v$$$ such that $$$w(u\to v)+p(u)-p(v)<0$$$. This means that $$$\mathrm{dist}(s\to v)>\mathrm{dist}(s\to u)+w(u\to v)$$$. This means that if we take the shortest path from $$$s$$$ to $$$u$$$, then take the edge from $$$u$$$ to $$$v$$$, the total weight will be smaller than the shortest distance from $$$s$$$ to $$$v$$$. Clearly, this is impossible, so $$$p$$$ is in fact a potential.

Actually, I lied to you. The above proof is only correct if the chosen vertex $$$s$$$ can reach all vertices $$$v$$$. Otherwise, we would have infinite potentials which is not allowed by the definition. But we're almost there. Let's simply create a brand new vertex $$$s$$$, and create an edge $$$s\to v$$$ with weight $$$0$$$ for every vertex $$$v$$$. We will define the potential based on the shortest distance from $$$s$$$, and it will be correct. Note that the property of a potential is still valid if we delete some vertices and edges, so it's not a problem to remove $$$s$$$ after we compute the potential. Also note that when we add $$$s$$$ and those extra edges, we do not create a negative cycle since no edges are incoming to $$$s$$$.

Now, you might be thinking, "wait, I thought the point of a potential is to help us compute shortest paths, and now you're telling me we need to use shortest paths to compute the potential? I want my upvote back!" But remember, we're trying to compute all-pairs shortest paths. Running an algorithm like Bellman-Ford from every starting vertex would be expensive. But here, we're only running such an algorithm once, from the new vertex $$$s$$$. Then we use a more efficient algorithm (Dijkstra) for the all-pairs part. So for this step 1, we just run Bellman-Ford once on the graph with the new vertex $$$s$$$. The complexity of step 1 is $$$O(nm)$$$.

Overall, step 3 is the bottleneck and Johnson's algorithm has complexity $$$O(nm\log n)$$$ (or $$$O(n^2\log n + nm)$$$ if you use Fibonacci heap).

Min Cost Max Flow

I will present the minimum cost maximum flow (MCMF) problem, show how to solve it, then show how potentials can make it faster. If you want formal proofs, I recommend resources below. My proofs here will be business casual.

We are given a directed graph $$$G=(V, E)$$$. There are two special nodes $$$s$$$ and $$$t$$$, called the source and sink. Every edge $$$u\to v$$$ has a capacity $$$c(u\to v)$$$ and a cost $$$w(u\to v)$$$. A flow is defined as an assignment $$$f:E\to \mathbb{R}$$$ such that:

- For every edge $$$u\to v$$$, the flow sent along this edge is non-negative and does not exceed the capacity. Formally, $$$0\le f(u\to v)\le c(u\to v)$$$.

- For every vertex $$$u$$$ ($$$u\ne s$$$, $$$u\ne t$$$), flow-out equals flow-in. Formally,

The value of a flow is defined as $$$\sum\limits_{u:(s\to u)\in E}f(s\to u)$$$. The cost of a flow is defined as $$$\sum\limits_{(u\to v)\in E}f(u\to v)w(u\to v)$$$.

The maximum flow problem simply asks to maximize the value of the flow. The MCMF problem asks us to find the minimum cost flow among all flows with the maximum possible value.

Let's recall how to solve the maximum flow problem with Ford-Fulkerson. We iteratively send flow from $$$s$$$ to $$$t$$$ by finding an augmenting path, which is a path of edges from $$$s$$$ to $$$t$$$ using only edges that are below full capacity. Also, we include reversed edges that allow us to send flow "back". We can find such a path with DFS.

In MCMF, we do almost the same thing, except that we must find the shortest path each iteration. A forward edge $$$u\to v$$$ has weight $$$w(u\to v)$$$ and the corresponding back edge has weight $$$-w(u\to v)$$$. We ignore edges at full capacity (and back edges whose corresponding forward edge has no flow to send back). Casually, we can understand that shortest paths will be correct by induction. If we have an optimal flow with value $$$k$$$, then consider the optimal flow with value $$$k+1$$$. The difference will include a path from $$$s$$$ to $$$t$$$ and some cycles. A cycle cannot cause a decrease in cost, otherwise we can find a better flow with value $$$k$$$. So it's optimal to just augment a path. In particular, an optimal augmenting path must be the shortest with our given weighting.

Since this graph has negative edges, we might use Bellman Ford each iteration. But with potentials, we can do better. We already know that if we're given a potential for the graph, then the shortest path can be computed easily with Dijkstra. But when we send flow along an augmenting path, it changes which edges are at full capacity, which can make the potential invalid for the next iteration.

How can we compute the potential for the next iteration more efficiently than running Bellman-Ford every time? It turns out that we can use the shortest path results from the Dijkstra to assign the potentials for the next iteration for free. This works because the shortest distances create a potential for the edges involved, as mentioned in the Johnson's algorithm section. So we just need to check that the edges that change from sending flow do not create an issue. Suppose an edge becomes full capacity. Then it is removed from our graph for shortest path computations, and it cannot make the potential invalid. Suppose an edge was at full capacity but not after sending flow along the augmenting path. In this case, the reversed edge satisfied the inequality before sending flow, which automatically means this edge satisfies it (since $$$w(u\to v)=-w(v\to u)$$$).

Now, given a potential for one iteration we can compute it for the next iteration for free. But what about the first iteration? In many cases, the costs are all non-negative and we can just use $$$p(u)=0$$$ for the potential. Otherwise if there are no negative cycles, we can run Bellman-Ford to get the initial potential. I'm not aware of how to deal with negative cost cycles, and I haven't yet seen it appear in a problem. If you're knowledgeable about negative cycles in MCMF, I'd love to hear about it in the comments.

Ignoring a possible Bellman-Ford or similar to compute the initial potential, the complexity is $$$O(F(m\log n))$$$. With Fibonacci heap, you get $$$O(F(m + n\log n))$$$. Note that for very dense graphs, it's optimal to do the simple $$$O(n^2)$$$ Dijkstra algorithm which doesn't use any heap.

Resources

- Johnson's Algorithm (Wikipedia)

- Hungarian Algorithm (Wikipedia) Special case of MCMF with potentials

- Minimum Cost Flow without potentials (cp-algorithms) I believe their complexity analysis is wrong, but otherwise a good resource.

- Minimum Cost Flow lecture notes This includes potentials and more formal proofs.

my old comments :( go stalk someone else

Put a spoiler on it. No one wants to see a selfie as the first comment after an interesting tutorial.

stop reading

Revealing my face as a desperate attempt for upvotes is my job!

me while pressing downvote on your comment

happy that bellman ford (and spfa) are finally obsolete.

Note that this definition of potential is (up to a sign convention difference) the same as the definition of a monotone heuristic, and performing Dijkstra's algorithm on the graph modified by a given potential is the same as performing A* with the corresponding heuristic function.

EDIT: Today I learned * in links don't work.

Please don't get Dijkstracted. :) It's a great tutorial.

Just curious, from where do you guys read stuff like this? I mean, the only good source I know of is cp-algorithms. But on CF, people are actively writing blogs(which I appreciate) with topics that I cannot find much on Internet. Please share if there are sources from where I can read stuff that's a bit less known, and useful.

Experience from solving problems, reading editorials, talking with others, and other resources like books and lecture notes.

Either that or a secret website only reds know about. Pick your theory.

Well, I was thinking that maybe there are some research papers or other stuff on DSA that I might not be aware of. But i guess that's not the case after all?

Maybe the conspiracy that there is a secret website that only reds know about isn't so far off? ;)

I learnt this topic when doing some opencup — I was kind of amazed that we can do flow with $$$n \leq 2*10^5$$$ (before, I used to use flow as a black box for small constraints). This opencup was uploaded on the gym — the problem is here.

I also remember discussing MCMF with potentials with you a while back and I had uploaded my template code over here (with a sample submission if anyone feels like using it).

Nice blog.

One doubt related to MCMF part. Suppose at some iteration, some vertex u becomes unreachable from s which makes its distance infinity. In that case, how would we assign potential to that vertex in the next iteration?

If a vertex becomes unreachable then it will also be unreachable for future iterations. So it doesn't matter what we assign for its potential.

818G - Four Melodies Related problem.

I didn't get this part.

For the reverse edge $$$(v, u)$$$ we have that the potential condition holds $$$x = w(v, u) + p(v) - p(u) >= 0$$$.

For the forward edge $$$(u, v)$$$ we have that $$$w(u, v) + p(u) - p(v) = -x <= 0$$$ which mean it does not hold?

This had me confused for a while too. Then I realized that both $$$w(v,u) + p(v) - p(u)$$$ and $$$w(u,v) + p(u) - p(v)$$$ are actually going to equal $$$0$$$. The reason is this: The only possibly problematic edge would be an edge $$$(u,v)$$$ which had no capacity to send flow, but we now send some flow through $$$(v,u)$$$ and so $$$(u,v)$$$ gets some new capacity. But notice that if we are sending flow through $$$(v,u)$$$ it's because it was part of the shortest path, and therefore we know that $$$d(s,u) = d(s,v) + w(v,u)$$$, which rearranging it gives $$$w(v,u) + d(s,v) - d(s,u) = 0 \geq 0$$$. And by multiplying by $$$-1$$$ we get $$$0 = -w(v,u) - d(s,v) + d(s,u) = w(u,v) + d(s,u) - d(s,v) \geq 0$$$.

And so it is correct to define $$$d(s,x)$$$ to be our new potential $$$p(x)$$$.

Great tutorial!

I'd like to add some information on something that you mentioned at the end of your blog.

One possible way to deal with negative cycles is using the cycle cancelling algorithm which is based on, first finding any maximum flow, and then finding negative cost cycle in the residual graph and pushing flow through them. However, its complexity is something like $$$O(nm \times totalCost)$$$, where $$$totalCost = \sum_{e \in E}{cap(e) |cost(e)|}$$$, which is also bounded by $$$mUC$$$, where $$$U$$$ is the maximum capacity and $$$C$$$ the maximum cost in absolute value. This algorithm is also explained in the Lecture notes that you put in the references.

Relevant discussion about MCMF with negative cycles, as well as faster algorithms, can be found in this blog.

One comment of that blog also mentioned a problem where you can test this algorithm (the problem is in Chinese, but it is simply asking for the MCMF with negative cycles). Although the constraints seem too high for the cycle cancelling algorithm, I was able to get AC with it (I used Dinic's algorithm to find the initial flow).

Great tutorial! Kinda strange I didn't see it earlier.

One small point I would like to add to it: Ford-Bellman is not the only method to compute potentials for the first iteration. In some problems, the flow network is acyclic if we don't consider back-edges — and in these cases, we can prepare initial potentials with dynamic programming instead of Ford-Bellman. This usually helps if the problem asks to find a small flow (for example, if the flow value is at most $$$k$$$, the solution would work in $$$O(k m \log n)$$$ or $$$O(k n^2)$$$, without $$$O(nm)$$$ coming from Ford-Bellman). Note that the network has to be acyclic before the first iteration though.

Another Practice Problem for Johnson's Algorithm https://atcoder.jp/contests/abc237/tasks/abc237_e

How to generate all paths that resulted in the final flow/cost answer?