To whom it may concern,

Today I was reading some recent problems when I stumbled upon this div2B. 1956B - Nene and the Card Game. At first, it seems like an innocent game theory problem, until I read the following sentence.

Nene is very smart so she always selects cards optimally in order to maximize her score in the end of the game (after $$$2n$$$ rounds). If she has several optimal moves, she selects the move that minimizes your score in the end of the game.

This struck me as a bit odd, because in most game theory problems on Codeforces, it's a win/lose/draw game, or the player wants to maximize the difference between their score and their opponent's score, or they want to maximize their own score and all outcomes have the same sum of scores for the two opponents (which is equivalent). But Nene's objective is most peculiar, where she doesn't care about how many points her opponent has at all, until she cannot get any more for herself and then it is the only thing she cares about.

Now, what does the problem say is the strategy of Nene's opponent (you)?

...what is the maximum number of points you can get by taking your turns optimally?

Hmm, it seems that you do not care about Nene's score, although she cares about your score. Oh well.

At this moment I thought, "is this problem even well-defined?" What does an optimal strategy mean in this game? We seem to take it for granted that it even makes sense to talk about an "optimal strategy" at all. There are a few possible concepts of what an optimal strategy could mean. Maybe it means playing the move that gives you the best guaranteed score, assuming the worst case for your opponent's decisions. Or maybe it means playing the move that gives you the best outcome assuming that your opponent is rational and plays optimally. Yet another interpretation could involve Alice and Bob agreeing to collaborate, if they see a path that makes them both better-off than if they competed.

In most game theory problems on Codeforces, we are dealing with a two-player, zero-sum (or constant-sum), turn-based, finite, perfect-information game. In these cases, multiple interpretations of optimal play turn out to be equivalent. The one where you try to optimize your guaranteed score is called Maximin and the one where you try to optimize your score assuming a rational opponent is called Minimax. Both of these solution concepts are equivalent for this class of games.

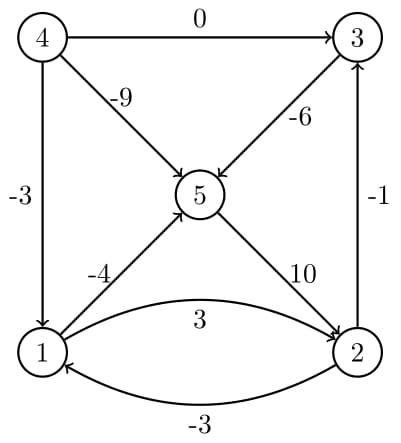

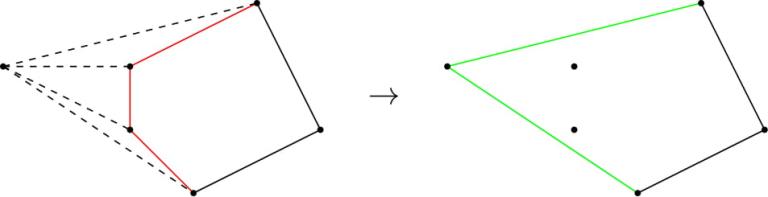

However the idea of "maximize your score first, then minimize your opponent's score second" seems to imply that we are outside of zero-sum territory (otherwise maximizing your own score would be enough). We cannot guarantee that Minimax = Maximin in non-zero-sum games. For example, consider this simple example game.

Alice goes first.

Choice 1: Alice and Bob both get 1 point, game ends.

Choice 2: Go to Bob

If Bob has a turn:

Choice 1: Alice gets 0 points, Bob gets 1 point, game ends.

Choice 2: Alice gets 2 points, Bob gets 2 points, game ends.

- Maximin: Suppose Alice wants to maximize her guaranteed score, assuming the worst case. Then she should make choice 1, because she can guarantee 1 point, while the worst case in choice 2 is 0 points.

- Minimax: Suppose Alice wants to maximize her score assuming that Bob is rational and will try to maximize his own score. Then she should go with choice 2, since Bob will rationally get 2 points for each of them, which is better than 1 point.

In this example, we see how non-zero-sum games can result in different optimal strategies depending on which convention we choose. In general, the problem statement would need to be clear about which convention we are using.

So, what about this div2B problem? Is it well-defined? Technically, yes it is. After analyzing the mechanics of the game, you will notice that the sum of scores of the two players is the same in all outcomes. In fact, for each type of card, one of them will be played first and the other will be played second, resulting in $$$1$$$ point for one of the players. So the sum of scores will always be $$$n$$$ in every outcome, meaning it's a constant-sum game in disguise.

So, what lesson can we take from my incoherent rambling? Mainly, optimal play in game theory is surprisingly nuanced. In my opinion, problems on Codeforces should be immediately clear that they are well-defined, and this div2B problem requires a key observation in order to realize that it is well-defined. Maybe when we have zero-sum games in the future, we should make it clear that it is zero-sum, even if it's just a statement like "We can prove that the sum of the players' scores is the same in all outcomes", and maybe linking to a resource about the definition of optimal play in zero-sum games.