... without doing much of anything.

I've been obsessed for the last two days about problem Data Centers from EGOI 2022. In particular, it has a subtask where $$$1 \leq N \leq 10^5$$$ and $$$1 \leq S \leq 5000$$$, where it seems $$$O(NS)$$$ wouldn't fit TL. I've been conjecturing that using treaps would amortize to good complexity, and after two days of burning grey matter, I've finally found a proof, and it's pretty cool to make a blog out of it.

The problem basically starts with an array $$$[v_1, v_2, ..., v_N]$$$, where $$$1 \leq v_i \leq V$$$ for all $$$i$$$. It then asks us to iteratively simulate the operation: "Subtact $$$x_i$$$ from the biggest $$$m_i$$$ elements.". All this while keeping the array sorted.

We'll show that just using treaps (or any BBST for that matter) yields a good amortized complexity. More exactly, we'll prove $$$O((N + Q) \log V \log N)$$$, and it should actually be better in practice.

Naive solution with treaps

Of course, the "naive" solution (which is much of what we'll implement here), does three things:

- Extract the $$$m_i$$$ suffix of the sorted array (we'll call it $$$B$$$, and the remainder $$$A$$$)

- Subtract $$$x_i$$$ from all elements of $$$B$$$

- Merge the resulting sequences $$$A$$$ and $$$B$$$

Using treaps, it's easy to see that steps 1 and 2 can be done in $$$O(\log N)$$$ via split and lazy propagation. Step 3 is the heaviest, requiring potentially $$$O(N)$$$ and maybe even $$$O(N \log N)$$$ steps depending on implementation.

Interweaving number

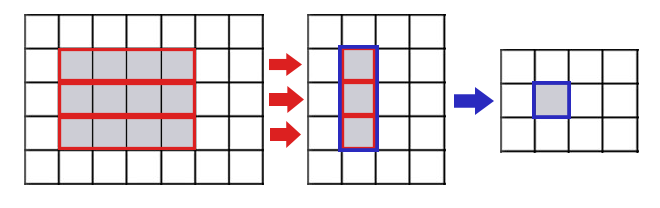

For a given merging process of sequences $$$A$$$ and $$$B$$$, let $$$k$$$ be the number of interweaving segments. See example below:

A = {2, 5, 15, 25, 30}

B = { 7, 9, 12, 21 }

In the example above, we have $$$k = 5$$$ interweaving segments (of lengths $$$2$$$, $$$3$$$, $$$1$$$, $$$1$$$, and $$$2$$$ respectively).

First, it is rather straightforward to write an implementation of merge on BBST that has complexity $$$O(k \log N)$$$ (via $$$k$$$ binary searches and $$$k$$$ splits).

Secondly, there can be at most $$$O((N + Q) \log V)$$$ merging interweaving segments over an array of size $$$N$$$ and $$$Q$$$ queries.

Potential method

For a given (increasing) sequence $$$A = [x_1, x_2, ..., x_n]$$$, let's define:

(all logarithms are base-2)

Let's suppose we are merging two sequences $$$A$$$ and $$$B$$$, and there are $$$k$$$ interweaving segments. See example below:

<-d1-> <--d2--> <--d3--> <---d4--->

A = {[--a1--], [-a2-], [--a3--]}

B = { [---b1---], [-b2-] }

In the picture above, the segments [-----] are placeholder to some sequence of elements. Numbers $$$d_i$$$ are the distances between numbers, e.g. $$$d_1 = first(b_1) - last(a_1)$$$.

The difference between potentials before and after merge $$$\Delta \pi = \pi (A) + \pi (B) - \pi (A \cup B)$$$ is:

Nonetheless, we can see that:

(more naturally, the "worst case" is when doing a Faro shuffle, i.e. interweaving element by element).

However, because $$$\log$$$ is concave, we can use that $$$\log (\frac{a + b}{2}) \geq \frac{\log a + \log b}{2}$$$. Reformulating, we obtain:

We'll add and subtract $$$\log (d_k + d_1)$$$ to inequality $$$(2)$$$, use inequality $$$(3)$$$, and simplify everything to finally obtain:

(thanks freak93 for the easy one-liner about concavity of $$$\log$$$ )

The initial potential of the sequence is bounded by $$$N \log V$$$, and each merge operation adds at most $$$\log V$$$ to the potential, yielding a total of $$$(N + Q) \log V$$$ potential. Then, the number of interweavings is at most $$$(N + Q) \log V$$$.

Extra

It's not hard to see the power of this result. In essence, you can do all sorts of operations like: adding/subtracting some value $$$x$$$ to an arbitrary subsequence, dividing subsequence by some value, square rooting subsequence, and so on. All while keeping the sequence sorted.

Also, doing $$$\log N$$$ binary searches on treaps might be inconvenient. Another approach that is easier to code and faster is to use the treap union function (source: Wikipedia):

function union(t1, t2):

if t1 = nil:

return t2

if t2 = nil:

return t1

if priority(t1) < priority(t2):

swap t1 and t2

t<, t> ← split t2 on key(t1)

return join(union(left(t1), t<), key(t1),

union(right(t1), t>))

Conclusions

- It feel like this is a bit more general method to this

- Replace join treap operation in your library with merge

- I feel like this trick is well known in some countries...