Doing VK Cup 2015 we faced with an interesting problem: how to calculate rating changes for team members?

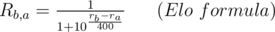

In short, currently the ratings are calculated using the following rules: * each contestant has some ratings ri before the round, * our goal is to follow Elo Rating System idea: participant a wins participant b with probability:

- as we know chances to win/loose for any pair of participants, we can calculate the expected place (seed) as a sum of winning probabilities,

- if participant took place better than expected then we need to increase rating, if worse then we need to decrease rating.

Above items are just some general rules, but we have some anti-inflation corrections and heuristics. BTW, we are moving forward to rethink rating formulas and open them. But the question now is not about that, but about how to calculate the rating of a team.

It is natural to generalize current ideas, but we need a method to calculate the rating of a team knowing ratings of members. If there is such function, say teamRatings(r1, r2) (for 2-member teams), it will be naturally to use it to calculate expected place of a team. And depending on actual place, member's ratings should be adjusted.

That's the idea came to mind of the Codeforces team during lunch.

For sure, the function teamRatings(r1, r2) should satisfy some constraints:

- teamRatings(r1, r2) ≥ max(r1, r2),

- if we compose a team of tourist and somebody not very skilled (say, green participant), rating of team should be close (a little more) to tourist's rating.

I was offered the following funny model. Image there is team AB composed to two members A and B. Let's try somehow to compare it with individual participant C. In my model instead of single contest "A+B vs C" we will make two contests "A vs C" and "B vs C". If at least in one contest of two won member of AB, then the team won. If both contests won C, them C won.

This model doesn't consider any team-work, but it fairly tries to consider chances of both participants A and B to overcome C.

Now we know rating of C and winning probability of AB over C, we can inverse Elo formula to find rating of AB.

There is a trick that calculation of team rating depends on opponent C. How to choose the most relevant opponent C? It is easy to show that changing rating of C the calculated team rating changes monotonically. I like an idea to choose such rating of C that calculated rating happens to be equal to C. In other words let's use such opponent that equally skilled compared to the team AB. Binary search helps to find such opponent's rating (closed formula also exists).

So we following code aggregates ratings of several individual participants to the single value:

long double getWinProbability(long double ra, long double rb) {

return 1.0 / (1.0 + pow((long double) 10.0, (rb - ra) / 400.0));

}

long double aggregateRatings(vector<long double> teamRatings)

{

long double left = 1;

long double right = 1E4;

for (int tt = 0; tt < 100; tt++) {

long double r = (left + right) / 2.0;

long double rWinsProbability = 1.0;

forn(i, teamRatings.size())

rWinsProbability *= getWinProbability(r, teamRatings[i]);

long double rating = log10(1 / (rWinsProbability) - 1) * 400 + r;

if (rating > r)

left = r;

else

right = r;

}

return (left + right) / 2.0;

}

Once again, I understand that this model is not absolutely correct. But I don't think that absolutely correct model exists. I think that this model is reasonable and has a right to exist. What do you think?

tourist > Petr+Petr?

This is a philosophical question. But why not? Ratings differ for almost 200. It means that Gennady overcomes Petr with probability ~0.75.

The intuition suggests that a dual-Petr team should solve a contest faster than a single-tourist team because of twice as much problem-solving threads. :)

What rating would team consisting of 228 Bredors have?

2773

I think that this model is a pretty good first step, it essentially simulates a thinking contests where teammates try to solve the problem individually: the lone programmer has to come up with the idea faster than the fastest member of the team. This doesn't take into account two things:

However, one think is clear: if you keep adding participants their added value decreases. Your formula already gives less probability of winning a team of 3x2000 against a team of 2x2000 than a team of 2x2000 against a team of 1x2000. Nevertheless I think that a better model would raise getWinProbability to a coefficient that decreases from 1 to 0 (sorting the teammates first).

I also feel your model is reasonable, but I would ensure that in those first contests the rating of lone programmers as a group doesn´t change and the same for team programmers, which,if both groups are large enough, is a reasonable assumption.

I once thought about log(exp(rating_a/C) + exp(rating_b/C))*C. We may add D*(sz-1) points to the rating if team size is sz. I suggest C=400 and D=200.