Hello!

I am pleased to report that the last two Codeforces rounds went quite well in terms of how the system performed, and I am very glad about it. I spent these days and nights in a profiler, fixing the code and researching MariaDB settings.

In addition, I managed to set aside several hours on Sunday (to be honest, until Monday morning) to finish a long-planned innovation.

Meet diagnostics for C++ solutions!

Many Codeforces visitors are already tired of questions from less experienced participants: "Why does my solution fail on some test on the Codeforces servers when it works correctly if I run it locally? You have the wrong compiler/servers!" In 99% of cases this is an example of undefined behavior in the program. In other words, the program contains mistakes that, due to a number of circumstances, do not show up when it is run locally but do show up when it is run on the Codeforces servers.

Sometimes it's not easy to notice such a mistake. A small array overflow can lead to an incorrect answer on a test or to a runtime error.

g++ and clang++ have remarkable tools called sanitizers. They let you compile a program in a special mode so that, while it runs, it is checked for undefined behavior (and some other errors), and any that are found are printed to stderr. Dr. Memory (similar to valgrind, but for Windows) offers similar functionality: it runs the program in a special mode to detect errors. Under either of these diagnostic launches, performance suffers tremendously (the program runs 5-100 times slower and requires more memory), but it is often worth it.
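As a rough illustration of what a sanitizer catches, here is a minimal sketch. The function name `sum_first` and the file name `demo.cpp` are made up for this example, and the build line uses the documented GCC/Clang options `-fsanitize=address,undefined` and `-g`; these are not necessarily the options used on the Codeforces servers.

```cpp
// Possible sanitizer build on Linux (an assumption, not the Codeforces setup):
//   g++ -std=c++17 -g -fsanitize=address,undefined demo.cpp -o demo
#include <cstddef>
#include <vector>

// Sums the first `count` elements of `values`.
// There is deliberately no bounds check: if count > values.size(),
// the read values[i] goes past the end of the array, which is
// undefined behavior.
long long sum_first(const std::vector<int>& values, std::size_t count) {
    long long total = 0;
    for (std::size_t i = 0; i < count; ++i) {
        total += values[i];
    }
    return total;
}
```

With a valid `count` the function behaves normally. A call such as `sum_first(v, v.size() + 1)` may appear to work in an ordinary build, while a build with AddressSanitizer would typically stop and report a heap buffer overflow together with the source line.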

Now automatic diagnostics will in some cases head off a question like "Why doesn't it work???" by pointing out the error or its symptoms!

If your solution:

- is written in C++,

- finishes with a "wrong answer" or "runtime error" verdict,

- ran extremely quickly on this test and consumed little memory,

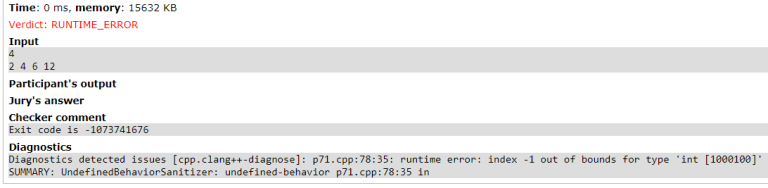

then it will be rerun using special diagnostic compilers (clang++ with sanitizers and g++ with Dr. Memory). If an error occurs during this run, the diagnostics log will be displayed in the test details in the "diagnostics" section. The log is a technical report in English, but it will often point you to the error: frequently it contains the cause of the mistake and even the source code line. If no diagnostics are displayed, then at least one of the conditions above is not met or the diagnostics did not detect any errors.

Example of the diagnostics: the report says that there was an out-of-range array access at line 78.

Thus, the diagnostics will sometimes help you find mistakes such as out-of-bounds accesses, signed integer overflow, uninitialized variables, and so on. Read the diagnostics carefully and do not make such mistakes in the future!
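Signed overflow in particular is easy to miss, because the result often looks plausible. One way to avoid it is to check the operands before adding, as in the sketch below. The helper name `checked_add` is hypothetical, and the code assumes C++17 for `std::optional`.

```cpp
#include <limits>
#include <optional>

// Returns a + b, or std::nullopt if the sum would not fit in int.
// The range check is done before the addition, so the addition itself
// never overflows and no undefined behavior occurs.
std::optional<int> checked_add(int a, int b) {
    constexpr int kMax = std::numeric_limits<int>::max();
    constexpr int kMin = std::numeric_limits<int>::min();
    if (b > 0 && a > kMax - b) return std::nullopt;  // would exceed kMax
    if (b < 0 && a < kMin - b) return std::nullopt;  // would go below kMin
    return a + b;
}
```

In contest code the simpler fix is usually to do the arithmetic in `long long`; the explicit check is mainly useful when the wider type could overflow as well.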

I hope your programs do not fail, and I hope the innovation will be useful!

There are big plans for other useful ways to apply such diagnostics. Stay tuned, there will be more innovations soon!

P.S. The diagnostic compilers are also simply available for use. For example, you can use them on the "Custom invocation" tab. Remember that in diagnostic mode a program runs many times slower and consumes more memory.

It's all good, of course. But please fix the issue with virtual participation in gyms and contests.

UPD: Fixed

What is the issue? Please, write the details.

When I register for virtual participation, it seems that the contest has already started. However, there is no countdown.

Fixed. Thank you for the information.

Sometimes when we participate in a Gym contest as a team, I get the message shown in the attached photo, but my teammates don't see that error, so the problem might be on my system. I couldn't find a solution. What might be the problem?

I have a somewhat personal issue. When I try to hack a solution in a contest, the source page asks me to download Adobe Flash Player to load the page. (I have hacked some solutions before and did not have this issue, so it seems to be an upgrade.)

But downloading that program is blocked in our country. So, is it possible to return to the previous version of that page, please?

UPD: My previous hacks were in educational rounds, so I was able to access the solutions by submission ID. So it may not be an upgrade as I said. But I still hope this issue will be considered.

I believe this will be fine if you use the Mozilla browser for hacking.

I'm really using it.

Try using Google Chrome.

I will do this, but I'm wondering why the browser would solve this problem. Does the browser have features that can do what Adobe Flash Player does?

Mozilla Firefox requires a Flash Player installation to run Flash applications, but Google Chrome comes with the Adobe Flash Player plugin. (link for reference)

It solved my problem. Maybe it will solve yours too. :)

OK, Thank you very much :).

Thank you anyway for your advice.

same here

Thanks MikeMirzayanov.

Great work! I see that you use -O0 for clang and gcc with diagnostics. Sanitizers work well even in release mode (at least on Linux). That's how I typically use them and stack traces always were on point as far as I remember. Is Windows different?

If you got clang 5.0 to work, maybe it is time to replace MSVC 2010 with Clang? Clang tries to support MSVC compiler extensions, so for most people there would be no difference. It is not yet as mature as Clang on Linux or Visual Studio, though. Alternatively, upgrade MSVC to 2017, since you now have it installed anyway.

+1 for MSVC 2017

So, basically this update gives an opportunity to see pretests during contest?

Codeforces shows diagnostics only if you can view judgement details on tests. Am I right that during a round you can't see submission judgement details on pretests?

So, the new diagnostic run will happen only in upsolving, not during a contest, right?

Edit: Or some wrong answers will turn into runtime errors, but people still won't see the reason?

It also works during a contest, but the results are not available to participants. I plan to show the detailed report on example tests only.

Diagnostic launches do not replace the verdict; they only add a diagnostics message to the report if there is one.

>> I have plans to show detailed report on example tests only.

Bad idea. Everyone should be equal. And it should be also disabled in custom tests, at least during contests.

Or show me my out-of-bounds errors when I write in Pascal, show me my `Integer == Integer` or `treeSet.remove(badObject)` warnings when I write in Java.

Nobody forbids you switching to C++. Whining about the advantages of C++ because you chose a different language is kind of unjustified. C++ has had such diagnostics and UB detectors for some time already; this just makes them a little easier for beginners to use.

Too fat. Java has had PMD since ancient times, and so what? Mike isn't going to enable it. C++ users will get the advantage of a third-party tool executed on samples, and that's unfair.

Does it bother you that I write contests on Linux with C++ sanitizers switched on? I don't see any problems with detailed report for example tests — anyone can run solution locally and get the same information.

The rules vary between sites, each with its own definition of fairness between languages. So this is not an absolute point, and we shouldn't stop trying new variations. You just need to get used to the new rules, and then you might call the absence of sanitizers unfair.

Java already has no undefined behavior: in Java you always get a run-time error for an out-of-bounds access. This update tries to close the gap, not to create a new one.
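For comparison, C++ can opt in to the same kind of guaranteed failure: `std::vector::at` checks the index and throws `std::out_of_range`, while `operator[]` does not check. A minimal sketch follows; the helper name `describe_access` is made up for this example.

```cpp
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Reads values.at(index) and describes the outcome.
// at() validates the index and throws std::out_of_range when it is
// invalid, so a bad index becomes a well-defined, catchable error.
std::string describe_access(const std::vector<int>& values, std::size_t index) {
    try {
        return "value " + std::to_string(values.at(index));
    } catch (const std::out_of_range&) {
        return "out_of_range";
    }
}
```

The check has a small cost on every access, which is presumably why `operator[]` stays unchecked by default.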

The feature is great, but I have a question. You say "the program is executed 5-100 times slower and requires more memory", and you are planning to enable it during contests. Wouldn't this increase the risk of rounds being ruined by a long submission queue?

Can I ask a question that has nothing to do with the topic?

Often when I try to view my own code in the standings, it fails to load.

But I found that I can successfully see the code of others.

And I found this in the console.

That is generally caused by the GFW.

MikeMirzayanov Hi, I would like to request a new feature that I hope you can spend some time considering. I think Codeforces should let users judge a single test of their choosing (in practice mode, of course). I have debugged quite a few problems on Codeforces, and every time I get a WA or a TLE on a big test, after debugging I have to resubmit and wait for my code to run through all the previous tests. That is not only time-consuming for me but also puts more pressure on the judging system. I wonder why such a simple feature hasn't been added to Codeforces, since it would bring enormous benefit to both users and the judging system. Thank you for reading, and if this feature can't be implemented for some reason, please state the reason.

Ohhh this feature is amazing!

Sorry for the off-topic comment, but we always complain about this issue, and it hasn't been fixed yet.

When a user turns on coach mode, all of their submissions disappear from the standings. They face the same issue when they add a gym contest to a group that they manage.

All of us will be pleased if you fix this bug soon.

Thanks

I think these are intended.

So that problemsetters can test some submissions without polluting the standings.

This would help me so much at IOI...

We live in 2017 and CF is in 3082 now !

Can we know the exact options with which the code will be compiled to get these diagnostics? (I'm sorry if you've already published them, I couldn't find them).

I believe it will be really useful when participating in official ICPC/Olympiads contests.

Thank you!

Just read the manual https://gcc.gnu.org/onlinedocs/gcc/Instrumentation-Options.html https://clang.llvm.org/docs/index.html. Read Codeforces http://codeforces.net/blog/entry/15547 and don't repeat newbie mistakes http://codeforces.net/blog/entry/54610 .

Thank you for pointing to these links. However, the exact diagnostic options are still relevant and important, because these options have bugs that vary by platform, for example "Map Erase causes a crash on MinGW" and "Crash on cin or getline to empty string on MinGW gcc with Debug mode". Also, not all diagnostic flags work well with each other, more sanitizers are added over time, and it helps to know the exact compiler options, as in "About the programming languages", in order to replicate the results locally.

(I like to argue, so take this comment lightly.) Knowing the exact diagnostic options is relevant only in very rare cases. For the purpose of finding bugs in your own code, as asked above, the exact flags aren't necessary. Just enable everything that your toolchain supports and that doesn't cause too many false positives. Some combinations of diagnostic flags might not work well together, but to learn that you should read the documentation instead of blindly repeating what Mike chose for the Codeforces servers for reasons that might not be relevant to your setup. More sanitizers being added is even more reason to read the release notes for the latest toolchain version available on your computer instead of using the exact options from a compiler on Codeforces that is a few years old. The whole point of sanitizers is to replace undefined behavior, which behaves unpredictably in different configurations, with intentional safety checks that have clear error messages and repeatable behavior.

Not many reasons remain for needing the exact options to replicate results locally. One of them is compiler and standard library bugs. Those occasionally happen, but they are rare. There might be one or two real compiler bugs a year found on Codeforces, compared to one bug a day in a solution that can be found by reading documentation or by using a sanitizer or other error detection tools. Compiler bugs that only happen when a sanitizer is used should be rarer still.

Although there are usually other solutions, I wouldn't complain if the information about the compilers and flags in use were generated automatically by the same code that prepares the compiler calls, and was thus always up to date. CMS does something like this.

I just saw that some people accidentally used the diagnostics compiler for contest submissions and got very confused. Perhaps don't let us pick it for submissions during contests?

It may make sense to submit under diagnostics during a contest: if you think the first several tests are easy, you can submit with this compiler until you get AC/TL and then resubmit with the usual one.

I don't know if this would be practical or not, but it would be nice to run the diagnostics on all submissions for at least one test case regardless of whether they produce the correct answer or not as it's annoying for me to see people getting away with undefined behavior like small array out-of-bounds accesses, and also memory leaks. In my opinion, even if these programs produce the correct output, they should still be considered wrong.