Introduction

As AI use increasingly sparks debate within competitive programming communities, we aim to offer some general advice on catching AI-assisted cheaters by examining a specific user and his submissions in yesterday's contest, Codeforces Round 972. As MikeMirzayanov has stated, using AI during contests is unacceptable except in the cases outlined in this blog.

We examine hypeskynick's submissions both before and during yesterday's contest. We present evidence in four categories that illustrates suspicious behavior and points to likely misconduct. We chose this user because, unlike blatant cheaters, he appears genuine on the surface; on closer examination, however, that impression does not hold up. For comparison, we refer to AryanDLuffy, who is known to have blatantly cheated using OpenAI o1-mini, per this blog.

Stylistic Differences

Code that someone did not write themselves often differs stylistically from their own. Programmers develop consistent stylistic habits as they learn a language and use it more frequently. Thus, it is suspicious when a user's in-contest submissions depart stylistically from their typical submissions.

Regarding AI, OpenAI's o1 has likely been trained on a large body of code that extends well beyond competitive programming. As a result, its output often shows the kind of correctness and formality expected in practical, production-oriented programming, which is rare in competitive programming.

Let's examine hypeskynick.

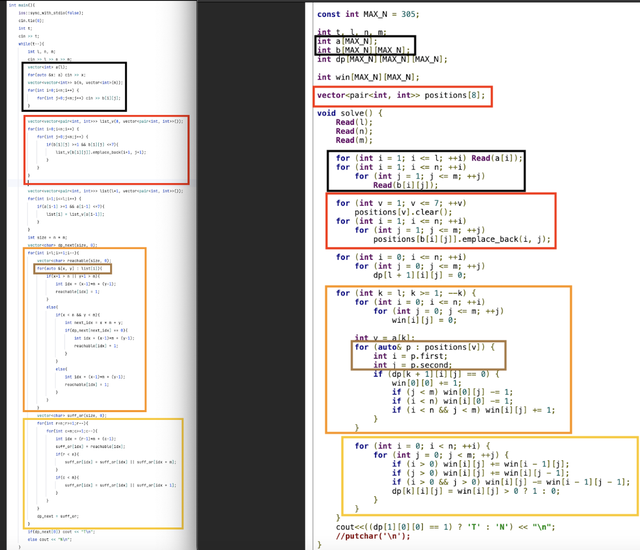

In both his second and third attempts at problem E1, he used memset and memcpy, yet he had never used these functions in any prior submission, including his submissions to other problems in this contest. His initial submission to E1 fills arrays with manual loops instead. This illustrates that LLMs introduce non-functional variations in their output when asked to fix their code, which is a useful signal when catching AI cheaters.

Moreover, in some of his submissions (those we believe were generated with o1), he used pre-increment (++i) rather than post-increment (i++). He never used pre-increment in any submission before this contest, yet many of his submissions in this contest do.

He also used emplace_back in his second submission to E1. emplace_back is typically chosen to avoid a temporary: push_back() with a pair first constructs a temporary pair and then moves (or copies) it into the vector, while emplace_back() constructs the element in place from its arguments. Yet he had never used it before. It is another stylistic habit that a programmer either has or does not.

Importantly, his use of solve() was highly inconsistent in this contest. Before the contest, he always used solve(); during it, several of his submissions skip solve() and keep all their logic in main().

Also suspicious is his inconsistent use of his I/O library and macros: some of his E1 submissions use cin/cout, while his submissions to other problems (e.g. C) use his own Read/Write I/O library.

Additionally, he includes headers such as <algorithm> and <cmath> alongside <bits/stdc++.h>, which in GCC already includes both of them. This looks like something an LLM would output for "correctness"; a human rarely spends contest time adding such redundant headers.

Examining WA Submissions

AI-generated code is often wrong: it may fail the sample cases or fail on later tests. When a user submits AI-generated code that fails and then prompts the AI for working code, the new version usually differs substantially from the old one, because the AI tends to rewrite the entire program instead of making the minor adjustments a human would make in an IDE.

Let's examine hypeskynick.

Like AryanDLuffy, hypeskynick struggled with B1/B2, even though they are easy problems (rated 1000 and 1100 on clist). In both of his failed submissions, hypeskynick did not use his template and kept all his logic in main(). These two submissions contrast sharply with his third, accepted submission, which uses his template and is significantly more convoluted. We believe o1 failed to solve B1/B2 for him, so he resorted to writing his own code, which explains why he took so long. One could argue that he skipped his template because he considered B1/B2 easy, but he used his template for A, which is significantly easier.

It is also very important to compare consecutive WA submissions. As noted for problem E1 in the stylistic differences section, AI produces different code, with unexpected non-functional changes, when asked to rewrite its code or fix an issue.

Comparing to Known AI Outputs

Since AryanDLuffy's submissions in this contest are clearly outputs of OpenAI's o1-mini, we can compare other users' submissions against them to help determine whether someone is cheating.

Let's examine hypeskynick.

His failed submissions for B1/B2 are extremely similar to AryanDLuffy's. Both users first failed B1 on pretest 2 and then failed B2 on the sample test. We know that o1 had significant trouble solving B1/B2, which is also reflected in his WAs.

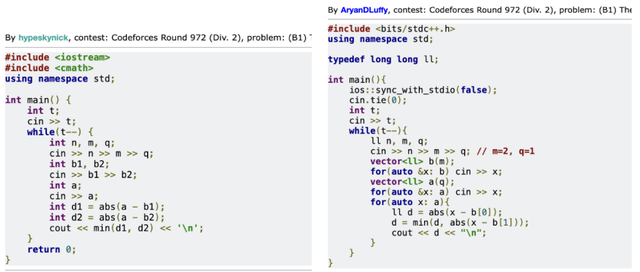

Let's look at their WA submissions on B1 (left is hypeskynick):

The two are practically identical, apart from non-functional differences in how the input is handled. Is it a coincidence that they wrote the same code and both got WA on B1 test 2? We think not.

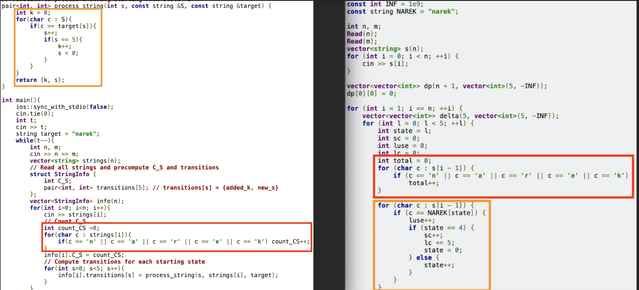

The beginnings of their C submissions are also very similar (right is hypeskynick):

Their E submissions are similar too (right is hypeskynick; left is AryanDLuffy's code with comments removed for clarity):

General Suspicious Behavior

A cheater's contest performance can often hint at misconduct. Performance alone is, of course, entirely insufficient grounds for accusing someone; however, combined with the evidence above, such general suspicious behavior strengthens the case that a user is cheating.

Looking at hypeskynick's performance history, this contest is clearly an outlier for him.

He had never before achieved a performance above 1750, yet he achieved a performance of about 2050 (according to Carrot) in yesterday's contest. He took 40 minutes to solve B1/B2 but only 14 minutes to solve C, which is rated 1821 on clist. He has also solved only 316 problems on Codeforces, which is very few for this level of performance. More importantly, he has solved only 12 problems rated above 1700, which makes his solve of E1, rated 2125 on clist, quite suspicious.

Conclusion

It is very difficult to determine conclusively whether someone is cheating. However, the evidence makes it clear that hypeskynick cheated using o1. Cheaters who are "smart" and erase such irregularities may not be caught so easily. Nevertheless, we hope this blog gives the community some insight into how to examine a user whose behavior seems suspicious.

Finally, it is disheartening to see people cheat in contests that are meant for learning and enjoying the hobby of competitive programming. Remember, the learning process matters far more than a few artificial numbers. Failing to solve a few problems just means you have room to improve. It is through struggle and adversity that you build character and become stronger; the results are merely an added benefit.