We would like to create a model that which when given a game state, it predicts the best move.

Lets say our game is the simple Tic Tac Toe. It is a small game and we can train the AI for it in a handful of minutes.

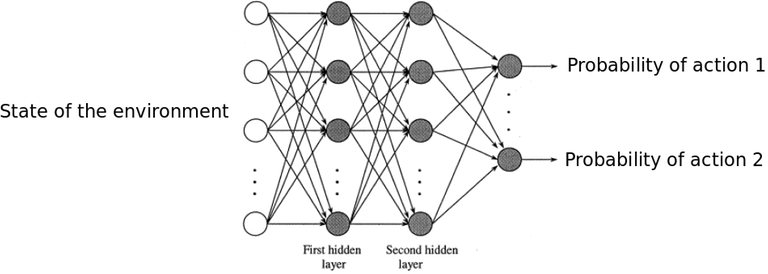

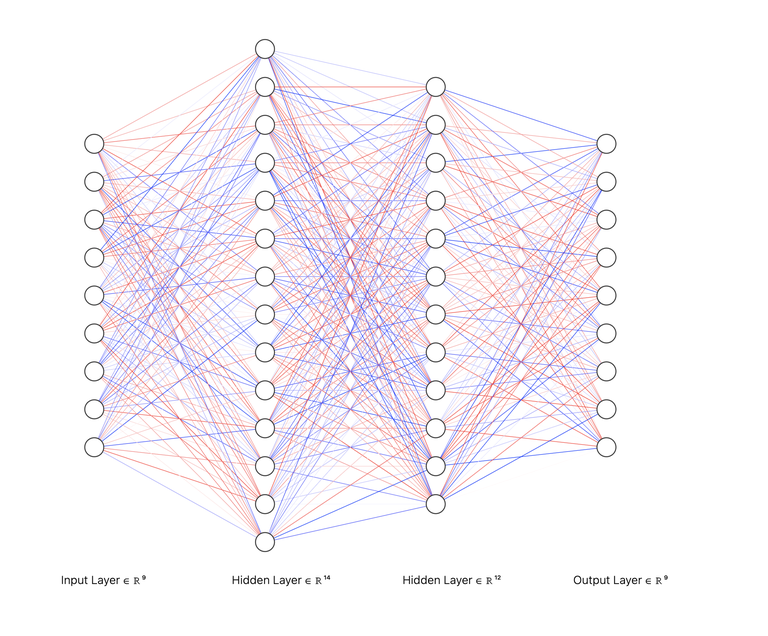

Here is our example neural network, reduced the number of hidden layer to avoid cluttering.

In the above network, the inputs are going to be board states. For example,

Lets assume the neural networks always predicts from the perspective of that the turn is of player -1.

If we can build a neural network, we can just flip the board and predict for the opposite player, easy peasy.

I use the algorithm mentioned at OPEN AI blog for training. Its almost same for the CartPole Reinforcement Learning environment from OpenAI GYM https://spinningup.openai.com/en/latest/algorithms/vpg.html.

Also please read into the blog http://karpathy.github.io/2016/05/31/rl/ from Andrej Karpathy from a beginner perspective.

So the training algorithm looks like this: 1. Run a number of simulations / battles / episodes 2. For every simulation — Run a play till the end of game, i.e. either someone wins, or the game ends in a draw. — Calculate the reward for the player. — Feed it into the Neural Network model for training.

- If we run this enough times, the Network gets better at avoiding the bad moves and maximizing the probability of good moves. And voila, we have it right here, create a model that is better than the opponent.