Preface

When the problem came out, someone asked for an explanation of how people come up with such solutions. I wanted to write this blog even before that, but I've just been busy. Anyway, two months later, I finally have the time to put this blog together.

But the reason I really wanted to write this blog is that 1503C - Travelling Salesman Problem has a very non-obvious greedy solution. From reading beginners' comments, it seems that a lot of people have this implicit belief that greedy algorithms are invented by guessing: for example, guessing an order in which to process things, guessing how to process things and then maybe proving correctness if you have time (but really skipping it, because who cares, right?). I think some contest sites even perpetuate this idea by presenting a greedy algorithm in the editorial without any kind of proof or explanation of where it comes from. Even here, you may have tutorials that say "here's the algorithm" and after that "here's the proof". While it is convenient (and completely fine!) to present the solution in this form (algorithm and then proof), it's not necessarily how the solution was invented.

While you can solve problems this way (guessing, and then proving or not proving), and I have solved problems this way, it is quite unusual for me. If someone were to think (perhaps implicitly) that greedy algorithms are invented like that, they'd be shocked when they read the solution to 1503C - Travelling Salesman Problem: how could anyone guess that, how could anyone come up with ordering the cities like this and then doing things like that?

And the answer is that you really can't. It would be very hard to guess this solution unless you already have some insights into the problem. That is roughly how I feel I solve problems: try to really understand the problem, gather insights about it. And at some point, you have found enough observations that you can piece together a solution. The solution isn't proved afterwards; the proof develops alongside the solution. It isn't always like this, but very often it is. (Of course, there is some guesswork involved, but it is more "I guess I should think about this thing", not "I guess the entire algorithm").

In the following, I'll try to show you what I mean.

The problem

Given $$$n$$$ cities and two arrays $$$a$$$ and $$$c$$$ of length $$$n$$$, you want to visit every city once, starting from city $$$1$$$ and returning to city $$$1$$$. The cost of going from $$$i$$$ to $$$j$$$ is

$$$\max(c_i, a_j - a_i)$$$

and you must minimize the total cost. We have $$$2 \le n \le 10^5$$$ and $$$0 \le a_i, c_i \le 10^9$$$.

The path to the solution

I tried to keep the following as true to my thought process as possible, so this is my path to my solution and not necessarily the shortest path to the cleanest solution. It may not even be the clearest explanation, because I really did try to mirror what was going on in my head.

Hmm.

We have to pay $$$\max(c_i, a_j - a_i)$$$ every time. If you ignore the $$$c_i$$$ terms, then the $$$a_j - a_i$$$ terms cancel out. So, let's think about the extra $$$c_i$$$ that we may have to pay sometimes.

Actually, let's do it the other way around. We always pay at least $$$c_i$$$ when leaving city $$$i$$$, right? So it would make sense to think of $$$\sum_i c_i$$$ as the baseline. And to think only about the extra $$$\max(0, a_j - a_i - c_i)$$$ that we have to pay sometimes.
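The split into a baseline and an extra can be written down as two tiny functions. This is only a sketch: the names `flightCost` and `extraCost` are made up for illustration, and the formulas are the ones from the text.

```cpp
#include <algorithm>
#include <cstdint>

// Hypothetical helper names; only the formulas come from the text.

// Full price of going from a city with values (ai, ci) to a city with beauty aj.
std::int64_t flightCost(std::int64_t ai, std::int64_t ci, std::int64_t aj) {
    return std::max(ci, aj - ai);
}

// The part of that price that exceeds the baseline ci.
std::int64_t extraCost(std::int64_t ai, std::int64_t ci, std::int64_t aj) {
    return std::max<std::int64_t>(0, aj - ai - ci);
}
```

For any values, `flightCost(ai, ci, aj)` equals `ci + extraCost(ai, ci, aj)`, which is why only the extras need to be minimized.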

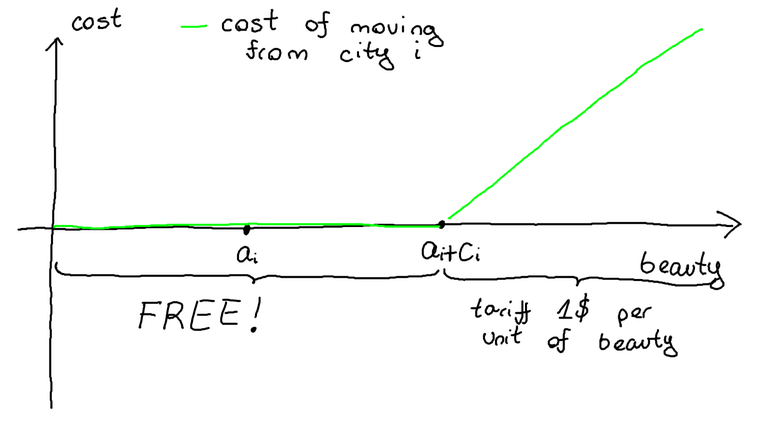

Actually, $$$\max(0, a_j - a_i - c_i) = \max(0, a_j - (a_i + c_i))$$$. So from city $$$i$$$, flying in the direction of decreasing beauty is always free. Flying in the direction of increasing beauty is free to a point, and after that we are hit with a tariff of 1 dollar per beauty. Visually, I imagine it something like this:

Some time passes without many ideas

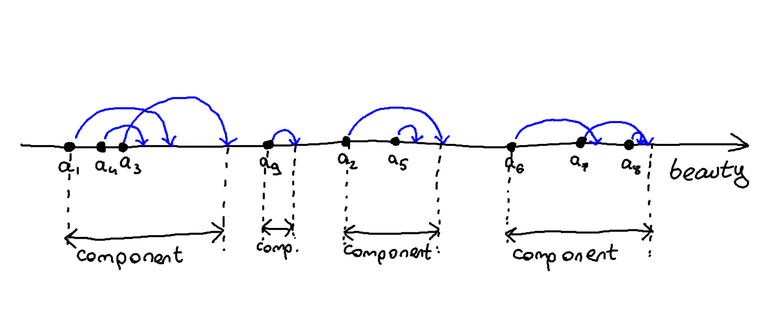

The idea of cities as points on a line seemed useful, let's do more of that. Draw from $$$a_i$$$ an arc to $$$a_i + c_i$$$ to denote the area of free travel from city $$$i$$$. If I do this for many steps, "connected components" (sorta) arise:

Experience tells me that within a component, I can move from anywhere to anywhere, free of charge. (See for example DFS-tree blog, observations 5 and 6 for a proof that can be adapted and the source of this intuition).
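One way to make these "components" concrete is a left-to-right sweep over the cities sorted by $$$a$$$: a new component starts whenever a city lies beyond everything the earlier arcs cover. The sketch below assumes that reading of the picture; `City` and `componentIds` are illustrative names, not something from the problem.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

struct City {
    std::int64_t a;  // position on the line
    std::int64_t c;  // the arc of free travel covers [a, a + c]
};

// Returns the component index of every city, listed in order of increasing a.
// Takes the cities by value so the caller's array is left untouched.
std::vector<int> componentIds(std::vector<City> cities) {
    std::sort(cities.begin(), cities.end(),
              [](const City& x, const City& y) { return x.a < y.a; });
    std::vector<int> ids(cities.size());
    int current = 0;
    std::int64_t reach = 0;  // rightmost point covered by the arcs seen so far
    for (std::size_t i = 0; i < cities.size(); ++i) {
        if (i == 0) {
            reach = cities[i].a + cities[i].c;
        } else if (cities[i].a > reach) {
            ++current;  // no earlier arc reaches this city: a new component
        }
        reach = std::max(reach, cities[i].a + cities[i].c);
        ids[i] = current;
    }
    return ids;
}
```

For the cities $$$(a, c) = (4,2), (8,4), (3,0), (2,3), (7,1), (0,1)$$$, the sorted order is $$$a = 0, 2, 3, 4, 7, 8$$$ and the sweep yields the components $$$\{0\}$$$, $$$\{2, 3, 4\}$$$, $$$\{7, 8\}$$$.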

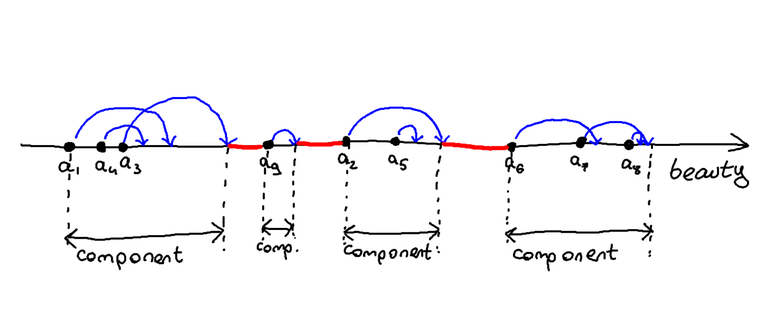

Of course, that's just normal shortest paths on graphs, without all this stuff about visiting every vertex once and only once. But I'm hopeful. You see these red bits in this image:

Those are the bits that I absolutely have to pay for. Do I have to pay for anything else? If I enter each component through the leftmost vertex, find some Hamiltonian path within the component from the leftmost vertex to the "most powerful one" (i.e. the one with highest $$$a_i + c_i$$$) and exit through the most powerful vertex, then I will only need to pay for the red bits. Can I always find such a path in a component?

Oh, I think I can't. For example, here:

I have to enter the component through the vertex $$$u$$$, and exit through $$$u$$$ too. There's no opportunity for me to visit vertices $$$v$$$, $$$w$$$ and $$$x$$$.

A bit of time passes

But wait, I don't actually need to visit all the vertices of the component. It is enough for me to find some free path from the leftmost vertex of the component to the most powerful one, not visiting any vertex twice. Then, I'll just visit all the missed vertices on my way back from right to left, which costs nothing extra because flying in the direction of decreasing beauty is always free. Can I always do that? Yeah, it looks like I can: if I am at the most powerful vertex of the component, there will always be some vertex to the left that can reach this vertex. And there will always be some vertex to the left of that vertex that can reach that vertex, and so on, until we reach the beginning of the component.
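To convince myself that such a tour really exists, here is a sketch that builds one explicitly. It uses one particular choice of forward path that is my assumption, not necessarily the path from the pictures: on the way right, step only through the cities that raise the largest $$$a_i + c_i$$$ seen so far, then sweep back through everything that was skipped. The names `buildTour` and `tourCost` are hypothetical.

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

struct City {
    std::int64_t a;
    std::int64_t c;
};

// `cities` must be sorted by a, with all values non-negative.
// Returns a visiting order as indices into `cities`; the tour closes by
// returning from the last index to the first one.
std::vector<int> buildTour(const std::vector<City>& cities) {
    const int n = static_cast<int>(cities.size());
    std::vector<bool> used(n, false);
    std::vector<int> order;
    // Forward pass: visit exactly the cities that improve the best a + c so far.
    std::int64_t reach = -1;
    for (int i = 0; i < n; ++i) {
        if (cities[i].a + cities[i].c > reach) {
            reach = cities[i].a + cities[i].c;
            order.push_back(i);
            used[i] = true;
        }
    }
    // Backward pass: pick up every skipped city from right to left.
    for (int i = n - 1; i >= 0; --i) {
        if (!used[i]) order.push_back(i);
    }
    return order;
}

// Total price of the closed tour, using the cost formula from the statement.
std::int64_t tourCost(const std::vector<City>& cities, const std::vector<int>& order) {
    const int n = static_cast<int>(order.size());
    std::int64_t total = 0;
    for (int k = 0; k < n; ++k) {
        const City& from = cities[order[k]];
        const City& to = cities[order[(k + 1) % n]];
        total += std::max(from.c, to.a - from.a);
    }
    return total;
}
```

On the sorted cities $$$(0,1), (2,3), (3,0), (4,2), (7,1), (8,4)$$$ this gives the order $$$0, 1, 3, 4, 5, 2$$$ with total cost $$$13$$$: the sum of all $$$c_i$$$ is $$$11$$$, and the two uncovered gaps (from $$$1$$$ to $$$2$$$ and from $$$6$$$ to $$$7$$$) add $$$2$$$.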

Ok! Now I have constructed a Hamiltonian circuit that will take me through each city and will always pay for the "red bits" and nothing else. It's also clear that this is the best solution because it's easy to see we absolutely have to pay for the red bits. So all that's left to do is implement code that will calculate the total length of the red segments. It looks like this:

    sort(arr, arr + n); // sorts by a
    ll ans = 0;  // total length of the red segments
    ll mxac = 0; // largest a + c among the cities processed so far
    for (int i = 0; i < n; i++) {
        if (i != 0 && mxac < arr[i].a) {
            ans += arr[i].a - mxac;
        }
        mxac = max(mxac, arr[i].a + arr[i].c);
    }

Add to ans the sum of $$$c_i$$$ and output that. That's it!
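If you want to double-check the claim that nothing cheaper exists, one option is to compare the greedy answer with an exhaustive search on tiny inputs. The sketch below wraps the loop above into a function and adds such a brute force; `greedyCost` and `bruteForceCost` are names I made up for this, and the brute force is only feasible for a handful of cities.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <numeric>
#include <vector>

struct City {
    std::int64_t a;
    std::int64_t c;
};

// Greedy answer: the sum of all c plus the total length of the uncovered gaps.
std::int64_t greedyCost(std::vector<City> cities) {
    std::sort(cities.begin(), cities.end(),
              [](const City& x, const City& y) { return x.a < y.a; });
    std::int64_t answer = 0;
    std::int64_t reach = 0;  // largest a + c among the cities processed so far
    for (std::size_t i = 0; i < cities.size(); ++i) {
        if (i != 0 && reach < cities[i].a) answer += cities[i].a - reach;
        reach = std::max(reach, cities[i].a + cities[i].c);
        answer += cities[i].c;
    }
    return answer;
}

// Exhaustive answer: try every cyclic order that starts at cities[0].
// The number of orders is (n - 1)!, so this is for very small n only.
std::int64_t bruteForceCost(const std::vector<City>& cities) {
    const int n = static_cast<int>(cities.size());
    std::vector<int> order(n);
    std::iota(order.begin(), order.end(), 0);
    std::int64_t best = -1;  // -1 means "no tour evaluated yet"; costs are non-negative
    do {
        std::int64_t total = 0;
        for (int k = 0; k < n; ++k) {
            const City& from = cities[order[k]];
            const City& to = cities[order[(k + 1) % n]];
            total += std::max(from.c, to.a - from.a);
        }
        if (best < 0 || total < best) best = total;
    } while (std::next_permutation(order.begin() + 1, order.end()));
    return best;
}
```

For example, on the cities $$$(a, c) = (1,9), (2,1), (4,1)$$$ both functions return $$$11$$$, and on $$$(4,2), (8,4), (3,0), (2,3), (7,1), (0,1)$$$ both return $$$13$$$.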

Final remarks

This was a bit of an experimental blog. I don't think I'll be doing many of them in the future, because it is quite rare that I can put my thought process into words so clearly. Also, I wanted to write this as a demonstration that solving a greedy problem doesn't mean guessing the entire solution at once, but I don't think such blogs are very useful as a general resource.

In any case, thank you for reading, and thanks to Monogon for creating this nice problem!