I've been going through the CSES Problemset with the goal to write shortest (but not obfuscated) Python solutions.

Due to smaller competition pool, I managed to accomplish this on a variety of tasks. But cutting characters sometimes introduces undesirable slowdowns, mostly in IO.

The problem inputs with $$$2\cdot 10^5$$$ to $$$10^6$$$ integers are big enough to require third or half of the execution time just to read/print and very often result in TLE. In that case it is important to be sure you are reading as quickly as possible. In C++ writing the same algorithms works well even with inefficient IO.

I noticed io.Bytes does not help at all and adds too many characters. Printing gets as fast as possible (<1% of exec time) with

print(' '.join(map(str, S)))

where S is a list of answers. Having multiple calls to print or print(*S) will be too slow.

Similarly, calling input or sys.stdin.* repetitively also isn't optimal. What I usually do is:

n, m, q, *e = map(int, open(0).read().split())

# example of handling unpacking

E = [(a, b) for a, b in zip(*[iter(e)]*2)]

This is fast, but the snippet below is much faster:

# fast variant

e = [*map(int, open(0).read().split()]

n, m, q = e[:3]

E = [(a, b) for a, b in zip(*[iter(e[3:])]*2)]

The expansion *e, = map(int, open(0).read().split()) takes ~30% more to execute (yet it saves a single character).

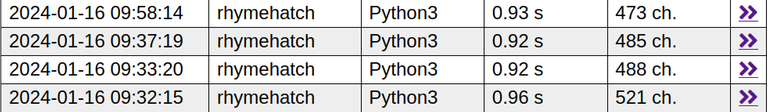

A good recent example is on Distance Queries where doing the fast variant gives a lot of room to not TLE:

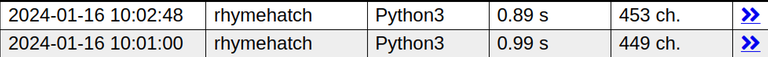

Or Counting Paths where I couldn't get it to pass without the fast variant. Adding code optimizations to already short code in most cases does not help. Short code, if not heavily obfuscated, means that most of the useful invariants in the solution are fully exploited. Examples of where you might think you're slow when trying to write short programs:

- doing binary lifting 18 times every query vs doing it only

depth[a].bit_length()times will improve exec time but compared to IO it is insignificant. To supportdepth[a].bit_length()you have to extend the DFS to maintain this data and gains in exec time soon disappear, - list[(int, int)] as your DFS queue is slower than

list[int], using twoQ.pop()operations to get all of the metadata is faster, takes more characters but still nothing compared to IO, Q.append(x)is faster but not shorter thanQ += x,yet the speedup is negligible in the grand scheme of things,A = L.copy()vs*A, = L

Of course, there might be a case where choosing the optimal language constructs is necessary but I haven't stumbled upon such case yet.