Hello Codeforces,

Today (as on any day in the future) I will present a way to correctly handle a corner case of the well-known small-to-large trick, and show how it can be used to solve a certain set of problems. This blog is written with encouragement from the Month of Blog Posts initiative.

The standard example.

Let's try to solve the following problem: Cat In a Tree, BOI 2017. Although this problem can be solved with a greedy algorithm, assume we have no idea what the greedy is or why it would be correct, and fall back to the basics:

The standard DP.

How can we formulate a DP for this problem? We need to keep track of how to select multiple nodes such that they are pairwise at distance at least $$$D$$$. When we have a valid solution in a subtree and want to merge it with that of a sibling, only one parameter matters: the distance from the subtree's root to the closest chosen node, since any other pair of chosen nodes whose path passes through the common ancestor is at least as far apart. As this seems to be sufficient, we arrive at the following DP definition: $$$dp_{\texttt{node}, \texttt{closest}} = $$$ the largest cardinality of any solution contained in the subtree of $$$\texttt{node}$$$ such that the closest chosen node is at distance $$$\texttt{closest}$$$ from $$$\texttt{node}$$$.

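The transition from the sons into their father looks as follows (for two sons $$$s1$$$ and $$$s2$$$): $$$dp_{\texttt{root}, \texttt{min+1}} =\max(dp_{\texttt{s1}, \texttt{min}} + dp_{\texttt{s2}, \texttt{x}},dp_{\texttt{s1},\texttt{x}} + dp_{\texttt{s2}, \texttt{min}})$$$, taken over all $$$x$$$ with $$$x + min + 2 \ge D$$$ and $$$min \le x$$$. The $$$+2$$$ appears because the two closest chosen nodes sit at distances $$$min + 1$$$ and $$$x + 1$$$ from the root, so the path between them has length $$$min + x + 2$$$.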

It is clear that the transition for more than two sons is made progressively, by merging the $$$dp$$$ computed so far in the root with yet another son. Furthermore, we can tweak the definition to represent instead the largest cardinality of any solution whose closest chosen node to the root is at distance at least $$$closest$$$ (i.e. take suffix maximums). This removes the unnecessary linear traversal of index $$$x$$$, leaving only $$$min$$$ to be iterated.
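To see why the suffix-maximum definition removes the loop over $$$x$$$, here is a minimal sketch comparing both merges. It assumes that both arrays have length $$$D + 1$$$, are non-increasing, and are already indexed by distance from the common root, so the condition reads $$$min + x \ge D$$$ rather than $$$min + x + 2 \ge D$$$. The names `mergeQuadratic` and `mergeLinear` are made up for this illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// a[t], b[t]: best solution on each side whose chosen nodes are all at
// distance >= t from the common root (suffix-maximum definition).
// Assumption: both arrays have length D + 1 and are non-increasing.

// Straightforward merge: iterate over both the minimum and its partner x.
std::vector<int> mergeQuadratic(const std::vector<int>& a,
                                const std::vector<int>& b, int D) {
    assert(static_cast<int>(a.size()) == D + 1);
    assert(static_cast<int>(b.size()) == D + 1);
    std::vector<int> out(D + 1, 0);
    for (int mn = 0; mn <= D; ++mn) {
        for (int x = mn; x <= D; ++x) {
            if (mn + x < D) continue;  // the two sides would be too close
            out[mn] = std::max({out[mn], a[mn] + b[x], a[x] + b[mn]});
        }
    }
    // Restore the "distance at least t" meaning.
    for (int t = D - 1; t >= 0; --t) out[t] = std::max(out[t], out[t + 1]);
    return out;
}

// Same merge, but only the smallest admissible x is tried: since the arrays
// are non-increasing, no larger x can give a better sum.
std::vector<int> mergeLinear(const std::vector<int>& a,
                             const std::vector<int>& b, int D) {
    assert(static_cast<int>(a.size()) == D + 1);
    assert(static_cast<int>(b.size()) == D + 1);
    std::vector<int> out(D + 1, 0);
    for (int mn = 0; mn <= D; ++mn) {
        const int x = std::max(mn, D - mn);
        out[mn] = std::max(a[mn] + b[x], a[x] + b[mn]);
    }
    for (int t = D - 1; t >= 0; --t) out[t] = std::max(out[t], out[t + 1]);
    return out;
}
```

Both functions return the same array on any pair of non-increasing inputs; the second one simply skips the partners that cannot improve the answer.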

To sum up these observations in one comprehensive formula, let's rephrase the transition algorithm as follows:

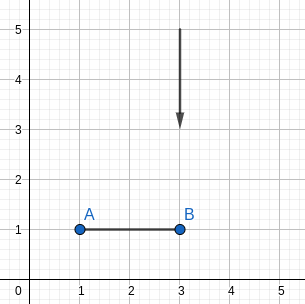

- First, we take the $$$dp$$$ array of a son $$$^\dagger$$$. For every $$$closest$$$, we assign $$$dp_{\texttt{node}, \texttt{closest+1}} = dp_{\texttt{son}, \texttt{closest}}$$$. We also assign $$$dp_{\texttt{node}, \texttt{0}} = dp_{\texttt{son},\texttt{D-1}} + 1$$$ (i.e. adding the node itself to the solution).

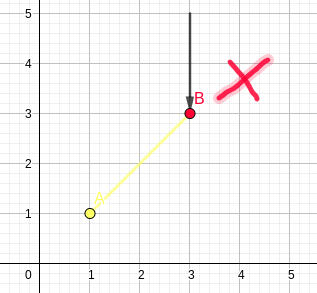

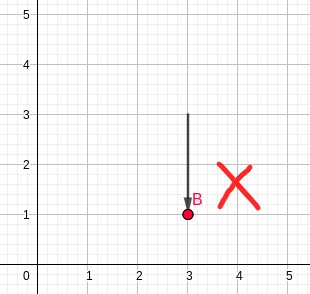

- To further merge this $$$dp$$$ array with that of another son, we assign: $$$dp_{\texttt{node}, \texttt{T}} = \max( \,(dp_{\texttt{node},\texttt{T}})^{v_1}\,,\,(dp_{\texttt{node}, \texttt{H}} + dp_{\texttt{son}, \texttt{T}})^{v_2}\,,\,(dp_{\texttt{node}, \texttt{T}} + dp_{\texttt{son}, \texttt{H}})^{v_3}\,)$$$ where $$$H$$$ is $$$\max(T, D-T-1)$$$. Then, we restore the $$$dp$$$ definition by setting $$$dp_{\texttt{node}, \texttt{T}} = \max( dp_{\texttt{node}, \texttt{T}}, dp_{\texttt{node}, \texttt{T+1}})$$$ for decreasing $$$T$$$. (The markings $$$v_1, v_2, v_3$$$ are footnotes, not exponents.)
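The two steps above can be sketched in code as follows. This is only an illustration under several assumptions of mine, not the optimized version: the tree is given as a parent array with `parent[v] < v` and root `0`; every node keeps a full array of length $$$D + 1$$$, so the sketch runs in $$$O(N \cdot D)$$$; each node starts from the solution consisting of itself alone and then merges every son, which gives the same values as the first step; and a son's array is re-indexed to distances from the node before merging, so the partner index becomes $$$\max(T, D - T)$$$ here. The name `maxSpreadSet` is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>
#include <vector>

// Largest set of nodes with pairwise distance >= D (hypothetical helper).
// Assumed input: node 0 is the root, parent[v] < v for v >= 1, parent[0] unused.
int maxSpreadSet(const std::vector<int>& parent, int D) {
    const int n = static_cast<int>(parent.size());
    assert(n >= 1 && D >= 1);
    D = std::min(D, n);  // distances in a tree are below n, so a larger D changes nothing

    // dp[v][t]: best solution inside the subtree of v whose chosen nodes are
    // all at distance >= t from v (suffix-maximum definition), t = 0..D.
    std::vector<std::vector<int>> dp(n, std::vector<int>(D + 1, 0));
    for (int v = 0; v < n; ++v) dp[v][0] = 1;  // choose v alone

    // Sons have larger indices than their father, so when we reach c,
    // dp[c] is already final and can be merged into its father.
    for (int c = n - 1; c >= 1; --c) {
        assert(0 <= parent[c] && parent[c] < c);
        std::vector<int>& cur = dp[parent[c]];

        // Re-index the son's array to distances measured from the father.
        std::vector<int> shifted(D + 1), merged(D + 1);
        for (int t = 0; t <= D; ++t) shifted[t] = dp[c][std::max(t - 1, 0)];

        for (int t = 0; t <= D; ++t) {
            const int h = std::max(t, D - t);  // closest allowed partner distance
            merged[t] = std::max(cur[t] + shifted[h], cur[h] + shifted[t]);
        }
        // Restore the "distance at least t" meaning.
        for (int t = D - 1; t >= 0; --t) merged[t] = std::max(merged[t], merged[t + 1]);
        cur = std::move(merged);
    }
    return dp[0][0];
}
```

For example, on a path of five nodes, `maxSpreadSet({-1, 0, 1, 2, 3}, 2)` evaluates to 3 (nodes 0, 2 and 4).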

It turns out that $$$\dagger$$$ makes this entire algorithm $$$O(N)$$$.